- The AI accelerator chips market is projected to exceed $360 billion by 2032, up from $28.5 billion in 2024, with about 37.4% CAGR.

- In 2024, GPUs accounted for about 35% of AI accelerator revenue, while custom AI ASICs were the fastest-growing segment at 43%+ CAGR.

- Cloud data centers dominate AI chip demand at roughly 75%, while edge AI chips are growing at more than 40% annually.

- North America held about 44% of the AI accelerators market in 2024, and NVIDIA commanded over 80% of data-center AI accelerator hardware.

- Hyperscalers like Google with TPU and AWS with Inferentia/Trainium have built in-house AI chips to reduce cost and reliance on merchant silicon.

- China’s National IC Investment Fund, known as the Big Fund, has poured tens of billions into domestic semiconductors, and more than 1,200 AI chip companies were registered in China by 2023.

- Since 2022, U.S. export controls have restricted sales of high-end AI chips to China, prompting NVIDIA to offer modified A800 and H800 GPUs for Chinese customers.

- Europe’s Chips Act of 2023 commits €43 billion to bolster chip design and manufacturing, aiming to raise Europe’s global production share to 20% by 2030.

- Cerebras Systems markets a wafer-scale engine (WSE) with about 850,000 cores, a chip the size of a dinner plate.

- McKinsey projects that by 2030 about 50% of logic chip demand could be for AI workloads, with inference likely forming about 60% of AI accelerator revenue.

Introduction: The explosion of artificial intelligence has unleashed an unprecedented gold rush for AI accelerator chips – specialized processors that power everything from chatbots to self-driving cars. As AI models grow more complex and ubiquitous, demand is soaring for GPUs, TPUs, NPUs, ASICs, FPGAs and other custom silicon optimized for AI workloads. This is spurring a fierce global race among tech giants and startups alike to dominate a market projected to reach hundreds of billions of dollars by 2030 nextmsc.com fingerlakes1.com. North America, China, Europe and others are all investing heavily in AI chip capabilities, seeing them as strategic infrastructure for economic and technological leadership. This report provides a comprehensive market analysis for 2025–2030 – covering the major players (NVIDIA, AMD, Intel, Google, Amazon, Apple, Alibaba, Huawei, Graphcore, Cerebras, Tenstorrent, and more), key regions, use cases from cloud data centers to edge devices, as well as market drivers, challenges, technological trends, regulatory factors, and financial forecasts (TAM, CAGR, segment breakdowns). The era of AI accelerators is upon us, and the stakes could not be higher in the quest to supply the brains of our AI-powered future.

Market Overview & Growth Forecast (2025–2030)

The AI accelerator chips market is expected to skyrocket from $28.5 billion in 2024 to over $360 billion by 2032, growing at an astounding 37.4% CAGR globenewswire.com.

The global market for AI accelerator chips is entering a phase of explosive growth. Industry forecasts converge on annual revenues in the hundreds of billions of dollars by the end of the decade. For instance, Next Move Strategy Consulting estimates the AI chip market at about $52.9 billion in 2024, climbing to $295.6 billion by 2030 (33.2% CAGR) nextmsc.com. Similarly, Grand View Research projects roughly $305 billion by 2030 (around 29% CAGR) techstrong.ai. Some Wall Street analysts are even more bullish – Bank of America forecasts the AI accelerator market could reach $650 billion in annual revenue by 2030, a more than threefold jump from ~$200B in 2025 fingerlakes1.com. While the estimates vary widely in their starting figures, there is consensus that annual growth of 25–35%+ will far outpace the broader semiconductor industry as AI adoption accelerates.
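
As a rough cross-check, the growth rates implied by these forecasts can be recomputed from their endpoints. The sketch below is illustrative only: the `cagr` helper and the dictionary labels are not taken from any cited report, and the inputs are the rounded figures quoted above (with "over $360 billion" treated as exactly $360 billion).

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values `years` apart."""
    return (end_value / start_value) ** (1 / years) - 1


# (start in $B, end in $B, number of years), using the rounded figures quoted above
forecasts = {
    "GlobeNewswire-cited report, 2024-2032": (28.5, 360.0, 8),
    "Next Move Strategy Consulting, 2024-2030": (52.9, 295.6, 6),
    "Bank of America, 2025-2030": (200.0, 650.0, 5),
}

for label, (start, end, years) in forecasts.items():
    print(f"{label}: implied CAGR {cagr(start, end, years):.1%}")
```

Run as written, the implied rates come out at roughly 37%, 33%, and 27%, which matches the quoted CAGRs where the source states them and sits within or near the 25–35%+ range cited above.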

Driving this exponential expansion is the insatiable compute appetite of modern AI. Each new generation of models (e.g. large language models and generative AI) demands more parallel processing power, memory bandwidth, and energy efficiency than traditional CPUs can provide. This has made specialized accelerators indispensable for AI training and inference. As one report summarizes: “AI Accelerator Chips market growth is driven by surging demand for generative AI, edge computing, and energy-efficient chips, with innovations from leaders like Nvidia and Google.” globenewswire.com. In other words, booming use cases – from training massive GPT-style models in the cloud to running real-time AI on smartphones and sensors at the edge – are fueling a massive upgrade cycle in hardware.

Notably, the segment breakdown reveals key dynamics in this growth. In 2024, GPUs (graphics processing units) still accounted for roughly 35% of AI accelerator chip revenue, thanks to their versatility and head start as the workhorse of deep learning globenewswire.com. However, custom AI ASICs (application-specific chips like Google TPUs or other NPUs) are the fastest-growing segment, projected to surge at 43%+ CAGR as organizations seek tailored, power-efficient chips for specific AI workloads globenewswire.com. In terms of deployment, cloud data centers currently dominate (~75% of AI chip demand) given the requirements of training large models on clusters of accelerators globenewswire.com. Yet edge computing is quickly catching up – with on-device AI needs driving a >40% annual growth in edge AI chips globenewswire.com. By industry, consumer electronics (smartphones, smart devices) made up about 34% of AI chip usage in 2024 (through integrated NPUs in phones, etc.), while automotive AI chips (for autonomous driving and ADAS) are set to be the fastest-growing vertical at ~42.6% CAGR as self-driving capabilities expand globenewswire.com globenewswire.com. Regionally, North America leads the market with ~44% share in 2024 – unsurprising given the presence of major Silicon Valley players – but Asia-Pacific (especially China) is poised for the most rapid growth (near 40% CAGR) owing to massive investments in AI infrastructure, and could significantly close the gap by 2030 globenewswire.com globenewswire.com.

In sum, all signs point to a multi-hundred-billion dollar TAM by 2030 for AI accelerators. The combination of breakneck demand (from ever-expanding AI applications) and continuous technology advancements (smaller transistors, new architectures) will likely sustain high double-digit growth for the foreseeable future. Next, we examine the competitive landscape and how different regions and companies are maneuvering to capture this burgeoning market.

North America: Silicon Valley’s AI Silicon Dominance

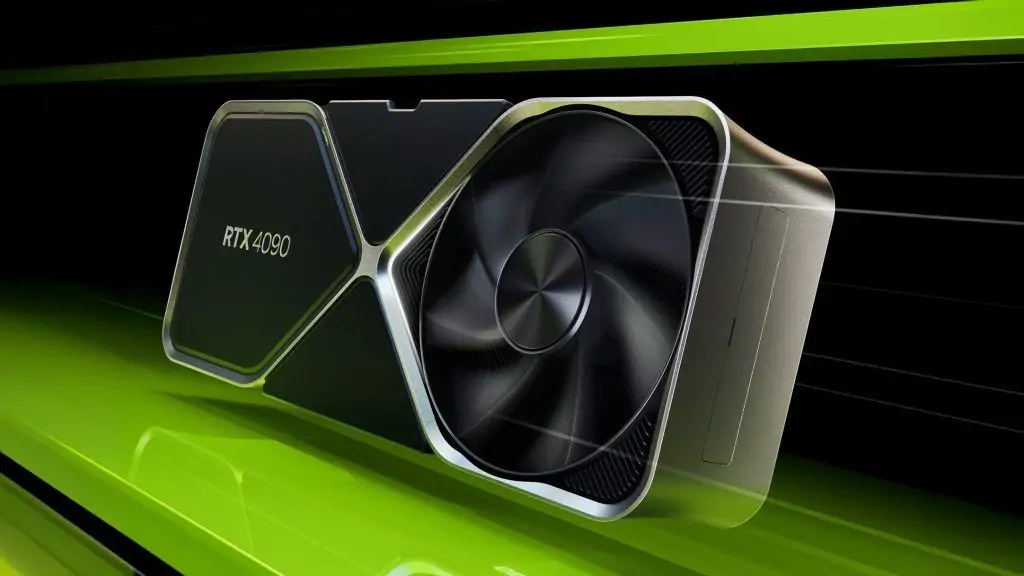

North America – led by the United States – currently stands at the epicenter of the AI chip industry. U.S. companies pioneered the GPU computing revolution that enabled the modern AI boom and continue to hold an outsized edge in technology and market share. NVIDIA, in particular, towers over the landscape: it effectively “remains essentially a monopoly for critical AI infrastructure” with its GPU platforms, “with pricing power, margin control, and demand from every hyperscaler and government AI buildout on the planet.” fingerlakes1.com. NVIDIA’s data center GPUs (like the A100 and H100) have become the default hardware for training cutting-edge AI models – to the point that a single training run for OpenAI’s GPT-4 reportedly required some 25,000 NVIDIA GPUs running for months research.contrary.com. Little wonder that NVIDIA enjoys an estimated 80%+ share of AI accelerator hardware in data centers, and saw its valuation soar as AI investment surged in 2023–2025. The company continues to push new frontiers (e.g. “Blackwell” generation GPUs and networking) and its CEO Jensen Huang famously likened the AI demand boom to a “golden wave” that is still in its early stages fingerlakes1.com fingerlakes1.com.

NVIDIA’s dominance, however, is spurring intense competition. AMD (Advanced Micro Devices) has emerged as the chief rival in high-performance GPUs for AI. AMD’s Instinct MI200 and MI300 series accelerators, built on chiplet designs (with the MI300 generation moving to 5nm and, in the MI300A variant, integrating CPU and GPU in one package), are aimed at closing the gap with NVIDIA in both training and inference tasks. With its acquisition of Xilinx, AMD also inherited FPGA technology that can be applied to adaptive AI accelerators. Major cloud firms like Microsoft and Google have begun testing AMD Instinct GPUs as an alternative in their data centers. While AMD’s market share in AI accelerators remains modest, the company is aggressively iterating and positioning itself as a viable second source – a dynamic noted by analysts who still see NVIDIA “far ahead” but with rivals gearing up fingerlakes1.com.

Meanwhile, Intel – long the giant of general-purpose computing – has been scrambling to establish a foothold in AI accelerators. After some false starts (e.g. scrapping its Nervana AI chips), Intel acquired Israeli startup Habana Labs and now offers the Gaudi series of AI training and inference processors. Gaudi accelerators have been adopted by some cloud providers (AWS offers Gaudi-based instances) as a lower-cost alternative for certain workloads. Intel also remains a leader in edge AI via its OpenVINO toolkit and the dominance of its Mobileye division in vision chips for advanced driver-assistance (Mobileye’s EyeQ chips power many cars’ ADAS). However, Intel faces tough sledding in the data center accelerator space – reports in 2025 even suggested Intel might scale back on discrete AI GPU efforts amid NVIDIA’s overwhelming lead mitrade.com. Still, with massive R&D resources and “a push toward chiplet and advanced packaging” as part of its strategy, Intel cannot be counted out in the long term.

North America’s strength also comes from its hyperscaler cloud giants and a vibrant startup ecosystem. Companies like Google, Amazon, Meta, and Microsoft have taken matters into their own hands by designing custom AI silicon for internal use, in order to lower costs and reduce reliance on NVIDIA. Google was a trendsetter here with its TPU (Tensor Processing Unit), an ASIC optimized for neural network ops that Google has deployed since 2015 in its data centers (now on TPUv4 and TPUv5). Google’s TPUs have achieved significant performance/$ for workloads like language models and are offered through Google Cloud. Amazon Web Services (AWS) likewise developed Inferentia and Trainium chips for AI inference and training, respectively, to power AWS cloud instances at lower cost than GPUs for certain tasks. Hyperscalers will continue this “build vs buy” strategy – using custom ASICs for scale when advantageous, but still buying merchant chips for versatility, as a TD Securities analysis noted tdsecurities.com. In fact, one study estimates that by 2030 around 15% of AI accelerator spending will be on custom in-house chips (up from ~10% today) – growing ~30% annually to ~$50B – while merchant silicon (GPUs from NVIDIA/AMD and others) still doubles to nearly $300B tdsecurities.com.
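
The arithmetic behind that custom-versus-merchant split can be sketched as follows. The dollar figures are the rounded ones quoted above; the five-year horizon (`YEARS`) and the helper name `implied_base` are assumptions made for illustration, since the study's exact base year is not stated here.

```python
def implied_base(value_2030: float, annual_growth: float, years: int) -> float:
    """Back out the starting value that compounds to `value_2030`."""
    return value_2030 / (1 + annual_growth) ** years


CUSTOM_2030 = 50.0     # $B, custom in-house chips (rounded, from the text)
MERCHANT_2030 = 300.0  # $B, merchant silicon ("nearly $300B")
YEARS = 5              # ASSUMPTION: "today" is taken to mean 2025

# Share of 2030 spending going to custom chips
custom_share_2030 = CUSTOM_2030 / (CUSTOM_2030 + MERCHANT_2030)

# Implied starting points: custom grows ~30% a year, merchant "doubles"
custom_base = implied_base(CUSTOM_2030, 0.30, YEARS)
merchant_base = MERCHANT_2030 / 2
custom_share_base = custom_base / (custom_base + merchant_base)

print(f"Custom share in 2030: {custom_share_2030:.1%}")
print(f"Implied custom spend today: ${custom_base:.1f}B")
print(f"Implied custom share today: {custom_share_base:.1%}")
```

Under these assumptions, custom silicon comes to about 14% of 2030 spending, in line with the quoted "around 15%". The implied starting share lands somewhat below the quoted ~10%, a gap that the rounding of the inputs and the assumed horizon could plausibly account for.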

The region also boasts numerous AI chip startups pushing novel architectures. Notable examples include Graphcore (UK-origin but with U.S. presence) with its IPU processors, Cerebras Systems with its record-setting wafer-scale engine (a single enormous chip the size of a dinner plate for AI training), SambaNova Systems (offering AI systems with reconfigurable dataflow chips), Groq (streamlined inference processors), Mythic (analog AI chips), Hailo (edge AI accelerators from Israel), and Tenstorrent (Canada-based, led by famed chip architect Jim Keller, designing RISC-V based AI chips). Venture capital investment has poured into these challengers. Since 2016, over $6 billion has been invested into AI chip startups like Cambricon, Graphcore, Cerebras, Groq, SambaNova, and Habana galileo.ai. Many have reached lofty unicorn valuations – for example Graphcore raised ~$680M and hit a $2.8B valuation at its peak research.contrary.com, while Lightmatter and Groq in the US each topped ~$1B valuations research.contrary.com. This flourishing startup scene in North America (and Europe/Israel) underscores both the huge opportunity and the technological diversity in play – from wafer-scale designs to photonic computing – as entrepreneurs seek to leapfrog current incumbents.

However, the North American lead is not unassailable. Competitors abroad are rising quickly, and U.S. dominance has also drawn geopolitical scrutiny (e.g. export controls) which we’ll discuss later. First, let’s survey the parallel efforts in China, Europe, and other regions vying to build their own AI chip prowess.

China: Scaling Up Under Constraints

China views AI chips as a core strategic priority and has mobilized enormous resources to cultivate a domestic industry, especially as U.S. export restrictions tighten. Chinese tech giants and startups alike are developing a range of accelerators to serve the country’s booming AI application market – from cloud data centers to surveillance, smartphones, and autonomous vehicles. Notably, Alibaba, Tencent, Baidu, and Huawei – sometimes dubbed China’s “AI Dragons” – have all designed in-house AI chips in recent years:

- Alibaba unveiled the Hanguang 800 NPU in 2019 for AI inference in its cloud, and has semiconductor subsidiaries (like T-Head) producing AI-centric SoCs for cloud and edge.

- Baidu created the Kunlun AI accelerator, deployed both in Baidu Cloud and at the edge (e.g. powering Baidu’s voice assistants and autonomous driving platform). Baidu’s Kunlun 2 chip (7nm) launched in 2021 with competitive performance for NLP and computer vision tasks.

- Huawei developed the Ascend series AI chips (Ascend 910 for training, Ascend 310 for edge) as part of its broader strategy to build full-stack AI solutions. The Ascend 910 (7nm) was one of the world’s most powerful AI training chips upon release, and Huawei uses these in its cloud and AI hardware (like the Atlas server modules). Huawei’s smartphone division also integrated NPUs (Neural Processing Units) in its Kirin mobile SoCs, enabling on-device AI features – though U.S. sanctions have limited its ability to produce cutting-edge phone chips recently.

- Tencent and ByteDance (TikTok’s parent) have reportedly initiated projects to design custom AI chips to use in their data centers, given the scale of AI recommendation algorithms and content moderation AI they run. And SenseTime, a leading AI software unicorn in China, has also dabbled in AI chip design to optimize its AI inference in facial recognition deployments.

Beyond the giants, Chinese startups have proliferated – often with government backing – to build indigenous AI accelerators. Notable names include Cambricon Technologies, which began by supplying neural network IP for Huawei’s early Kirin NPUs and later launched its own AI processor line (Cambricon’s chips have been used in servers by Alibaba and others). Horizon Robotics focuses on automotive AI chips for driver-assistance and has deals with Volkswagen and Chinese OEMs. Biren Technology made headlines developing a high-end GPU-like AI chip (the Biren BR100) fabbed on TSMC 7nm – aimed to rival NVIDIA A100 – though export restrictions on EDA tools forced some spec downgrades. Other players like Iluvatar, Denglin, Montage, Black Sesame and many more are targeting niches from cloud training to edge AI in IoT. Collectively, this ecosystem has benefited from major government funding (such as China’s National IC Investment Fund, sometimes called the “Big Fund,” which has poured tens of billions into semiconductors). According to industry reports, over 1,200 AI chip companies had registered in China by 2023, spurred by generous incentives, though only a fraction have reached advanced product stages.

A significant challenge for China’s AI chip push has been the U.S. trade restrictions on advanced semiconductors. Since 2019, the U.S. government has progressively tightened export controls, and since 2022 it has barred companies like NVIDIA from selling their top-of-the-line GPUs (A100, H100) to Chinese customers. In 2022 and 2023, new rules also restricted U.S. and allied equipment makers from supplying China with the tools to fabricate cutting-edge chips below ~14nm without licenses. These moves directly impact AI accelerators, as high-end training chips require the latest process nodes and often U.S.-origin IP. Suppliers and customers have adapted – for example, NVIDIA introduced modified A800 and H800 GPUs for China, capped in performance to meet U.S. export limits (lower interconnect speeds, etc.), so that Chinese cloud providers could still get somewhat close to the state of the art. Meanwhile, China is racing to achieve self-sufficiency in critical chip tech. Domestic foundry SMIC surprised observers by producing 7nm-class chips (using DUV lithography techniques) – reportedly used in a new Huawei smartphone and AI accelerator – despite not having access to EUV tools globenewswire.com. This indicates China’s determination to advance even under sanctions: for example, SMIC’s DUV innovations enabled fabrication of Huawei’s Ascend 920 AI chip at ~7nm, helping “overcome export restrictions and stay competitive” globenewswire.com. The government has also launched programs to develop homegrown EDA software, semiconductor materials, and talent to shore up the supply chain.

Despite these hurdles, China’s sheer market scale virtually guarantees it will be a major player. The nation produces and consumes enormous quantities of data – from its 1+ billion mobile users to widespread AI-powered services – creating huge domestic demand for accelerators. Sectors like surveillance (with millions of cameras employing AI vision), fintech, e-commerce, and manufacturing automation all rely on AI chips. Moreover, China’s push in autonomous vehicles and smart infrastructure provides a strong catalyst (Baidu’s Apollo self-driving platform, for instance, uses local AI chips for onboard perception). By some estimates, China could account for nearly one-third of global AI chip demand by 2030, though much of that is currently served by foreign chips. If China’s native industry continues to mature, it could capture a large slice of this value internally. Beijing’s goal is to dramatically raise its self-reliance: China’s government has stated aims to produce 70% of chips it uses by 2025 (all types, not just AI), and to close the gap in advanced logic chips by 2030. While the highest-end fabrication (5nm, 3nm nodes) may remain elusive in the near term, Chinese AI chip designers can leverage 14nm/7nm processes to produce highly competitive accelerators for many applications – especially inference at the edge, where slightly older process nodes are often sufficient.

In summary, China is sprinting to build a complete AI chip ecosystem under a mix of necessity and ambition. The U.S.-China tech rivalry has in some ways accelerated China’s resolve to invest in chips. We can expect China to introduce more capable AI accelerators each year, gradually reducing reliance on imports. However, sanctions will also constrain how quickly Chinese chips can reach parity on absolute performance (given limits on top-tier manufacturing). This dynamic – Chinese innovation under external constraints – will be a defining theme in the AI accelerator race through 2030.

Europe and Other Regions: Striving for Semiconductor Sovereignty

Europe, while not home to an NVIDIA or Huawei equivalent in AI chips, is nonetheless an important arena in the global landscape. European industries (automotive, aerospace, healthcare, etc.) are huge consumers of semiconductors and increasingly of AI accelerators, even if the chips themselves often come from abroad. Recognizing the strategic importance, the EU has launched a concerted effort to boost its semiconductor sovereignty. The flagship initiative is the EU Chips Act, enacted in 2023, which mobilizes €43 billion in public and private funding to strengthen Europe’s chip design and manufacturing capabilities eetimes.eu. A key goal is to double Europe’s share of global chip production to 20% by 2030, up from a historic low of ~9% today eetimes.eu. This is an ambitious target (especially as the worldwide market is expanding), but it reflects Europe’s desire to not be entirely dependent on foreign suppliers for critical technology.
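
To see why an expanding market makes the share target harder, consider how much Europe's own output would have to grow. This is a back-of-the-envelope sketch: the 9% and 20% shares come from the text above, while the market growth scenarios and the function name `required_output_growth` are hypothetical.

```python
def required_output_growth(share_now: float, share_target: float,
                           market_growth: float) -> float:
    """Factor by which a region's output must grow to move from
    `share_now` to `share_target` while the total market grows by
    `market_growth` (1.0 means a flat market)."""
    return (share_target / share_now) * market_growth


SHARE_NOW = 0.09     # ~9% today (from the text)
SHARE_TARGET = 0.20  # 20% by 2030 (EU Chips Act goal)

# HYPOTHETICAL scenarios for total market growth through 2030
for market_growth in (1.0, 1.5, 2.0):
    factor = required_output_growth(SHARE_NOW, SHARE_TARGET, market_growth)
    print(f"market x{market_growth:.1f} -> European output x{factor:.2f}")
```

If the global market merely held steady, European output would need to grow about 2.2 times; if the market doubled by 2030, the same share target would require roughly 4.4 times today's output.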

In practice, Europe’s strategy has involved attracting leading chipmakers to build fabs on European soil (with generous subsidies). For example, Intel is investing up to €30 billion to build advanced fabs in Germany and Ireland (with around €10B+ in subsidies from Germany) nextmsc.com. TSMC (Taiwan) has agreed to build a fab in Germany as well, with government support. These fabs won’t be purely for AI chips, but they will bolster overall capacity and know-how in the region. Europe is also strong in semiconductor equipment (ASML in the Netherlands is the monopoly supplier of EUV lithography machines, for instance) and in research (with institutes like IMEC in Belgium). France and Germany have initiatives to develop indigenous processor IP (like the European Processor Initiative targeting exascale computing), which could spill over to AI. And while Europe lacks many large AI chip companies, it has a number of startups and niche players:

- The UK’s Graphcore (though the UK is now outside the EU) is a prominent AI accelerator startup known for its Intelligence Processing Unit (IPU) architecture. It has raised over $680 million and achieved a valuation of ~$2.8B research.contrary.com, partnering with firms like Dell to offer IPU systems. However, Graphcore has faced challenges gaining widespread adoption against NVIDIA’s ecosystem.

- XMOS (Bristol, UK) produces edge AI chips and was an early innovator in AI inference at low power.

- Hailo (Israel – closely tied to European auto industry) offers AI edge chips for cameras and has raised substantial funding.

- BMW and Mercedes in Germany have done in-house AI chip R&D for autonomous driving, sometimes in collaboration with suppliers.

- Bosch, the German auto supplier, is investing in chip fabs and has developed AI ASICs for radar and camera systems used in cars.

Europe also leverages ARM – the UK-based chip IP designer (majority-owned by Japan’s SoftBank) – whose processor and NPU cores are found in many AI-enabled SoCs worldwide. Although ARM is not an AI chipmaker itself, its influence (and the fact that NVIDIA agreed to buy ARM in 2020 but abandoned the deal in 2022 amid regulatory opposition) plays into Europe’s role in the chip value chain.

Overall, Europe’s current market share in AI accelerators is relatively small (most chips are imported from the U.S. or Asia), but it is focusing on policy and partnerships to grow. European leaders frequently cite the need to be able to build the “brains” that will go into European products – whether it’s electric cars, robotics or cloud infrastructure. The region’s companies are huge customers: for example, European automakers will be major buyers of AI chips for autonomous driving, and firms like SAP and Siemens will incorporate AI hardware into industrial solutions. Thus, Europe’s strategy is partly to leverage this demand to entice chip investments locally.

Other key regions in the AI chip landscape include East Asia (outside of China) and emerging players:

- Taiwan is absolutely pivotal, albeit primarily as the manufacturing base. Taiwan’s TSMC alone fabricates an estimated 90% of the world’s most advanced chips (5nm and below), including virtually all cutting-edge AI accelerators eetimes.eu. This means whether the chip was designed by NVIDIA, Google, or Alibaba, chances are it’s made in Taiwan. This concentration poses supply chain risks (e.g. geopolitical tensions over Taiwan) but so far TSMC has been a reliable linchpin, expanding capacity to meet AI chip demand. Taiwan also has AI chip design talent (e.g. MediaTek is a major mobile chip firm that includes NPUs in its designs, and startup TensorCore is developing AI chips).

- South Korea is a leader in memory (essential for AI systems) and also has Samsung, which both manufactures chips and designs some. Samsung has developed NPUs integrated in its Exynos mobile processors and is researching AI accelerators (including neuromorphic chips). Another Korean startup, Rebellions, builds AI chips for data centers and received government support. South Korea aims to leverage its strength in memory and electronics to not miss the AI hardware wave.

- Japan has deep expertise in certain chip areas (sensors, automotive chips) and companies like Sony and Renesas have integrated AI processing into their products (Sony’s image sensors with AI processing, Renesas’s chips for cars, etc.). Japan’s government is also investing in advanced chip consortia (like Rapidus for 2nm fabs by late 2020s) which, if successful, could eventually produce AI chips domestically. Additionally, Japan remains a top supplier of semiconductor equipment and materials (vital for making any AI chip).

- Israel deserves mention for its outsized role in AI chip startups: Habana (now Intel), Mobileye (Intel), Hailo, NeuroBlade, and more – many innovations originate there, often acquired by U.S. firms.

- India and Canada are also making early moves: India, historically not a chip producer, has announced incentives for semiconductor fabs and could become an assembly/testing hub, while Canada’s Tenstorrent is an example of North American diversity beyond Silicon Valley.

One interesting development is the involvement of the Middle East as investors and end-users of AI hardware. Sovereign funds and tech initiatives in the Middle East (e.g. Saudi Arabia’s AI initiatives, UAE’s G42 group) have started purchasing cutting-edge AI systems in bulk, effectively importing large numbers of NVIDIA GPUs and even partnering with companies like Cerebras. For example, UAE’s G42 was the largest customer of Cerebras in 2023, using its wafer-scale AI computers to build large models nextplatform.com. Saudi Arabia is reportedly building data centers with tens of thousands of GPUs for national AI research. While these countries are not developing accelerator chips themselves, their deep pockets are funding the ecosystem (and even taking equity stakes in AI chip firms, as seen with Abu Dhabi’s investments).

In summary, outside the U.S. and China, other regions are keen not to be left behind in the AI hardware race. Europe is pursuing strategic autonomy via funding and local fabs, Taiwan and South Korea remain indispensable manufacturing hubs and are seeking to climb up the value chain, and smaller players contribute via niche expertise or capital. The AI accelerator market by 2030 will thus be shaped by a complex global tapestry of cooperation and competition – with chips often co-designed in one place, fabricated in another, and used worldwide.

Diverse Use Cases Across Cloud, Edge, and Devices

AI accelerator chips are being deployed across an incredibly diverse range of applications and environments, each with its own requirements. Here we break down some key use cases and how accelerators are fueling them:

- Cloud Data Centers: The epicenter of AI training is in hyperscale cloud and enterprise data centers. Here, racks of accelerators (GPUs/TPUs/ASICs) work in parallel to train large AI models and serve heavy-duty inference. Cloud providers (AWS, Google Cloud, Microsoft Azure, Alibaba Cloud, etc.) offer AI accelerator instances for rent, enabling researchers and companies to spin up thousands of chips on demand. The scale is staggering – top-tier AI training clusters now link tens of thousands of GPUs with high-speed interconnects to create “AI supercomputers.” As noted, GPT-4’s training run reportedly consumed an estimated 25,000 GPUs over months research.contrary.com. These data centers increasingly feature specialized infrastructure (fast networking like InfiniBand, cooling systems for high-power chips, etc.) to accommodate AI workloads. Cloud AI chips focus on maximizing throughput (e.g. matrix operations per second) and memory bandwidth. NVIDIA’s dominance in this arena is being challenged by in-house chips (TPUs, etc.) but as of mid-2025, GPUs still power the majority of cloud AI compute. Given the explosive growth of generative AI usage (search, assistants, content creation), analysts predict a 300%+ increase in data center AI spending over just the next 3 years io-fund.com. Indeed, by 2030, data center AI workloads are expected to be enormous – one scenario estimates 25×10^30 FLOPs of generative AI compute demand globally mckinsey.com. Serving this will require continual advances in accelerator chips and likely more AI-specific servers (with co-packaged accelerators, photonic interconnects, etc. on the horizon).

- Edge Computing & IoT: Not all AI happens in the cloud – there is a major trend towards pushing AI to the edge: on devices like cameras, wearables, appliances, drones, and industrial machines. Edge AI accelerators (often tiny NPUs or DSPs) enable real-time inference with low latency and without a constant internet connection. Use cases include smart cameras doing on-device vision analytics, factory machines with AI for predictive maintenance, and AR/VR headsets with on-board scene understanding. The Internet of Things (IoT) boom means billions of devices generating data; embedding AI chips in them allows immediate processing of that data. This is driven by latency, privacy, and bandwidth advantages. For example, a security camera with an AI chip can detect intruders locally in milliseconds rather than sending video to a cloud server. The market for edge AI chips is set to grow rapidly (more than 40% annually, as noted above) globenewswire.com. Already, smartphones are a major edge AI platform – every modern phone SoC from Apple’s A-series to Qualcomm Snapdragon includes an AI accelerator for tasks like image enhancement, voice recognition, and augmented reality. In fact, consumer electronics accounted for ~34% of AI chip usage in 2024 globenewswire.com largely due to the volume of smartphones with NPUs. On the industrial side, companies like NXP, Infineon, and Analog Devices are integrating AI capabilities into microcontrollers and sensors. Even tiny microcontrollers (MCUs) now sometimes have “TinyML” accelerators to run simple neural nets in wearables or household gadgets. This distributed intelligence is expected to permeate smart homes, cities, and infrastructure. As one report noted, the proliferation of IoT devices generating massive data is fueling demand for AI chips to enable intelligent processing at the device level, for real-time analytics and decision-making on-site nextmsc.com. Edge accelerators prioritize low power consumption (to extend battery life) and often need to be rugged or specialized for their environments (e.g. automotive-grade chips enduring heat/vibration).

- Autonomous Vehicles: Self-driving cars and advanced driver-assistance systems (ADAS) are hungry consumers of AI chips. A single autonomous test vehicle can carry multiple accelerator chips crunching camera feeds, LiDAR data, radar signals, and more to make split-second driving decisions. For instance, Tesla developed a custom FSD (Full Self-Driving) chip to process vision in its cars, and NVIDIA’s DRIVE Orin and its successor, DRIVE Thor (which replaced the cancelled Atlan design), are being adopted by many automakers to power Level 2+ autonomous features. These automotive AI chips are designed for functional safety and high reliability, often featuring redundant processors. They perform tasks like object detection, driver monitoring, path planning – essentially functioning as the “brain” of the vehicle. The automotive AI chip segment is expected to be the fastest-growing, as even mid-range cars incorporate more ADAS (e.g. automatic emergency braking, lane-keeping) which rely on onboard AI. By 2030, full self-driving taxi fleets and trucks could further boost demand. One expert noted that AI chips are pivotal for autonomous driving, interpreting surroundings and making intelligent choices in real time techstrong.ai. Companies like Mobileye (Intel) have shipped tens of millions of EyeQ vision chips in vehicles, and startups like Horizon Robotics in China are offering driving-assist chips domestically. Even beyond cars, autonomous drones, delivery robots, and other self-navigating machines all leverage AI accelerators for perception and control. The unique requirement here is real-time processing with strict safety – an AI mistake can be life-threatening in traffic – so automotive AI chips emphasize deterministic performance and extensive validation.

- Smartphones & Consumer AI: Our pocket devices have quietly become AI powerhouses. Virtually all high-end (and many mid-range) smartphones now contain dedicated AI accelerators. Apple’s Bionic chips feature the Neural Engine, Google’s Tensor chip has a TPU-lite, Samsung and Qualcomm embed NPUs – these handle tasks like computational photography (e.g. Night Mode, which involves AI image enhancement), voice assistants (speech recognition on-device), face identification (using neural nets for FaceID), language translation, and even user behavior predictions to save power. The scale here is huge: billions of phones, tablets, and PCs performing AI inference daily. While individually these NPUs are smaller than data center chips, collectively they represent a significant market. They are usually integrated into system-on-chip (SoC) designs alongside CPU/GPU/ISP, rather than standalone chips. The trend is towards increasing AI performance each generation – e.g. Apple’s Neural Engine went from 600 billion ops/sec to 15.8 trillion ops/sec in a few years. Beyond phones, consumer devices like smart speakers (Amazon Echo with its AZ2 neural processor), smart TVs, and VR headsets (Meta’s devices use Qualcomm XR chips with AI) all leverage on-device AI silicon. The demand is driven by the need for instant responsiveness and privacy (processing personal data like voice locally). As AR glasses and IoT wearables proliferate, expect to see ever more specialized low-power AI chips in consumer tech.

- Robotics & Automation: Robots in warehouses, hospitals, and offices are increasingly using embedded AI chips to navigate and interact with the world. In manufacturing, robotic arms use AI accelerators for visual inspection and precision control. Logistics robots in Amazon warehouses have on-board AI to avoid obstacles and sort packages. Service robots (like hospital delivery bots or restaurant servers) use AI chips for movement and speech interfaces. These scenarios often rely on efficient inference chips that can run vision models and reinforcement learning policies in real time. As one analyst observed, the rapid automation in warehouses is driving adoption of AI chips, which control robots and process sensor data to make decisions techstrong.ai. A related domain is drones – both consumer and industrial UAVs – which use AI silicon for image recognition (e.g. tracking targets) and autonomous flight. Robotics typically requires accelerators that are very power-efficient (to fit in small robots or battery-powered units) but still deliver enough compute for tasks like SLAM (simultaneous localization and mapping) and object recognition. NVIDIA’s Jetson line of modules is one popular solution, essentially packing GPU and CPU into a small form for robots and edge AI. Numerous startups (like Brazil’s Speedbird or US’s Dusty Robotics) embed AI chips to give their machines greater autonomy. With projections of millions of robots in workplaces by 2030, this will be a modest but meaningful slice of the AI chip pie.

- Enterprise AI & HPC: Many large enterprises and research institutions are deploying AI accelerators in their on-premise data centers and high-performance computing (HPC) clusters. Reasons range from data governance (keeping sensitive data in-house) to custom needs (specialized research). Sectors like finance (algorithmic trading, fraud detection), healthcare (medical imaging analysis), and defense (intelligence and simulation) often build private AI infrastructure. These setups use the same class of chips – high-end GPUs or AI ASICs – but packaged into turnkey systems (e.g. NVIDIA DGX servers or Cerebras CS systems) for ease of deployment. The enterprise AI hardware market is growing as AI becomes a core workload alongside traditional IT. Turnkey AI appliances allow enterprises without big AI engineering teams to benefit from accelerated computing. For example, banks might install NVIDIA DGX nodes to run risk models or utilize FPGA-based appliances for low-latency trading algorithms. Enterprise demand also ties into the HPC world, where supercomputers for scientific research are increasingly blending AI accelerators into their architecture. Modern supercomputers (at national labs, etc.) often have GPU partitions for AI-driven simulations and data analysis. By 2030, as AI and HPC converge (AI being used in physical simulations and vice versa), nearly all major HPC systems are expected to incorporate some form of AI acceleration. This cross-pollinates advancements – for instance, the H100 GPU is as much an HPC chip as an AI chip, serving both markets. Thus, enterprise and HPC use cases ensure accelerators penetrate beyond the tech giants, reaching every large organization that can harness AI for competitive advantage or innovation.

In summary, AI accelerators are becoming ubiquitous across computing – from the cloud to the edge, from personal devices to industrial machines. Each use case values different metrics (throughput, latency, power, cost), which is why we see a variety of chip types co-existing (GPUs for flexibility, ASICs for efficiency, FPGAs for adaptability, etc.). This broad adoption across domains is the fundamental engine of the AI chip market’s growth – AI is not a single niche but a horizontal enabling technology, much like electricity or the internet, and specialized silicon is the backbone making it feasible at scale.
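The Neural Engine figures quoted in the smartphone discussion above (600 billion to 15.8 trillion ops/sec) can be turned into a growth factor and an implied annual rate. The sketch below is illustrative only: the four-year span is an assumption standing in for "a few years", and the function names are hypothetical.

```python
def growth_factor(start_ops: float, end_ops: float) -> float:
    """Total multiple between two throughput figures."""
    return end_ops / start_ops


def implied_annual_growth(start_ops: float, end_ops: float, years: float) -> float:
    """Compound annual growth rate implied by the two figures."""
    return (end_ops / start_ops) ** (1.0 / years) - 1.0


START_OPS = 600e9    # 600 billion ops/sec, as quoted in the text
END_OPS = 15.8e12    # 15.8 trillion ops/sec, as quoted in the text
YEARS = 4            # ASSUMPTION: "a few years" taken as four

factor = growth_factor(START_OPS, END_OPS)
annual = implied_annual_growth(START_OPS, END_OPS, YEARS)

print(f"Total gain: {factor:.1f}x")
print(f"Implied annual growth over {YEARS} years: {annual:.0%}")
```

Under that assumption the total gain is roughly 26×, which works out to more than a doubling of on-device AI throughput each year. A different time span changes the annual rate but not the total multiple.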

Key Market Drivers

Several powerful drivers are propelling the AI accelerator chip market’s rapid growth:

- Generative AI & Advanced ML Demand: The hype around generative AI (large language models like ChatGPT, image generators, etc.) is translating into real investments in AI hardware. Organizations are racing to train ever-larger models to stay competitive, which directly drives demand for more GPUs and AI chips in data centers. Analysts call this the next “golden wave” of tech investment, as AI capabilities unlock new products and services fingerlakes1.com. Unlike some past tech cycles, AI has proven transformative across industries – from content creation to customer service – so companies are budgeting accordingly for AI infrastructure. NVIDIA’s CEO noted that every sector is now figuring out how to apply AI, leading to a virtuous cycle of model innovation and hardware spending. Generative AI inference (serving AI responses to users) also creates ongoing demand for accelerators, since these models must run on GPUs/ASICs to deliver results with low latency.

- Broad Industry Adoption of AI: Beyond the headline-grabbing chatbots, AI is being embedded in workflows in healthcare, finance, manufacturing, retail, and more. This widespread adoption boosts chip demand. For example, hospitals use AI chips for diagnostic imaging (AI-driven MRI analysis), farms use them in drones for crop monitoring, and retailers deploy AI for supply chain optimization. As the NextMSC report highlighted, sectors from automotive to finance are integrating AI – autonomous driving, fraud detection, personalized retail – all of which “boosts demand for AI chips” nextmsc.com. In many cases, AI enables things previously not possible or economical, creating new usage (e.g. predictive maintenance sensors in oil rigs now use on-site AI accelerators to detect anomalies). The digitization of industries acts as a tailwind: more digital data -> more AI analysis -> more need for AI-optimized hardware.

- Edge AI & IoT Growth: The surge of IoT devices and the need for real-time processing at the edge is a significant driver. Billions of connected sensors and smart devices are being deployed, and many will include some form of AI acceleration to handle tasks locally. As noted, spending on the IoT ecosystem is growing strongly, projected to top $1 trillion by 2026 nextmsc.com, which correlates with more investment in edge AI chips. Applications like smart cities (traffic cameras with AI), smart factories (AI monitoring equipment), and smart homes (intelligent appliances) all require “efficient AI chips for on-device analytics” nextmsc.com. The push for privacy (keeping data on device) and latency (immediate response) means edge AI will continue to expand, driving high volumes of accelerator shipments (albeit smaller, cheaper per unit than cloud chips).

- Energy Efficiency Imperative: As AI workloads scale, power consumption has become a critical concern. Data centers running AI can draw immense electricity – one analysis warned that AI data centers could consume >10% of global power by 2030 if trends continue mckinsey.com. This creates a strong incentive for chips that deliver more AI operations per watt, making energy-efficient AI silicon a market driver in its own right. Specialized accelerators can be far more power-efficient than CPUs for AI tasks, and each new process generation (5nm to 3nm, etc.) brings efficiency gains. Companies are investing in new architectures (e.g. analog computing, optical AI chips) aiming for orders-of-magnitude lower power for neural network processing. Governments and cloud providers alike are keen to reduce the carbon and cost footprint of AI – and upgrading to the latest efficient accelerators is a key strategy. The SNS Insider report explicitly notes “energy-efficient chips” as a growth driver alongside generative AI and edge computing globenewswire.com. Going forward, energy efficiency is not just a cost matter but also one of sustainability and regulatory compliance, possibly spurring adoption of newer chips (with better performance per watt) to replace older, power-hungry ones.

- Government Policies & Investments: Geopolitical and economic factors are strongly catalyzing the AI chip market. Government funding programs like the $52 billion U.S. CHIPS Act and Europe’s €43B Chips Act are pumping money into semiconductor R&D and manufacturing nextmsc.com eetimes.eu. While these are broad, AI accelerators stand to benefit significantly as a high-growth segment that aligns with national priorities in AI leadership. For instance, the U.S. CHIPS Act specifically includes incentives for foundries that will fabricate leading-edge logic chips used in AI, and research funding for AI chip design. Likewise, China’s government has made semiconductors a top priority in its five-year plans, reportedly pouring tens of billions of dollars of subsidies into the domestic AI chip sector (grants, equity investment, cheap loans to AI chip startups). This public-sector push is accelerating innovation and capacity – new fabs, new startups, and new university programs in chip design have all emerged due to these incentives. Additionally, the defense sector’s interest in AI (e.g. for military autonomous systems) is resulting in government-driven demand for advanced AI chips and could lead to more public R&D in specialized processors.

- Technological Advances & New Use Cases: On the supply side, continuous advances in semiconductor technology are unlocking better AI chips, which in turn open new use cases. For example, the transition to 3nm and upcoming 2nm processes is allowing more transistors to be packed in accelerators, enabling on-chip memory, bigger matrix cores, etc., which improve AI performance. TSMC’s 2nm is slated for mass production in 2025, promising chips with higher density and efficiency nextmsc.com – this will likely yield a next wave of AI chips with unprecedented capability. Such improved chips could make things like real-time AR glasses or autonomous drones far more viable (where earlier chips were too weak or too power-hungry). In essence, better chips create new markets by making AI viable in more contexts. For instance, if a breakthrough makes AI accelerators 10× more efficient, we might see them in every appliance and vehicle, not just high-end ones. The virtuous cycle of Moore’s Law (though slowing, still alive via chiplet architectures, 3D stacking, etc.) and AI algorithm progress continues to drive the market forward. As a concrete example, the introduction of HBM (High Bandwidth Memory) in GPU-based accelerators has vastly increased memory speeds, allowing larger AI models to be processed, which then spurs deployment of those chips in data centers hungry to train trillion-parameter models. Thus, R&D achievements – from new circuit designs to novel chip materials – are fundamental drivers enabling the market’s growth trajectory.

- AI as Strategic Priority for Enterprises: Finally, at a strategic level, companies across the board now recognize that adopting AI is key to staying competitive. This “AI-first” mindset translates into budget allocation for AI infrastructure. CIOs and CTOs are advocating for AI platforms in their organizations, often with board-level support, meaning that even traditionally conservative industries are investing in AI hardware. Cloud providers have reported that AI workloads are a top driver of new cloud spend – enterprise cloud customers are explicitly seeking GPU/TPU resources for their projects. This broad-based demand from enterprise and institutional customers (banks, universities, medicine, etc.) is a driver that didn’t fully exist a decade ago when AI was niche. Today, if a Fortune 500 company wants to deploy an AI-enhanced service, they might purchase a cluster of accelerators or subscribe to cloud instances – either way generating revenue in the AI chip ecosystem. The momentum of AI in business (as evidenced by over 70% of organizations adopting some AI by 2024, up from 20% in 2017 research.contrary.com) ensures a strong underlying demand signal for accelerator vendors.

These drivers reinforce each other – e.g. government support accelerates tech advances, which enable new use cases, which drive more enterprise adoption, and so on. Together they paint a picture of robust, sustained growth conditions for the AI accelerator chip market through 2030 and beyond.

Challenges and Constraints

Despite the optimism, the AI accelerator industry faces a number of challenges and headwinds that could temper growth or shape the competitive landscape:

- Chip Supply Constraints: One immediate challenge is that demand is outstripping supply for cutting-edge AI chips. The global semiconductor shortage of 2021–2022 revealed how limited fab capacity, especially at advanced nodes, can bottleneck industries. Even in 2024–2025, companies have reported long lead times for GPUs. Foundries like TSMC are operating at capacity for 5nm/4nm wafers, and expanding capacity takes years and tens of billions in investment. As a result, AI companies sometimes struggle to get enough chips to meet internal or customer needs. The “global chip deficit” has created long waiting lists and supply chain difficulties, with foundries prioritizing other mass-market products over some AI chips nextmsc.com. This dynamic could slow AI projects or drive up prices. Additionally, the reliance on a few foundries (TSMC, Samsung) means any disruption (political conflict, natural disaster) could severely impact supply. Industry analysts warn that by 2030 there may be a “logic wafer supply gap” in leading nodes driven by explosive AI demand – potentially a shortfall of millions of wafers unless more fabs are built mckinsey.com. Building sufficient new fabs (each easily $10B+ and 2–3 years to construct) is both a race and a geopolitical priority (hence the Chips Acts). Until supply and demand balance, limited availability of the latest chips could throttle AI deployment in some cases.

- High R&D and Production Costs: Developing state-of-the-art AI chips is incredibly expensive and complex. The design cost for a 5nm or 3nm ASIC can run into hundreds of millions of dollars, once you factor in engineering talent, EDA tools, prototyping, and software stack development. For GPUs and other large chips, the expense is even greater (NVIDIA reportedly spends billions on each new GPU generation’s R&D and tape-out). This high barrier to entry means only firms with deep pockets (or very strong niche focus) can compete at the bleeding edge. Startups must either raise huge amounts of capital or find a less contested niche. Even then, turning a prototype into a product requires navigating manufacturing (mask sets alone cost tens of millions) and then marketing against established players. On the manufacturing side, the capital expenditure to add capacity is enormous, as noted. So a challenge is: can supply keep up without driving costs (and thus chip prices) too high? The risk is that rising fab and design costs could make each new generation of AI accelerators pricier, potentially slowing adoption. However, historically volume growth has helped offset this, and major players enjoy economies of scale. Still, the financial risk for any misstep in design (a failed chip project) is high, which could deter innovation at the margins.

- Competitive Moats – Software Ecosystem Lock-in: One less tangible but critical challenge for new entrants is the software ecosystem. NVIDIA’s dominance is not just hardware; it’s also the CUDA programming platform and a vast developer community whose code is optimized for its GPUs. Similarly, Google’s TPUs tie into TensorFlow and Google’s cloud ecosystem. For a new AI chip to gain traction, it must offer not only raw performance but also easy integration into AI workflows (support for frameworks like PyTorch, tools for migration, etc.). This is a high hurdle – many promising chips struggled because the AI software stack was not mature or developers were unwilling to rewrite code. It creates a kind of incumbency advantage: even if a startup’s chip is, say, 2× faster, if it takes substantial effort to adopt, customers might stick with NVIDIA. Graphcore’s struggles illustrate this; despite innovative hardware, building the compiler and convincing developers to port models proved challenging research.contrary.com. This “network effect” in software is a barrier to entry that can stymie competition and is a challenge the industry must address (through standards or middleware) if a diverse set of accelerators is to flourish. Some moves, like ML frameworks becoming more hardware-agnostic or the use of open-source compilers and open programming models (like OpenAI’s Triton or oneAPI), could help ease this, but it remains a friction point.

- Power and Thermal Limits: As AI chips pack more compute, power density and heat dissipation become limiting factors. A single top-end GPU can now draw roughly 400–700 watts under load; servers with 8 such GPUs can consume well over 3 kW. Data center operators face challenges in power delivery and cooling – many are reaching the limits of what air cooling can do and are shifting to liquid cooling for racks full of AI accelerators. There’s also an upper bound where chips simply can’t keep growing TDP without novel cooling (like immersion cooling) or more efficient architectures. While efficiency per operation is improving, the sheer scale of models is pushing total energy use up. This raises concerns about sustainability and operating cost. If AI compute demands were to continue doubling every 1–2 years, we might hit scenarios where current data center infrastructure can’t handle the power required without significant upgrades. Thus, a challenge is innovating to keep performance growing within reasonable power envelopes. Techniques like chiplet architectures (spreading compute across multiple dies), better materials, and new cooling techniques are all being pursued to tackle this. Nonetheless, power is a fundamental constraint that could make certain deployments (like AI at the extreme edge, on battery) difficult until breakthroughs happen.

- Geopolitical and Trade Risks: The AI chip arena is entangled with geopolitical competition, especially between the U.S. and China. Trade restrictions (export controls) create uncertainty and market bifurcation. For instance, U.S. rules in 2022 banned the export of certain high-performance AI chips to China, which cut off a large market for those chips and forced NVIDIA to create reduced versions. There is a risk of further decoupling: if China cannot get Western chips, it will accelerate its own alternatives, potentially leading to divergent ecosystems (different software standards, etc.). Companies must navigate these politics carefully – U.S. firms don’t want to lose the China market but have to comply with regulations. Conversely, Chinese companies face risk if reliant on Western IP or fabs – e.g. a Chinese AI chipmaker using TSMC could be stymied by export license issues for EDA tools or equipment. As one industry expert noted, new export regulations on AI chips (especially to China) “will impact the cost and availability of AI chips in the market.” techstrong.ai. The regulatory environment is in flux, and that uncertainty is a challenge. Additionally, political tensions (e.g. around Taiwan) cast a shadow – a conflict or sanctions could severely disrupt the supply chain. This has driven efforts at regional self-reliance (U.S. onshoring some chip production, China doing the same, Europe aiming for capacity) nextmsc.com eetimes.eu. In the near term, companies must often redesign or re-spec products to comply with rules (like NVIDIA’s A800 for China), which incurs engineering effort and possibly performance compromises.

- Intellectual Property and Patent Disputes: As the AI chip space heats up, so do IP battles. Established semiconductor companies hold extensive patent portfolios on GPU architectures, memory access methods, etc. New entrants risk infringing on these, whether knowingly or inadvertently, which can lead to lawsuits. Patent litigation can be a major drag – it’s costly, time-consuming, and can delay product rollouts or force design changes. For example, one report describes NVIDIA suing Intel’s Habana (and previously other rivals) for alleged IP infringement, resulting in a settlement with licensing fees techstrong.ai. There have also been cases of startups suing each other, or of talent poaching leading to trade secret disputes. This legal minefield is a challenge especially for startups that may not have war chests for protracted litigation. IP issues can also deter investors or acquirers (nobody wants to buy a company that will be tied up in court). Moreover, overlapping IP can complicate partnerships – e.g. licensing IP cores vs. developing in-house. The cautionary note from analysts: “Growing IP and patent disputes are obstructing development and distribution of AI chips and could constrain market growth” techstrong.ai. Navigating patent thickets (or cross-licensing deals) will be an ongoing chore for industry players.

- Market Consolidation and Business Risks: With dozens of startups entering the fray, not all will survive – we can expect consolidation. Already we’ve seen some casualties (Wave Computing, an early AI chip unicorn, went bankrupt; Graphcore had to cut staff amid delays; other small players pivoted away). There’s a risk of a boom-bust cycle: huge expectations driving many entrants, followed by a shakeout where only a few capture most of the market. If a major player (like NVIDIA) continues to outperform others by a large margin, customers may not adopt alternative solutions, causing funding to dry up for competitors. Additionally, big customers (clouds) might strategically support multiple suppliers to avoid one vendor lock-in, but if alternatives lag too much, the market could tip heavily to one or two winners. For startups and smaller companies, one challenge is demonstrating not just a good chip, but longevity (roadmap, support, scaling manufacturing) to convince buyers to take a chance on them. Many enterprises will default to the safe choice (NVIDIA or other established vendor) unless a new chip shows, say, 10× better performance/cost for their workload – an extremely high bar. This means newcomers must either target niche applications where they can be clearly superior, or partner with larger companies to gain market access (e.g. via OEM deals). The next few years will likely separate those with truly differentiated technology or execution from those who can’t keep up, leading to acquisitions or exits. Such consolidation could reduce competition, ironically possibly slowing innovation or keeping prices higher.

- Regulatory Compliance (AI-specific): While not a direct hardware issue, emerging AI regulations (like the EU’s AI Act) could indirectly affect the hardware market. For example, if certain AI applications are regulated or require explainability, there may be new requirements on chips to support audit logs or safe operational modes. Data privacy laws might encourage on-device processing (benefiting edge chips) but also limit centralized data usage (potentially dampening some cloud AI growth). Environmental regulations might push data centers to be more energy-efficient or cap power usage, which then forces adoption of more efficient accelerators (a boon for newer chips, a bane for legacy hardware). Export controls, covered above under geopolitical risks, are essentially regulatory measures shaping who can sell what to whom. Another angle is antitrust and competition law: regulators could scrutinize whether one company (like NVIDIA) becomes too dominant in AI chips, perhaps influencing mergers (as with NVIDIA’s attempted Arm acquisition, which was abandoned in 2022 amid regulatory opposition) or practices (ensuring fair access to IP). While speculative, these factors mean the AI chip industry doesn’t operate in a vacuum and must adapt to a changing landscape of laws and norms around AI deployment.

In short, while the AI accelerator market is on a strong growth trajectory, it must overcome significant challenges – from physical limitations and supply issues to competitive and regulatory hurdles. How the industry addresses these will influence which players succeed and how smoothly AI technology can continue scaling. Historically, the tech industry has navigated similar challenges (e.g. Moore’s Law slowdowns, trade disputes), often with innovation and collaboration. We are likely to see efforts like new fabrication partnerships, standards to simplify AI software portability, and continued government-industry collaboration to mitigate these constraints.
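The power constraint discussed above lends itself to a quick back-of-the-envelope check. The sketch below takes 400 W per GPU (a figure within the range quoted for top-end GPUs) and eight GPUs per server; the 30% overhead for CPUs, memory, fans, and power conversion and the 15 kW rack budget are assumptions chosen only for illustration, and the function names are hypothetical.

```python
def server_power_watts(gpus: int, watts_per_gpu: float, overhead_fraction: float) -> float:
    """GPU power plus a fractional allowance for the rest of the server."""
    gpu_power = gpus * watts_per_gpu
    return gpu_power * (1.0 + overhead_fraction)


def servers_per_rack(rack_budget_watts: float, server_watts: float) -> int:
    """How many whole servers fit under a rack's power budget."""
    return int(rack_budget_watts // server_watts)


GPUS_PER_SERVER = 8          # as in the text
WATTS_PER_GPU = 400.0        # within the range quoted in the text
OVERHEAD_FRACTION = 0.30     # ASSUMPTION: non-GPU components add 30%
RACK_BUDGET_WATTS = 15_000.0 # ASSUMPTION: hypothetical rack power limit

gpu_only_watts = GPUS_PER_SERVER * WATTS_PER_GPU
total_watts = server_power_watts(GPUS_PER_SERVER, WATTS_PER_GPU, OVERHEAD_FRACTION)
fit = servers_per_rack(RACK_BUDGET_WATTS, total_watts)

print(f"GPUs alone: {gpu_only_watts / 1000:.1f} kW per server")
print(f"With overhead: {total_watts / 1000:.2f} kW per server")
print(f"Servers per {RACK_BUDGET_WATTS / 1000:.0f} kW rack: {fit}")
```

The GPUs alone account for 3.2 kW, consistent with the ">3 kW" figure in the text. Under the assumed rack budget only a handful of such servers fit per rack, which is why power delivery and cooling, rather than floor space, tend to become the limiting factor.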

Technological Trends Shaping the Future

The rapid evolution in AI accelerator chips is driven by key technological trends and innovations that are shaping how chips are designed, manufactured, and utilized. Here are some of the most important trends from 2025 toward 2030:

- Advances in Semiconductor Process Nodes: The march down Moore’s Law continues (albeit with greater difficulty). By 2025, the industry will be transitioning from 5nm/4nm nodes to 3nm and even 2nm processes for leading-edge chips. TSMC’s 2nm process is slated to start production in 2025, using novel nanosheet (GAAFET) transistors for better energy efficiency nextmsc.com. For AI accelerators, moving to smaller transistors means more cores and memory can be packed on a chip, or alternatively the same compute power in a smaller, more power-efficient form. For example, a 2nm-based AI chip in 2026–2027 could potentially house hundreds of billions of transistors (trillions only in multi-die or wafer-scale designs), enabling larger on-chip caches or more matrix multiplication units specifically for AI. These node shrinks will deliver improvements in performance per watt, which is crucial as AI compute needs skyrocket. However, they also raise design complexity and cost. We may see fewer design starts at each new node, concentrated on high-volume or high-value chips (GPUs, datacenter ASICs), while edge/consumer AI chips might lag a node or two behind if that offers a better cost trade-off. Regardless, the introduction of 3nm and 2nm chips around 2025–2027 will likely be a tipping point for AI – enabling, for instance, AI inference chips in phones that rival a 2020-era GPU in capability, or training chips that bring day-long tasks down to hours. Beyond 2nm, research is ongoing into post-silicon technologies (like carbon nanotube FETs, or even quantum accelerators for AI), but those are not expected to be mainstream by 2030. Instead, we’ll see 3D scaling (moving upwards since 2D scaling slows) become more prominent.

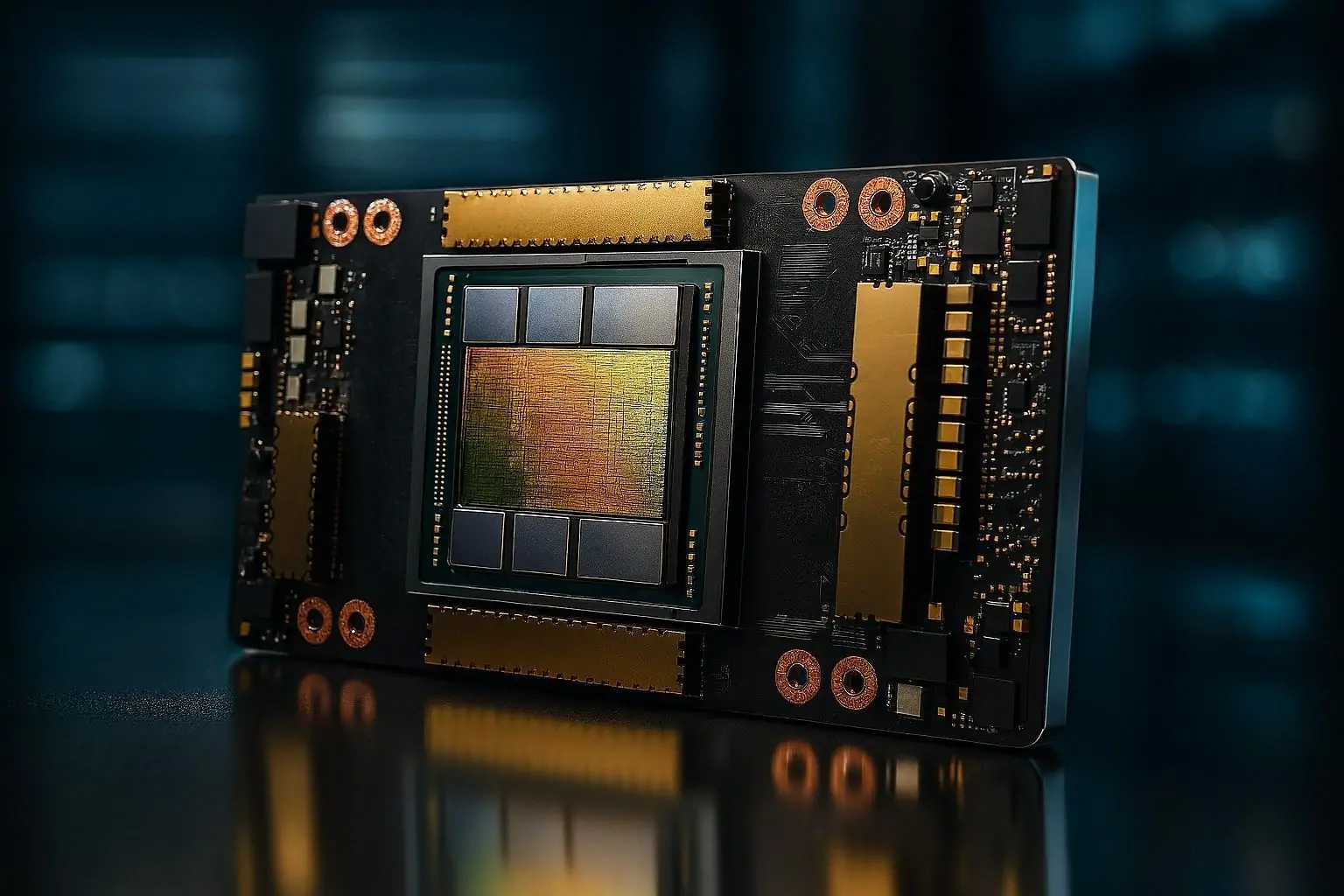

- Chiplet and Heterogeneous Integration: One major trend is breaking large chips into smaller chiplets and then packaging them together in advanced modules. This is already seen in products like AMD’s MI300, which combines multiple GPU and CPU chiplets, or Intel’s Ponte Vecchio GPU, which used Foveros/EMIB to integrate many tiles. Chiplets allow designers to mix and match technologies – e.g. use a leading-edge node for cores, but a cheaper node for I/O or analog circuits – thus optimizing cost and yield. For AI accelerators, this is transformative: instead of one monolithic die (which faces yield issues as it gets bigger), chiplets let you scale out by adding more “tiles.” For instance, future accelerators might have dozens of small AI-core chiplets on an interposer, acting like one giant chip. High-bandwidth die-to-die links (proprietary interfaces or emerging standards such as UCIe) can connect the chiplets at almost on-die speeds. We also see heterogeneous integration in packaging different types of chips: memory and logic. The latest GPUs often come with HBM (High-Bandwidth Memory) stacks co-packaged on the same substrate, drastically increasing memory bandwidth (multiple TB/s) available to AI processors globenewswire.com. Looking ahead, companies are developing even tighter integration like 3D stacking of logic on logic (placing some smaller compute die atop a larger base die) and logic on memory (processing-in-memory concepts). By 2030, it’s plausible we’ll have AI chips where the SRAM or DRAM is layered directly above compute units, shortening data paths – this could mitigate the memory bottleneck for big AI models. In short, advanced packaging is becoming as important as transistor shrink for performance gains, enabling “chips” that are really modular assemblies of specialized pieces. This modularity also fosters customization – e.g. a cloud provider could commission a chiplet-based design tailored to its needs (some cores optimized for transformers, some for recommendation models, etc.).
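The yield argument for chiplets can be made concrete with the simplified Poisson yield model, in which the probability that a die has no killer defect is exp(-area × defect density). The sketch below is a rough illustration under stated assumptions, not a foundry cost model: the 8 cm² die, the 0.1 defects/cm² density, and the four-way split are hypothetical, and it ignores packaging yield, die-to-die interface area, and test cost.

```python
import math


def poisson_yield(area_cm2: float, defects_per_cm2: float) -> float:
    """Fraction of dies with zero defects under the Poisson yield model."""
    return math.exp(-area_cm2 * defects_per_cm2)


def silicon_per_good_product(total_area_cm2: float, num_dies: int,
                             defects_per_cm2: float) -> float:
    """Wafer area consumed per working product when the logic is split into
    num_dies equal dies and only known-good dies are assembled."""
    die_area = total_area_cm2 / num_dies
    good_fraction = poisson_yield(die_area, defects_per_cm2)
    return num_dies * die_area / good_fraction


TOTAL_AREA_CM2 = 8.0   # ASSUMPTION: a very large accelerator die
DEFECT_DENSITY = 0.1   # ASSUMPTION: defects per cm^2
NUM_CHIPLETS = 4       # ASSUMPTION: four equal chiplets

mono_yield = poisson_yield(TOTAL_AREA_CM2, DEFECT_DENSITY)
chiplet_yield = poisson_yield(TOTAL_AREA_CM2 / NUM_CHIPLETS, DEFECT_DENSITY)
mono_area = silicon_per_good_product(TOTAL_AREA_CM2, 1, DEFECT_DENSITY)
chiplet_area = silicon_per_good_product(TOTAL_AREA_CM2, NUM_CHIPLETS, DEFECT_DENSITY)

print(f"Monolithic die yield: {mono_yield:.0%}")
print(f"Per-chiplet yield:    {chiplet_yield:.0%}")
print(f"Silicon per good product: {mono_area:.1f} cm^2 vs {chiplet_area:.1f} cm^2")
```

With these assumed numbers, fewer than half of the monolithic dies are defect-free, while most of the smaller chiplets are, so the chiplet design consumes noticeably less wafer area per working product. Real designs give some of that advantage back to packaging and interconnect overhead.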

- Specialized Architectures for AI: Early AI accelerators took existing GPU or DSP designs and repurposed them. Now, we’re seeing architectures built from the ground up for AI. This includes Tensor Cores (NVIDIA’s matrix units), which massively speed up matrix multiplications, systolic arrays (used in Google TPUs) that efficiently pipe data for dense linear algebra, and novel dataflow architectures (like SambaNova or Graphcore’s approach) that aim to minimize data movement. As AI algorithms diversify, we might see more domain-specific accelerators: for example, chips optimized for transformer models (which could handle attention mechanisms more efficiently), or ones for graph neural networks (with sparse computation handling). Indeed, some startups are exploring reconfigurable architectures that can adapt to the model type. Neuromorphic chips (inspired by brains, spiking neural nets) are another avenue, promising ultra low-power pattern recognition, though they remain mostly research with few commercial deployments yet. McKinsey predicts that by 2030 AI ASICs will handle the majority of specialized inference workloads mckinsey.com, because these ASICs can be finely tuned for their task in a way general GPUs cannot. GPUs themselves are evolving to be more AI-focused – for instance, newer GPUs devote more silicon to AI-specific cores, and vendors are exploring more reconfigurable logic for flexibility. Another trend is “fusion” architectures that combine elements: IBM’s AIU chip, for example, is an AI core that can sit alongside CPU cores on a system-on-chip, indicating future CPUs might have robust AI coprocessors (we see hints of this in Apple’s M-series chips and upcoming Intel Meteor Lake with integrated NPUs). The bottom line: architecture innovation is rampant, and we’re moving beyond one-size-fits-all. The market will likely stratify into training-optimized chips, inference-optimized chips (with subcategories for cloud vs edge), and adaptive chips for research that can handle emerging model types.
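To illustrate how a systolic array "pipes" data for dense linear algebra, the sketch below simulates a small output-stationary array in plain Python. It is a teaching model under simplifying assumptions, not a description of any vendor's hardware: each processing element (PE) holds one accumulator, operands from A flow left to right, operands from B flow top to bottom, and inputs are skewed so that matching operands meet in the right PE on the right cycle. The function name is hypothetical.

```python
def systolic_matmul(a, b):
    """Multiply a (n x k) by b (k x m) on a simulated n x m systolic array.

    Returns the product and the number of cycles the array ran.
    """
    n, k_dim, m = len(a), len(a[0]), len(b[0])
    acc = [[0] * m for _ in range(n)]    # one accumulator per PE
    a_reg = [[0] * m for _ in range(n)]  # A operand currently held by each PE
    b_reg = [[0] * m for _ in range(n)]  # B operand currently held by each PE
    cycles = n + m + k_dim - 2           # time for the last operands to reach the far corner

    for t in range(cycles):
        # Shift A operands one PE to the right (far edge first, so nothing is overwritten).
        for i in range(n):
            for j in range(m - 1, 0, -1):
                a_reg[i][j] = a_reg[i][j - 1]
        # Shift B operands one PE down.
        for j in range(m):
            for i in range(n - 1, 0, -1):
                b_reg[i][j] = b_reg[i - 1][j]
        # Inject new operands at the edges; row i and column j are delayed by i and j cycles.
        for i in range(n):
            k = t - i
            a_reg[i][0] = a[i][k] if 0 <= k < k_dim else 0
        for j in range(m):
            k = t - j
            b_reg[0][j] = b[k][j] if 0 <= k < k_dim else 0
        # Every PE performs one multiply-accumulate per cycle.
        for i in range(n):
            for j in range(m):
                acc[i][j] += a_reg[i][j] * b_reg[i][j]

    return acc, cycles


product, cycles = systolic_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]])
print(product, "in", cycles, "cycles")
```

Each operand is fetched from memory once and then reused as it passes from PE to PE, which is the property that makes this layout attractive for matrix-heavy neural network workloads.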

- Memory and Interconnect Innovations: AI workloads are famously memory- and bandwidth-hungry. Training a large model can require reading terabytes of data and shuffling huge parameter matrices, so innovation in memory technology is crucial. Apart from the HBM mentioned earlier, there’s work on new memory types like MRAM or ReRAM that could be integrated on chip to hold weights closer to compute. Some startups (e.g. Mythic) tried analog in-memory computing, storing weights as analog values in flash cells to achieve massive parallelism, though with mixed success. Still, as we approach the limits of DDR and standard DRAM scaling, we might see new AI-centric memory hierarchies: possibly larger on-chip caches, or multi-level memory that pairs HBM with emerging non-volatile memory for overflow. On the interconnect side, connecting thousands of accelerators efficiently is a challenge. Technologies like NVIDIA’s NVLink and AMD’s Infinity Fabric allow direct GPU-to-GPU communication at high speeds, enabling big model training across many chips. Future incarnations might use optical interconnects (Facebook and others have research on optical networking for AI clusters) to provide higher bandwidth and lower latency across racks of accelerators. By 2030, disaggregated architectures could appear, in which memory pools and compute pools are separate but joined by ultra-fast links, giving accelerators access to a vast memory space. This could be enabled by technologies like CXL (Compute Express Link), which allows CPUs and accelerators to share memory. The goal of all these trends is to tackle the “memory wall” and “communication bottleneck” in AI. Expect to see specs like 400 Gbit/s network interfaces on accelerators, on-package photonic links, and creative memory solutions in cutting-edge AI hardware.
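
One common way to reason about the “memory wall” described above is the roofline model, in which attainable throughput is the lesser of peak compute and memory bandwidth multiplied by arithmetic intensity (FLOPs per byte moved). The sketch below is illustrative only: the function names are hypothetical, and the peak (1,000 TFLOP/s) and bandwidth (3 TB/s) figures are assumed round numbers, not the specifications of any particular chip.

```python
def matmul_intensity(n, bytes_per_elem=2):
    """FLOPs per byte for an n x n matrix multiply, assuming each of the
    three matrices crosses the memory interface exactly once."""
    flops = 2 * n**3                          # one multiply + one add per term
    bytes_moved = 3 * n * n * bytes_per_elem  # two inputs and one output
    return flops / bytes_moved


def attainable_tflops(peak_tflops, bandwidth_tbps, intensity):
    """Roofline bound: limited by compute or by memory traffic."""
    return min(peak_tflops, bandwidth_tbps * intensity)


PEAK_TFLOPS = 1000.0   # assumed peak compute, for illustration
BANDWIDTH_TBPS = 3.0   # assumed memory bandwidth in TB/s, for illustration

for n in (96, 3072):
    ai = matmul_intensity(n)
    bound = attainable_tflops(PEAK_TFLOPS, BANDWIDTH_TBPS, ai)
    kind = "memory-bound" if bound < PEAK_TFLOPS else "compute-bound"
    print(f"n={n}: {ai:.0f} FLOP/byte -> {bound:.0f} TFLOP/s ({kind})")
```

Under these assumed numbers the small multiply reaches under a tenth of peak while the large one is compute-bound, which is the arithmetic behind the push for more bandwidth and for keeping weights closer to compute.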

- AI Chip Design Assisted by AI: Interestingly, AI itself is helping design the next generation of chips. EDA tool makers and big firms are incorporating machine learning to optimize chip design, from floorplanning to verification. Google, for example, has used reinforcement learning to optimize TPU floorplans, and NVIDIA has applied AI to chip layout tasks. Deloitte Insights noted that key semiconductor companies spent ~$300M on AI tools for chip design in 2023, projected to rise to $500M by 2026 nextmsc.com. These tools can potentially cut development time and improve performance by finding non-intuitive optimizations. So there is a bit of a feedback loop: AI chips enable better AI, which is then used to design better chips. By the late 2020s, using AI in the design process will likely be routine, with perhaps entire blocks auto-generated for given specs. This could lower entry barriers somewhat or at least accelerate the iteration cycle. It might also allow more customization: an AI system could, for example, automatically generate an accelerator tailored to a specific company’s workload. If design costs can be reduced via AI, we could see a proliferation of semi-custom chips (perhaps using open hardware like RISC-V for the basics, then AI-generated accelerators for the secret sauce). This trend is nascent but promises to ease one of the pain points of chip development: the lengthy, human-intensive design phase.
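
As a quick check on the Deloitte figures quoted above, the growth rate implied by spending rising from roughly $300M in 2023 to $500M in 2026 can be worked out directly. This is a back-of-the-envelope sketch; the helper name `implied_cagr` is hypothetical, and the three-year span is taken from the two dates in the text.

```python
def implied_cagr(start_value, end_value, years):
    """Compound annual growth rate implied by two endpoints."""
    return (end_value / start_value) ** (1 / years) - 1


# ~$300M in 2023 rising to ~$500M in 2026 (figures as quoted in the text)
rate = implied_cagr(300, 500, 2026 - 2023)
print(f"Implied CAGR: {rate:.1%}")
```

The result is a little under 19% per year: brisk growth, though well below the growth rates quoted for the accelerator market itself.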

- Focus on Security and Reliability: As AI chips become widespread (especially in critical applications like cars, healthcare, national security), there’s growing emphasis on their security and reliability features. This means making the hardware robust against hacking (e.g. adding encryption engines, secure boot, and tamper detection to accelerators) and against functional failures (in automotive especially, chips need to meet strict safety standards like ISO 26262, with redundancy built in). Future AI accelerators might incorporate more on-chip monitoring, error correction, and even explainability aids (for example, logging decisions for later auditing in high-stakes use). Some research is looking at making AI hardware that can prove certain behaviors (important for trust in, say, military or medical AI devices). Resilience is also key: a bit flip caused by a cosmic ray can be catastrophic if it makes an AI system go haywire, so ECC memory and fault-tolerant design from the HPC world are coming into AI chips. By 2030, if AI systems are integrated into, say, railway control or surgical robots, regulators will demand strong assurances from the hardware up. This could favor players who invest in safety-critical design (Mobileye’s EyeQ is an example that gained trust by focusing on safe computing for ADAS).
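
The error-correction idea mentioned above can be illustrated with the classic Hamming(7,4) code, which protects 4 data bits with 3 parity bits and can repair any single flipped bit. This is a teaching sketch only: production ECC memory typically uses wider codes that also detect double-bit errors, and the function names here are hypothetical.

```python
DATA_POSITIONS = (3, 5, 6, 7)  # 1-indexed; positions 1, 2, 4 hold parity


def syndrome(code):
    """XOR of the 1-indexed positions of all set bits; 0 for a valid word."""
    s = 0
    for pos, bit in enumerate(code, start=1):
        if bit:
            s ^= pos
    return s


def encode(data):
    """Encode 4 data bits into a 7-bit Hamming codeword."""
    code = [0] * 7
    for pos, bit in zip(DATA_POSITIONS, data):
        code[pos - 1] = bit
    s = syndrome(code)
    for p in (1, 2, 4):  # set parity bits so the syndrome becomes 0
        code[p - 1] = 1 if s & p else 0
    return code


def correct_and_decode(code):
    """Fix at most one flipped bit, then return the 4 data bits."""
    fixed = list(code)
    s = syndrome(fixed)
    if s:  # a non-zero syndrome is the position of the flipped bit
        fixed[s - 1] ^= 1
    return [fixed[pos - 1] for pos in DATA_POSITIONS]


word = encode([1, 0, 1, 1])
word[4] ^= 1  # simulate a single bit flip at position 5
print(correct_and_decode(word))  # [1, 0, 1, 1]
```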

- Quantum and Optical Computing (Beyond 2030): While likely outside the 2025–2030 window for mainstream adoption, it’s worth noting emerging paradigms that could disrupt accelerators down the line. Quantum computing has promise for certain optimization problems and maybe machine learning (quantum ML). Companies like D-Wave and IBM are exploring quantum methods for AI, but quantum computers would need to scale by many orders of magnitude to rival classical accelerators for most AI tasks. More relevant perhaps is optical computing for AI: startups like Lightmatter and LightOn have built optical neural network accelerators that use photons for matrix multiplication, aiming at extremely high speed and low energy for certain tasks. Lightmatter’s photonic chip reportedly can interface with conventional electronics to accelerate transformer models, for instance. These are in early stages and will complement, not replace, electronic chips initially. If they mature, the latter half of the 2030s could see hybrids: optical pre-processors feeding electronic cores. Additionally, neuromorphic chips (like Intel’s Loihi or chips from BrainChip) offer brain-inspired processing, which could find a niche in ultra-low-power edge AI by 2030. For now, however, these are mostly experimental or specialized.

In summary, the technology trends indicate a continued rapid improvement in AI accelerator capabilities – likely outpacing the historical improvement rate of general CPUs. By leveraging new manufacturing nodes, 3D integration, specialized AI-focused designs, and better memory/interconnect, the industry is working to ensure that hardware doesn’t become the bottleneck to AI progress. If anything, the risk is the opposite: hardware enabling AI models so large or complex that software and data become the limiting factors! For the period up to 2030, one can expect each new generation of AI chips to bring major leaps – e.g. 2–3× performance per watt gains every 1.5–2 years – through some combination of the above advancements. This will feed directly into making AI more powerful and pervasive.
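
To see what the quoted cadence implies, the per-generation gains can be compounded over the forecast window. The sketch below assumes a five-year horizon (2025 to 2030) and treats the 2–3× and 1.5–2 year figures as the two ends of a range; the function name is hypothetical and the output is an extrapolation, not a forecast.

```python
def compounded_gain(gain_per_gen, years_per_gen, horizon_years):
    """Cumulative improvement if each generation multiplies perf/watt."""
    return gain_per_gen ** (horizon_years / years_per_gen)


HORIZON = 5.0  # assumed: 2025 through 2030

low = compounded_gain(2.0, 2.0, HORIZON)   # 2x every 2 years
high = compounded_gain(3.0, 1.5, HORIZON)  # 3x every 1.5 years
print(f"Cumulative perf/watt gain by 2030: {low:.1f}x to {high:.1f}x")
```

Under these assumptions the range runs from roughly 6× to roughly 39×, which shows how sensitive any 2030 projection is to the assumed cadence.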

Regulatory and Geopolitical Environment

The global competitive dynamics of AI accelerators are deeply influenced by government policies, international trade rules, and national security considerations. The period 2025–2030 will likely see even more interplay between technology and geopolitics in this sector. Key aspects of the regulatory and geopolitical environment include:

- U.S.–China Tech Tensions: The rivalry between the United States and China in AI and semiconductors is a defining factor. The U.S. has taken a strategic stance to maintain a lead in cutting-edge chips and prevent China from acquiring hardware that could be used in advanced military AI or mass surveillance. Concretely, the U.S. Commerce Department has issued export controls that prohibit sales of high-end AI accelerators (GPUs/ASICs above certain performance thresholds) to Chinese entities without a license techstrong.ai. This led to NVIDIA’s modified A800/H800 GPUs for China, and similar adjustments by AMD and others. Exports of the most advanced chip manufacturing equipment (like ASML’s EUV lithography) to China are also restricted, with the aim of capping China’s domestic fabs at older process nodes. China, for its part, has retaliated at times, for instance by restricting exports of critical minerals used in chip production (such as gallium and germanium, alongside rare earth elements). These tit-for-tat measures essentially amount to a technology cold war around semiconductors. The implications for AI chips are significant: Chinese companies may be stuck using slightly less powerful hardware, or will channel efforts into domestic replacements. Meanwhile, U.S. firms lose some immediate market but hope to extend their innovation lead. A bifurcation of the market is possible, where China develops an ecosystem with its own supply chain (fabs, EDA software, etc.) if the standoff continues. Both countries are also pouring money into research (the U.S. launching, for example, a National AI Research Resource, and China funding national labs) to push the envelope. For companies, this environment means navigating export compliance carefully (for example, removing certain high-speed interconnect features from products destined for China) and possibly facing competition from new Chinese entrants if they succeed with homegrown designs.

- Chips Acts and Subsidies: Recognizing chips as critical infrastructure, multiple governments have enacted subsidy programs to boost domestic semiconductor production and innovation. The U.S. CHIPS and Science Act (2022) set aside ~$52 billion to incentivize chip manufacturing in the U.S. (with $39B in direct subsidies for fabs, plus R&D funds) nextmsc.com. This is leading to new fabs in Arizona, Texas, New York, etc., and some of these will produce logic chips relevant to AI (TSMC’s Arizona plant, Intel’s expansions). The Act also promotes advanced packaging capabilities and workforce development. Similarly, Europe’s Chips Act (2023) commits €43B with the goal of doubling Europe’s share of global production to 20% eetimes.eu. It has already attracted announcements of big projects, such as Intel in Germany and STMicro/GlobalFoundries in France. Japan, South Korea, and India have launched their own initiatives (Japan offering subsidies for a TSMC-backed fab, India trying to woo foundries with financial incentives). These moves, while not specific to AI chips, will shape the supply chain by roughly 2027–2030, and we expect a more geographically distributed fab landscape. That could improve resilience (less concentration in Taiwan) but also means higher production costs in some cases (Western fabs can be pricier than Asian counterparts). For AI chip designers, these policies might mean more local fab options and maybe preferential treatment for using them (some U.S. government procurement might favor chips made in America, for instance). Another effect is an increase in public-private collaboration: national labs working with startups to test new AI chips, for example, or defense agencies funding next-gen chip research (DARPA in the U.S. runs programs like ERI, the Electronics Resurgence Initiative, which supports novel chip designs, including for AI). In essence, industrial policy is back in fashion, and semiconductors are at the heart of it.

- Export/Import Regulations and Standards: Beyond the U.S.-China duel, there are broader regulatory considerations. Countries are updating export control lists for dual-use technologies, and AI chips certainly have dual-use potential (commercial and military). For instance, high-end FPGAs and GPUs have long been on controlled lists due to possible military applications (like missile guidance or code-breaking). Companies must keep track of these lists and often need export licenses to sell to certain countries. On the import side, some nations might impose tariffs or require approvals for importing foreign AI hardware if they view it as a security matter (somewhat analogous to how telecom equipment from certain countries is restricted). Additionally, international standards bodies might influence the market: if the IEEE or ISO issues standards for AI chip safety or interoperability, for example, compliance could become de facto necessary. Currently, AI accelerator designs are mostly proprietary, but there’s movement toward standardized interconnects (like UCIe for chiplets) and data formats (such as the FP8 numeric format recently proposed for AI). Governments sometimes push for open standards to avoid lock-in or to enable local players. Europe, for one, prefers open ecosystems (the EU has funded an open-source RISC-V initiative as an alternative to proprietary cores). Thus, regulatory nudges toward openness or interoperability could shape product roadmaps (e.g. making sure an accelerator supports an open programming model so it can be used in government projects).

- Antitrust and Competition Law: As a relatively new but fast-consolidating field, the AI chip market could draw scrutiny from antitrust regulators. For example, NVIDIA’s attempted acquisition of Arm, announced in 2020, was abandoned in early 2022 after the U.S. FTC sued to block it and regulators in the UK and EU opened in-depth investigations, amid concerns it would give NVIDIA too much control over a fundamental IP provider for the industry. This indicates regulators are watching big chip mergers closely. If any player becomes overwhelmingly dominant, we could see pressure for fair licensing (for example, if one company’s software stack becomes essential, regulators might push it to remain open or compatible for others). So far, NVIDIA’s dominance in AI hasn’t triggered action, likely because competition exists and the field is evolving, but it’s not inconceivable that governments could step in if, say, NVIDIA were to try to acquire a major direct competitor or otherwise stifle competition. On the other hand, governments might support consolidation domestically to create national champions (China has merged some state-backed chip companies to pool resources, and one could envision it facilitating, for instance, a merger of smaller AI chip firms to better challenge NVIDIA). Competition law also affects pricing: accusations of predatory pricing or unfair bundling can arise in enterprise tech. The presence of strong open alternatives (e.g. the RISC-V instruction set architecture) is partly an attempt to ensure no single company can completely control the ecosystem. Regulatory balance here is tricky: policymakers want to encourage innovation (often by protecting smaller innovators) but also want their national firms to be powerful on the global stage.