- $100 Billion Partnership: Nvidia will invest up to $100 billion in OpenAI to build massive AI data centers totaling at least 10 gigawatts of power capacity reuters.com openai.com. This is one of the largest AI infrastructure deals ever, aimed at deploying millions of Nvidia GPUs for OpenAI’s next-generation models.

- Strategic Alliance: Announced in late September 2025, the Nvidia–OpenAI partnership is a landmark tie-up between the world’s leading AI chipmaker and the creator of ChatGPT. It gives Nvidia a financial stake in OpenAI and guarantees OpenAI priority access to Nvidia’s cutting-edge AI hardware reuters.com [1].

- Scale & Timeline: The plan calls for 10 GW of new data center capacity dedicated to AI, on the order of the power used by 10 million homes. The first 1 GW phase is slated to go online in H2 2026 using Nvidia’s new “Vera Rubin” supercomputing platform openai.com. OpenAI will co-design systems with Nvidia, aligning hardware and software roadmaps to train ever-larger AI models on the path to artificial general intelligence (AGI) openai.com [2].

- Complements Other Partnerships: This deal supplements OpenAI’s existing cloud partnership with Microsoft and ongoing collaborations with Oracle and SoftBank. Microsoft – OpenAI’s early investor and Azure cloud host – recently allowed OpenAI to pursue additional infrastructure partners techcrunch.com. The Nvidia partnership will “complement…existing partnerships” with Microsoft, Oracle, and others rather than replace them [3].

- Industry Impact: News of the alliance sent Nvidia’s stock to record highs (up ~4% intraday) and boosted shares of partners like Oracle by ~5% reuters.com. Analysts say the deal underscores skyrocketing demand for AI compute and cements Nvidia’s lead in AI hardware reuters.com. Competitors and Big Tech firms are now racing to secure their own AI infrastructure, fueling a broader “AI arms race” and raising concerns about the energy requirements of these mega-scale data centers techcrunch.com [4].

A Landmark Nvidia–OpenAI Data Center Deal

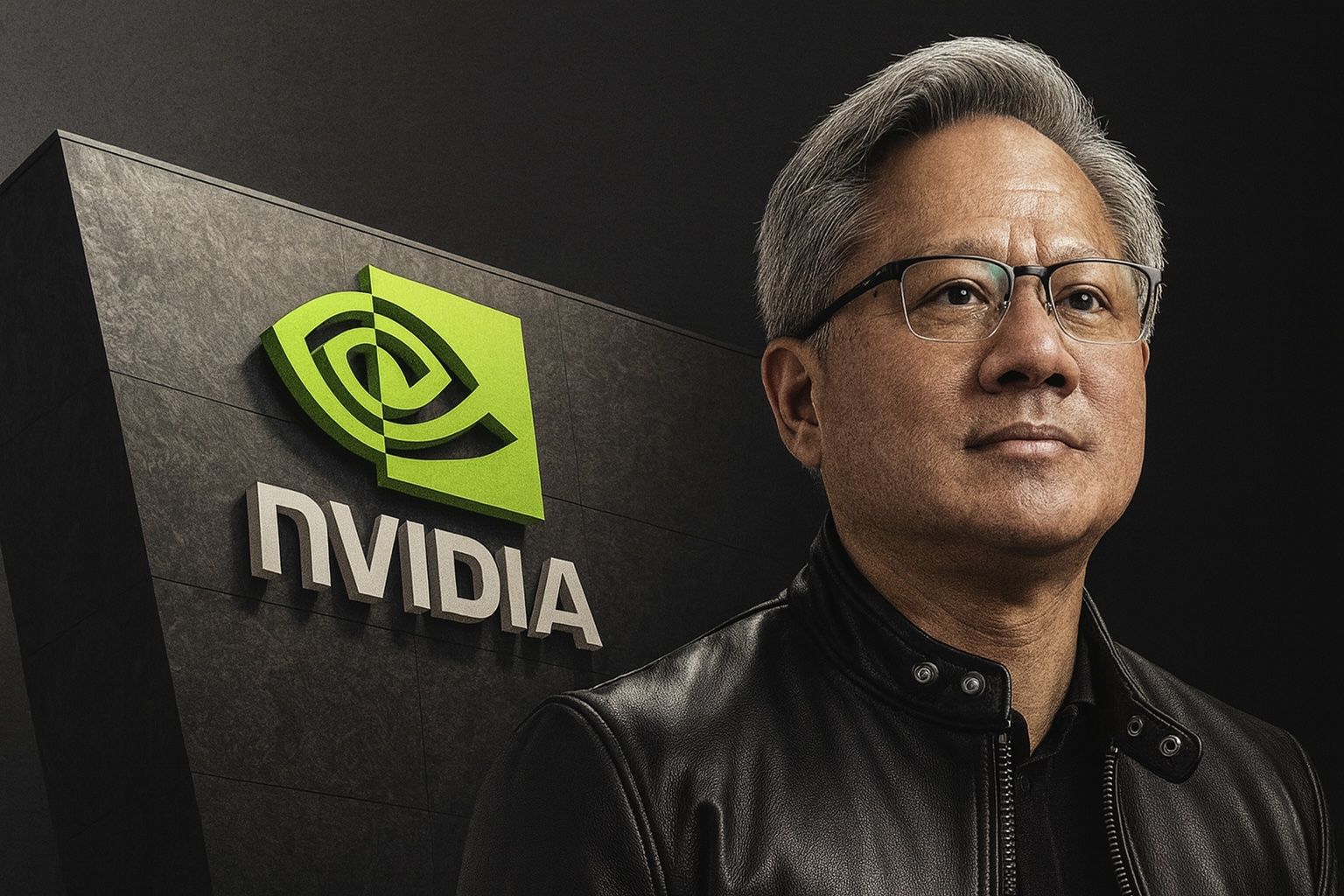

On September 22, 2025, OpenAI and Nvidia announced an unprecedented strategic partnership to build out a massive new AI computing infrastructure openai.com. Under a letter of intent, Nvidia will supply OpenAI with at least 10 GW worth of AI systems – powered by its latest GPUs – and invest up to $100 billion in OpenAI as these data centers come online openai.com. The goal is to dramatically expand OpenAI’s capacity to train and deploy advanced AI models, including future iterations of GPT and other AI systems “on the path to deploying superintelligence” openai.com. In practical terms, 10 GW of computing capacity is enormous: on the order of millions of AI chips, drawing roughly the output of ten large power plants techcrunch.com. “Nvidia and OpenAI have pushed each other for a decade… This investment and infrastructure partnership mark the next leap forward – deploying 10 gigawatts to power the next era of intelligence,” said Nvidia CEO Jensen Huang [5].
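The “millions of chips” and “ten power plants” comparisons follow from simple division. The sketch below assumes roughly 1 GW per large power plant, about 1 kW of average draw per home, and about 2 kW of all-in facility power per GPU (servers, networking, and cooling included). All three are illustrative assumptions, not figures published by OpenAI or Nvidia.

```python
# Back-of-envelope equivalents for a 10 GW AI build-out.
# Every per-unit figure below is an illustrative assumption,
# not a number published by OpenAI or Nvidia.

TOTAL_POWER_W = 10e9       # 10 GW, the figure in the announcement
LARGE_PLANT_W = 1e9        # assumption: ~1 GW output per large power plant
AVG_HOME_W = 1e3           # assumption: ~1 kW average household draw
ALL_IN_W_PER_GPU = 2e3     # assumption: ~2 kW per GPU incl. servers, network, cooling


def equivalents(total_w: float) -> dict[str, float]:
    """Express a power budget as power plants, homes, and GPUs."""
    return {
        "power_plants": total_w / LARGE_PLANT_W,
        "homes": total_w / AVG_HOME_W,
        "gpus": total_w / ALL_IN_W_PER_GPU,
    }


if __name__ == "__main__":
    for name, value in equivalents(TOTAL_POWER_W).items():
        print(f"{name:>12}: {value:,.0f}")
```

At an assumed 1 kW all-in per GPU, the same 10 GW would support about 10 million GPUs, so estimates of “millions” shift substantially with the per-chip assumption.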

Financially, the deal is structured as a two-pronged arrangement. Nvidia will take a non-controlling equity stake in OpenAI, and OpenAI will use that cash to purchase Nvidia hardware (servers and GPUs), so much of the investment flows back to Nvidia as revenue reuters.com reuters.com. The companies plan to finalize details in the coming weeks, but a person familiar with the matter said the first $10 billion of Nvidia’s investment would kick in once a definitive hardware purchase agreement is signed reuters.com. OpenAI was recently valued at around $500 billion privately reuters.com, so even the full $100 billion, if invested at that valuation, would buy a stake below 20%. Notably, OpenAI will remain independent and Microsoft’s partnership continues unchanged, according to sources, even as OpenAI diversifies its compute suppliers reuters.com. In fact, earlier this month OpenAI and main backer Microsoft agreed in principle to restructure OpenAI’s corporate setup into a more conventional for-profit model, a move likely aimed at enabling major outside investments like Nvidia’s [6].
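The “below 20%” reasoning is standard post-money arithmetic: a new investor owns the investment divided by the pre-money valuation plus the investment. The sketch assumes, hypothetically, that the full $100 billion goes in at a single ~$500 billion pre-money valuation; the actual tranche pricing has not been disclosed.

```python
# Hypothetical illustration: the deal's actual tranche pricing is not public.

def post_money_stake(investment_bn: float, pre_money_bn: float) -> float:
    """Ownership fraction a new investor gets for `investment_bn` at `pre_money_bn`.

    Post-money valuation = pre-money + new cash, and the investor owns
    new cash / post-money.
    """
    if investment_bn < 0 or pre_money_bn <= 0:
        raise ValueError("need investment >= 0 and pre-money valuation > 0")
    return investment_bn / (pre_money_bn + investment_bn)


# Assumption: all $100B invested at once at a ~$500B pre-money valuation.
stake = post_money_stake(100, 500)
print(f"{stake:.1%}")  # 16.7%
```

If later tranches were priced at higher valuations, each dollar would buy less of the company, so the cumulative stake would land lower still.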

Timeline and scale: The build-out will not happen overnight. The first gigawatt-scale tranche of Nvidia-powered capacity is targeted to be online by late 2026 reuters.com. This initial 1 GW will run on Nvidia’s “Vera Rubin” platform openai.com – a new data center system architecture named after the late astronomer Vera Rubin, which pairs Nvidia’s next-generation Vera CPU and Rubin GPU and presumably integrates high-performance networking and power delivery. Subsequent phases will roll out gradually as data center sites are constructed and powered. Nvidia intends to invest “progressively as each gigawatt is deployed,” a stage-gated approach tied to OpenAI achieving build milestones openai.com. Ultimately, the partnership envisions at least 10 GW of capacity dedicated to OpenAI – an almost unimaginable scale for a single AI platform. For perspective, the world’s top supercomputers today each draw only tens of megawatts; OpenAI’s project is hundreds of times larger, reflecting the compute hunger of frontier AI models.
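The stage-gated structure and the supercomputer comparison can both be made concrete with a few lines of arithmetic. This sketch hypothetically splits the $100 billion evenly across the 10 GW target (the real tranche sizes are not public) and assumes a present-day flagship supercomputer draws about 30 MW.

```python
# Hypothetical stage-gated schedule. Assumes the "up to $100 billion" is split
# evenly across the 10 GW target; actual tranche sizes have not been disclosed.
TOTAL_COMMITMENT_BN = 100
TARGET_GW = 10


def cumulative_investment(gw_deployed: int) -> float:
    """Investment released (in $B) once `gw_deployed` gigawatts are online."""
    gw = max(0, min(gw_deployed, TARGET_GW))  # clamp to [0, TARGET_GW]
    return TOTAL_COMMITMENT_BN * gw / TARGET_GW


# Assumption: a present-day flagship supercomputer draws about 30 MW.
TOP_SUPERCOMPUTER_MW = 30
ratio = TARGET_GW * 1_000 / TOP_SUPERCOMPUTER_MW

print([cumulative_investment(g) for g in (0, 1, 5, 10, 12)])
# [0.0, 10.0, 50.0, 100.0, 100.0]
print(f"~{ratio:.0f}x a {TOP_SUPERCOMPUTER_MW} MW system")  # ~333x a 30 MW system
```

Even at double the assumed supercomputer power draw, the ratio stays above 150x, consistent with “hundreds of times larger.”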

Both companies are explicit about the strategic rationale: more compute equals more advanced AI. “Everything starts with compute,” OpenAI CEO Sam Altman said, calling compute infrastructure “the basis for the economy of the future” and essential to “create new AI breakthroughs and empower people and businesses…at scale” openai.com. By securing a dedicated pipeline of Nvidia’s best hardware, OpenAI aims to stay at the cutting edge in the increasingly competitive AI landscape reuters.com. For Nvidia, the deal not only guarantees a massive future customer for its chips, but also gives it a direct equity stake in AI’s hottest startup reuters.com. This deepens Nvidia’s influence in the AI ecosystem – a significant move as rival chipmakers and cloud providers vie for pieces of the AI market. “OpenAI and Nvidia will work together to co‑optimize their roadmaps” across software and hardware, the companies noted, aligning OpenAI’s model development with Nvidia’s chip design to wring out maximum performance openai.com. In effect, OpenAI is betting its AI “factory” on Nvidia as the preferred supplier, while Nvidia is betting on OpenAI to drive the next decade of AI breakthroughs.

Strategic Objectives and Unprecedented Scale

This partnership is driven by mutual strategic objectives. For OpenAI, the motive is clear: ensure access to unparalleled compute power to maintain an edge in developing powerful AI models. OpenAI co-founder and former chief scientist Ilya Sutskever, who left the company in 2024, has noted that training future “superintelligence”-level models could require vastly more compute than today’s – a need this deal squarely addresses. Sam Altman emphasized that “compute infrastructure will be the basis for the economy of the future” and a foundation for AI progress openai.com. By locking in a long-term supply of Nvidia’s top-tier GPUs, OpenAI can plan ambitious successors to GPT-5 and other advanced systems with far less risk of hardware shortages or cloud capacity limits. It also potentially gains preferential pricing and early access to Nvidia’s new releases. In practical terms, OpenAI will work with Nvidia to design customized systems for its needs: everything from the GPU server racks to the networking and cooling, optimized for giant AI workloads openai.com. This level of co-design hints at just how specialized and large-scale AI infrastructure is becoming – more akin to building a power plant than renting some cloud servers.

For Nvidia, the deal secures a captive customer for an immense volume of its chips well into the future, reinforcing its dominance in the AI boom. OpenAI is already one of the biggest buyers of high-end AI accelerators (it reportedly used tens of thousands of Nvidia GPUs to train ChatGPT/GPT-4). Committing to supply 10 GW worth of hardware ups the ante significantly. Analysts note this move “throws cold water on the idea that rival chipmakers or in-house silicon…are anywhere close to disrupting Nvidia’s lead” in AI computing reuters.com. Despite efforts by Google, Amazon, and others to develop alternative AI chips, Nvidia’s platform remains the gold standard for training “frontier” models reuters.com. The partnership also gives Nvidia a stake in OpenAI’s success – aligning its fortunes with the AI leader’s growth. In effect, Nvidia is investing in driving demand for its own product: the more OpenAI succeeds and pushes the boundaries of AI, the more GPUs (and DGX systems, networking gear, etc.) it will need to buy from Nvidia. This synergy is summed up by CEO Jensen Huang’s remark that the alliance will “power the next era of intelligence” by pairing Nvidia’s infrastructure with OpenAI’s AI research [5].

The sheer scale of 10 GW of AI data centers cannot be overstated. OpenAI and Nvidia describe it as “millions of GPUs” spread across multiple cutting-edge facilities openai.com. By comparison, the cloud infrastructure used for training GPT-4 (one of the most powerful models as of 2023) is estimated to have been in the low tens of thousands of GPUs. Combining far more GPUs with faster chips, we are talking about potentially hundreds of times more compute capacity. OpenAI’s user base has grown rapidly – the company notes it now serves 700 million weekly active users across ChatGPT and its API openai.com – and future AI models will be even more resource-intensive. Delivering snappy responses and advanced AI features to billions of users (and powering AI across industries) will demand huge backend compute. This 10 GW initiative is designed to meet those needs. It also signals confidence that market demand for AI will continue rising: investors are effectively funding a gigantic “AI factory” for OpenAI on the expectation that its products (like ChatGPT, GPT-5, enterprise tools, etc.) will generate enormous revenue to justify the cost.
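The “hundreds of times” figure combines two multipliers: more GPUs and faster GPUs. In the sketch below, the 25,000-GPU baseline is an assumption standing in for the paragraph’s “low tens of thousands,” the 2 to 5 million range is an assumed reading of “millions of GPUs,” and `per_gpu_speedup` is a hypothetical placeholder for generational performance gains, not a measured benchmark.

```python
BASELINE_GPUS = 25_000  # assumption: a GPT-4-era cluster in the "low tens of thousands"


def compute_ratio(planned_gpus: int, baseline_gpus: int = BASELINE_GPUS,
                  per_gpu_speedup: float = 1.0) -> float:
    """Raw-compute multiple of a planned fleet over a baseline cluster.

    per_gpu_speedup is a hypothetical factor for newer chips being faster;
    1.0 compares GPU counts alone.
    """
    return planned_gpus * per_gpu_speedup / baseline_gpus


for planned in (2_000_000, 5_000_000):  # assumed range for "millions of GPUs"
    print(f"{planned:,} GPUs: {compute_ratio(planned):.0f}x by count alone")
```

By count alone the multiple is 80x to 200x under these assumptions; any per-chip speedup above 1.0 multiplies it further, which is how the total can reach hundreds of times.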

Crucially, both companies frame the project in almost historic terms. Altman said OpenAI will use what it builds with Nvidia to “empower people and businesses…at scale” openai.com, and even characterized compute infrastructure as a pillar of the future economy openai.com. The partnership is portrayed not just as a business deal, but as laying groundwork for the “economy of the future” and accelerating progress toward AGI (artificial general intelligence), which OpenAI’s mission aspires to openai.com. Such language underscores how strategic advanced AI capabilities have become – akin to a new industrial revolution driven by computing power. By investing $100 billion, Nvidia is effectively bankrolling an AI Manhattan Project of sorts, centered on OpenAI. The outcome, if all goes well, would be an AI computing grid of unprecedented size fueling innovations that could reshape software, businesses, and daily life.

Racing the Titans: How It Compares to Other AI Mega-Projects

The Nvidia–OpenAI alliance arrives amid a frenzy of AI infrastructure investments across the tech industry. In the past few years, major AI labs and cloud companies have struck big-money deals to secure the computing muscle needed for modern AI – collectively amounting to tens or even hundreds of billions of dollars. This new partnership takes that trend to new heights, but it’s useful to compare it with other notable initiatives:

- Microsoft and OpenAI (Azure Supercomputer): Microsoft was OpenAI’s first major corporate backer, investing $1 billion in 2019 and later pouring in a reported $13–14 billion in total funding and cloud credits techcrunch.com. In exchange, Microsoft’s Azure became the exclusive cloud provider for OpenAI’s research. Microsoft built a dedicated supercomputer for OpenAI (one of the world’s most powerful at the time) and supplied the massive Azure GPU capacity that trained models like GPT-3 and GPT-4 techcrunch.com. This close partnership kickstarted the current AI boom and paid off handsomely for both sides – Microsoft integrated OpenAI’s tech (ChatGPT, GPT-4) into products like Bing and Office, and OpenAI gained access to vast amounts of Azure compute. However, as model sizes grew, Azure sometimes struggled to meet demand. In January 2025, OpenAI and Microsoft revised their arrangement to remove Azure’s exclusivity techcrunch.com. Microsoft gained a “right of first refusal” on OpenAI’s future cloud needs – meaning OpenAI would ask Azure first, but could use other partners if Microsoft couldn’t deliver techcrunch.com. This pivotal change opened the door for collaborations with firms like Nvidia, Oracle, and others. Notably, Microsoft itself has been developing an AI chip (codenamed Athena and since unveiled as Maia 100) to reduce its own reliance on Nvidia, and has explored other AI models beyond OpenAI’s techcrunch.com. Still, Microsoft remains a key OpenAI backer and will continue providing Azure cloud services as OpenAI expands, including through new initiatives like Stargate openai.com [7].

- Amazon and Anthropic (AWS Partnership): In a similar vein, Amazon Web Services (AWS) forged a major alliance with Anthropic, a leading AI startup founded by former OpenAI researchers. In 2023, Amazon announced it would invest up to $4 billion (later expanded to $8 billion) in Anthropic and become its primary cloud provider techcrunch.com. Anthropic gains access to extensive AWS computing power (and specialized hardware), while Amazon receives partial ownership and showcases Anthropic’s AI models on AWS. In fact, Anthropic has been working closely with AWS engineers to optimize Amazon’s custom AI chips (such as its Trainium and Inferentia processors) for Anthropic’s workloads techcrunch.com. This includes low-level tweaks to better suit large-language-model training on AWS silicon. The partnership is mutually beneficial: Anthropic diversifies its compute beyond Google (which was an earlier investor), and Amazon enters the AI race by hosting one of the most promising AI labs on its cloud. The Nvidia–OpenAI deal eclipses it in size, but both reflect a common pattern – cloud giants investing money and compute into AI startups in return for strategic alignment.

- Google and DeepMind (and Others): Unlike Microsoft or Amazon, Google has kept its premier AI efforts largely in-house. Google acquired DeepMind in 2014 and also built Google Brain internally – the two were combined into Google DeepMind in 2023 to concentrate the company’s AI talent. Google has spent untold billions building out its own AI supercomputing infrastructure, centered around its proprietary TPU (Tensor Processing Unit) chips instead of Nvidia GPUs. For instance, Google’s Cloud TPU v5p accelerators were introduced as part of an “AI hypercomputer” designed for next-gen AI training cloud.google.com. Google hasn’t publicized a single giant number like “$100B,” but CEO Sundar Pichai and DeepMind’s leaders have indicated that virtually every aspect of Google’s business is being refocused around AI. Google is reportedly investing heavily to support its Gemini AI models – rivals to OpenAI’s GPT series – including upgrading data centers and potentially spending $1–2 billion on Nvidia GPUs to supplement its TPUs techcrunch.com reddit.com. Additionally, Google invested $300 million in Anthropic in early 2023 (for a roughly 10% stake) and later committed more, even as Anthropic made Amazon its primary cloud partner, illustrating how even Google sought to hedge its bets. While Google hasn’t sought external “AI cloud” customers in the same equity-for-compute manner, it offers its TPU infrastructure to outside AI firms via Google Cloud, and has signed deals with smaller AI startups to be their primary compute partner techcrunch.com. In short, Google’s strategy is to vertically integrate – owning both the AI research (DeepMind) and the full stack of hardware/software – to compete in the arms race.

- Meta (Facebook) – Do-It-Yourself at Extreme Scale: Meta Platforms (Facebook’s parent company) is pursuing the AI compute race through massive internal investments. CEO Mark Zuckerberg has stated Meta will spend $600 billion on U.S. data center infrastructure by 2028 to support AI and other data-heavy applications techcrunch.com techcrunch.com. In just the first half of 2025, Meta spent an extra $30 billion year-over-year, largely to bolster its AI capacity techcrunch.com. Meta stands out for building some of the world’s largest AI supercomputers for itself rather than partnering for external cloud. It recently unveiled plans for a new 2,250-acre data center campus in Louisiana – dubbed “Hyperion” – estimated to cost $10 billion and provide 5 GW of power capacity when completed techcrunch.com. Notably, Meta struck a deal with a nearby nuclear power plant to supply the enormous electricity load for Hyperion techcrunch.com, highlighting the energy challenge of AI at this scale. Another mega-campus in Ohio (nicknamed “Prometheus”) is slated to come online in 2026, powered by dedicated natural gas turbines techcrunch.com. Meta’s approach is to own the facilities outright and develop its AI models (like Llama 2 and beyond) on this private infrastructure. It has even designed custom AI chips internally, though reportedly it hit setbacks and has purchased hundreds of thousands of Nvidia H100 GPUs in the interim. Meta’s massive spending shows that the compute arms race isn’t limited to startups – even internet giants are retooling their entire physical infrastructure for AI. In scale, OpenAI’s 10 GW plan with Nvidia is in the same league as Meta’s Hyperion and Prometheus campuses combined, and Meta appears poised to keep pouring capital into matching the AI compute of any peer.

- Oracle, SoftBank, and “Stargate”: An interesting parallel initiative is the so-called Stargate Project, a monumental plan announced in early 2025 to invest up to $500 billion over four years in AI infrastructure for OpenAI openai.com openai.com. This venture – named after a sci-fi film – is backed by Japan’s SoftBank (as lead financier), OpenAI, Oracle, and other partners, with the political blessing of the U.S. government. Shortly after President Trump’s inauguration in January 2025, the White House trumpeted Stargate as “the largest AI infrastructure project in history” techcrunch.com. The concept was that SoftBank would foot the bill, Oracle would build and operate new data centers, and OpenAI would be the anchor tenant guiding the tech specs techcrunch.com. Indeed, Oracle’s cloud division leapt at the opportunity – one early agreement saw Oracle and OpenAI partner on 4.5 GW of data center capacity in the U.S., as part of Stargate openai.com. Oracle’s stock surged on expectations of huge cloud contracts, at one point briefly making Larry Ellison (Oracle’s founder) the world’s richest man techcrunch.com. However, such an astronomical project naturally raised skepticism. Elon Musk (who has a competing AI venture and a history with OpenAI) openly questioned whether “the project [even] had the available funds”, casting doubt on SoftBank’s ability to raise $500 billion techcrunch.com. By August 2025, reports surfaced that the Stargate partners were struggling to reach consensus on execution techcrunch.com. Still, construction has begun – notably in Abilene, Texas, where eight massive data center buildings are underway as part of a Stargate campus slated to be finished by 2026 techcrunch.com. The new Nvidia–OpenAI deal can be seen as complementary to these efforts: in fact, OpenAI says the Nvidia partnership “complements the deep work [we’re] doing with collaborators including Microsoft, Oracle, SoftBank, and Stargate partners” openai.com. 
It appears OpenAI is leaving no stone unturned – simultaneously working with cloud providers (Azure, Oracle), financial backers (SoftBank), and now a hardware giant (Nvidia) to amass as much compute as possible. Whether all of these initiatives fully materialize or not, the clear message is that unprecedented resources are being mobilized to secure leadership in AI.

In summary, the Nvidia–OpenAI tie-up stands out for its scale ($100 billion and 10 GW) and the direct pairing of a chipmaker with an AI lab. It is both a sign of OpenAI’s independence (branching beyond its Microsoft Azure roots) and Nvidia’s confidence that pouring money into AI infrastructure will pay off. Other companies are pursuing different paths – Amazon and Microsoft via cloud deals, Google and Meta via in-house spending, Oracle via ambitious contracting – but all are converging on the same recognition: tomorrow’s AI breakthroughs require an almost unfathomable amount of computation. This has created an arms race not just in model development, but in building the physical factories of AI – the data centers – with each alliance leapfrogging the last.

Investor and Industry Reactions

The announcement of Nvidia’s $100 billion investment in OpenAI reverberated across the tech and finance worlds. Investors immediately took the deal as a huge bullish signal for AI. Nvidia’s stock price jumped as much as 4.4% to a new record high on the day, reflecting optimism that Nvidia will see enormous future demand (and revenue) from OpenAI reuters.com. In effect, the market sees Nvidia not just as selling chips, but as a stakeholder in OpenAI’s growth – a potentially lucrative position if OpenAI’s valuation (around $500 billion) continues to climb reuters.com. OpenAI itself is not publicly traded, but this deal has fed investor enthusiasm around all AI-centric companies. Shares of Oracle Corporation also surged ~5% on the news reuters.com. Oracle, though not directly part of the Nvidia–OpenAI deal, is heavily involved in OpenAI’s broader infrastructure plans (via Stargate), so traders interpreted the partnership as further validation that Oracle’s cloud business will see massive AI workloads coming its way reuters.com. Indeed, any firm seen as supplying “picks and shovels” for the AI boom – from chipmakers to data center builders – got a boost from the sheer scale of this announcement.

Industry experts have likewise been struck by the implications. “Demand for Nvidia GPUs is effectively baked into the development of frontier AI models,” noted Jacob Bourne, an analyst at Emarketer (formerly Insider Intelligence), adding that deals like this alleviate worries that Nvidia might lose sales due to U.S. export bans to China reuters.com. (U.S. restrictions have limited Nvidia’s ability to sell its top-tier AI chips to Chinese tech firms, but exploding demand in the U.S. and allied countries is more than picking up the slack reuters.com.) Bourne also stated the partnership “throws cold water” on the notion that Nvidia’s dominance is under imminent threat reuters.com. Despite dozens of startups working on AI accelerators and efforts by companies like Google, Amazon, and Meta to develop custom chips, Nvidia’s end-to-end AI platform (GPUs, software libraries, networking) remains far ahead. This deal underscores that even OpenAI – with all its expertise – prefers to build on Nvidia hardware rather than gamble on unproven alternatives. As Stacy Rasgon, Bernstein’s semiconductor analyst, put it in one commentary: “If you’re training cutting-edge AI, you pretty much have to go with Nvidia at this point.” The immediate losers in sentiment are likely Nvidia’s would-be rivals such as AMD (which has been pushing its MI300 AI GPUs) – the stock surge and analyst comments suggest a belief that Nvidia’s grip on the market will not loosen soon.

At the same time, the mammoth size of the deal has raised eyebrows in terms of regulatory scrutiny. A $100 billion commitment between two industry leaders inevitably draws antitrust interest. U.S. regulators had already been looking at concentration of power in AI: the Justice Department and FTC reached an agreement in mid-2024 to enable investigations into the roles of Microsoft, OpenAI, and Nvidia in the AI market reuters.com. Critics worry that a few big players could end up cornering AI compute resources, which could stifle competition. The 10 GW pact could be seen as foreclosing opportunities for other chip suppliers or cloud providers. However, any formal action may depend on whether this partnership is viewed as anti-competitive or as a natural vertical arrangement. Notably, the current U.S. administration (under President Trump as of 2025) has so far signaled a lighter touch on tech competition issues compared to the prior administration reuters.com. Indeed, Trump himself championed OpenAI’s Stargate project as boosting American AI dominance techcrunch.com. This suggests political winds might favor such alliances in the name of national AI leadership – at least for now. Still, it’s an area to watch: if OpenAI and Nvidia together come to dominate AI computing too completely, regulators could step in down the line.

Competing tech firms are certainly taking note and reacting in their own ways. Google, for example, has been relatively quiet publicly but is undoubtedly doubling down on its TPU strategy to ensure it isn’t overtaken in raw compute might. Just days after the Nvidia–OpenAI news, Google’s DeepMind unit rolled out a new version of its flagship model Gemini and emphasized the efficiency of Google’s in-house hardware, subtly signaling that it does not need Nvidia for its AI. Amazon, on the other hand, may see this as validation of its heavy investment in Anthropic – but also a warning that it cannot let up. Amazon’s AI chief might push to accelerate development of the next-gen Trainium chips or even consider similar equity-cloud deals with other AI startups to keep AWS in the game. Meta’s leadership, which has been vocally proud of their DIY approach, could feel pressure to show results from their $600 billion infrastructure bet – perhaps by releasing breakthrough AI services or open-sourcing new models to demonstrate that their compute is being put to good use.

Meanwhile, Elon Musk – ever the outspoken rival – will likely use this news to bolster the narrative for his own AI venture (xAI). Musk has frequently warned about a single company (like OpenAI) becoming too powerful in AI. Hearing that OpenAI is getting 10 GW more muscle courtesy of Nvidia might spur Musk to call for more openness or even government intervention to ensure AGI is developed safely and democratically. His xAI has reportedly built a substantial compute facility of its own in Memphis, Tennessee, complete with a private power plant, though on a far smaller scale techcrunch.com. If anything, Musk’s skepticism about Stargate’s funding could extend to this: does OpenAI truly need $100 billion of compute, and is it wise for one AI lab to wield so much? These debates are now raging in tech circles and online forums.

In the investment community, there’s also discussion about returns and risks. $100 billion is an astronomical sum – even spread over years – and it raises the question: how will OpenAI eventually monetize all this capacity? OpenAI’s products (ChatGPT, APIs, etc.) are popular, but the company will need significant revenue growth to justify these infrastructure costs. Some investors are enthusiastic, seeing OpenAI as potentially the next trillion-dollar company (“the Google of the AI era”). Others are more cautious, pointing out that the history of supercomputing projects is mixed: large outlays don’t always translate to commensurate breakthroughs. If AI progress stalls or hits theoretical limits, such huge data centers could become underutilized. Nonetheless, in late 2025 the sentiment is largely optimistic – AI is viewed as the future, and this deal as a bold move to seize that future. As one tech investor put it, “It’s a moonshot, but if AGI is as transformative as hoped, the upside is almost infinite. You don’t want to bet against that.”

Implications for AI Hardware and Data Center Markets

Beyond the immediate players, the Nvidia–OpenAI partnership carries major implications for the broader hardware and data center industry. First and foremost, it reinforces the centrality of GPUs as the workhorse of AI. In recent years, there have been many attempts to challenge GPUs with more specialized AI chips (TPUs, neuromorphic chips, FPGA-based accelerators, etc.). But by committing to Nvidia’s platform at such scale, OpenAI is effectively saying that Nvidia GPUs (and their software ecosystem, CUDA, etc.) will remain the backbone of cutting-edge AI for the foreseeable future. This is likely to chill enthusiasm for competing silicon in the short term. Companies like AMD, Cerebras, Graphcore, and others will have a tougher pitch to make to big AI customers now: OpenAI – the most compute-hungry of them all – chose Nvidia. One analyst noted the deal “also eases concerns” Nvidia had about losing business to Chinese chip rivals, since now so much future demand is locked in with OpenAI and partners reuters.com. In the long run, the supply chain for AI chips may need to expand dramatically to meet not just OpenAI’s needs but everyone’s. Nvidia is already deepening its industry ties – for example, it recently agreed to invest $5 billion in Intel and to co-develop data center and PC chips with it reuters.com. We may see more such collaborations, including with TSMC (Nvidia’s primary manufacturer) and other fabs, to produce the millions of GPUs destined for OpenAI and others.

The deal also signifies a blurring of lines between chip vendors and cloud providers. Traditionally, Nvidia sold chips to data center operators or cloud companies, who in turn rented out compute to AI developers. Now, Nvidia is directly investing in an AI developer (OpenAI) and effectively becoming a quasi-cloud provider for that one client (by delivering complete systems via the Vera Rubin platform). This could set a precedent: we might see other hardware firms offer “investment for usage” deals. For instance, could AMD invest in a rising AI startup in return for that startup agreeing to use AMD accelerators exclusively? It’s not far-fetched in this climate. Such arrangements could accelerate innovation (by giving startups budget and hardware), but also raise fairness questions as they might lock startups into particular tech stacks. We’re watching the vertical integration of the AI stack in real time – from silicon all the way to the end-user application – often under the roof of multi-faceted partnerships.

In the data center industry, the plans for 10 GW of new capacity are both a big opportunity and a formidable challenge. Companies that build and equip data centers (construction firms, cooling and power specialists, networking equipment makers) are looking at potentially years of work coming from this and similar projects. OpenAI is already soliciting partners for power, land, and construction – in early 2025 it put out RFPs for sites with hundreds of megawatts of available power and for engineering firms to help design giant server farms openai.com. A single 1 GW data center campus is a mega-project on its own; ten times that will likely be spread across multiple sites globally (the U.S. for sure, and possibly Europe or other regions where OpenAI has users or strategic partnerships, as hinted by initiatives like “OpenAI for Countries” openai.com openai.com). We can expect fierce competition among states and countries to host these facilities, as they bring jobs and prestige (though also huge power needs). For instance, Texas has already landed one OpenAI “Stargate” center in Abilene openai.com, and the UK just announced “Stargate UK” – a partnership involving Nvidia and local players to build compute capacity for OpenAI on British soil openai.com. The geopolitics of AI now includes luring data center investments much like countries compete for car factories or chip fabs.

On the flip side, packing so many GPUs into data centers creates technical hurdles: power delivery, heat dissipation, and network bandwidth become critical constraints. A modern AI supercomputer rack draws tens of kilowatts, and the densest current designs exceed 100 kW; several thousand to tens of thousands of such racks add up to a gigawatt and generate immense heat. This has spurred innovation in cooling (from advanced liquid cooling to novel cooling loops using refrigerants) and in data center design (e.g. new layouts to minimize cable lengths between GPUs, on-site power generation, etc.). OpenAI’s partner SoftBank has hinted at “reimagining how data centers are designed” for AI openai.com. One experimental approach being tried is building data centers adjacent to power plants. Meta’s Hyperion campus connecting directly to a nuclear plant is one example techcrunch.com. Elon Musk’s xAI took another approach by integrating a natural gas power plant into its data center in Memphis – though that drew controversy for emissions techcrunch.com. For OpenAI and Nvidia, ensuring a reliable power supply for 10 GW will be a monumental task. It likely means investing in energy infrastructure or partnerships with energy companies. The implication for energy markets is notable: AI data centers could meaningfully boost electricity demand in certain regions, potentially requiring new generation capacity (hopefully renewable or low-carbon to mitigate environmental impact).
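The link between rack density and facility size is a short calculation once power usage effectiveness (PUE, total facility power divided by IT power) is accounted for. The sketch below assumes a 1 GW campus, a PUE of 1.25, and two illustrative rack densities of 40 kW and 120 kW; none of these values come from OpenAI’s or Nvidia’s plans, and `racks_supported` is a hypothetical helper.

```python
import math


def racks_supported(facility_w: float, rack_it_w: float, pue: float) -> int:
    """Number of racks a facility's power budget can feed.

    PUE (power usage effectiveness) = total facility power / IT power,
    so only facility_w / pue is left for the racks themselves.
    """
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    it_budget_w = facility_w / pue
    return math.floor(it_budget_w / rack_it_w)


# Assumptions: a 1 GW campus, PUE of 1.25, and two illustrative rack densities.
for rack_kw in (40, 120):
    n = racks_supported(1e9, rack_kw * 1e3, pue=1.25)
    print(f"{rack_kw} kW racks per 1 GW campus: {n:,}")
```

Denser racks mean fewer racks per gigawatt, but each one then needs more concentrated power delivery and cooling, which is the engineering trade-off described above.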

With Nvidia effectively underwriting OpenAI’s compute expansion, one might wonder: will OpenAI still pursue its own custom AI chips? In 2024, there were reports that OpenAI was co-developing a specialized AI accelerator with Broadcom and TSMC, aiming to reduce its long-term dependence on Nvidia reuters.com. That effort was seen as a strategic hedge, giving OpenAI leverage in price negotiations. According to Reuters, OpenAI insiders say the Nvidia deal “does not change any of OpenAI’s ongoing compute plans, including…efforts to build its own chips” reuters.com. In other words, OpenAI may keep exploring custom silicon, but given the new alliance, any in-house chip would have to be extremely compelling to displace Nvidia’s offering. OpenAI may instead focus its custom chip efforts on longer-term, highly specialized needs while relying on Nvidia for the bulk of training. Either way, Nvidia’s investment secures its role in OpenAI’s ecosystem for years, making it less likely that OpenAI would fully switch to another supplier or its own chip. The AI hardware market thus looks set to remain Nvidia-centric, with other players either targeting niche applications or serving customers who can’t get Nvidia hardware due to supply constraints.

Finally, this race to build massive AI clusters could lead to consolidation in the cloud and data center market. Traditional cloud providers (the “hyperscalers” like AWS, Azure, and Google Cloud) now face competition from new coalitions (OpenAI + Oracle + Nvidia, etc.). If OpenAI’s supercomputers become among the largest in the world, OpenAI itself becomes a sort of cloud provider for its AI services. AI labs might eventually offer cloud access to their specialized infrastructure, blurring the line with commercial clouds. For example, OpenAI could decide to rent out some of its 10 GW capacity to enterprise customers for custom model training (much as OpenAI’s APIs let others use its models, but at the hardware level). This would pit OpenAI and Nvidia against the likes of Amazon and Microsoft in selling AI compute. Oracle’s ambition, meanwhile, is clearly to establish itself as a top-tier AI cloud player by hosting OpenAI’s workloads; success there could vault Oracle into closer rivalry with the big three clouds. In response, those incumbents may seek out their own marquee AI tenants (or acquire promising AI startups outright) to keep utilization high on their platforms. In sum, AI demand is reshaping the server industry: whoever has the most and best chips, in the most efficient data centers, will attract the best AI projects, which then attract more chips, a virtuous (for them) cycle. The Nvidia–OpenAI deal just accelerated that cycle significantly.

The Global AI Arms Race and Energy Challenge

At a broader level, this partnership highlights the intensifying global race for AI supremacy, not just among companies but among nations. High-end AI has effectively become a new form of strategic capital, and countries are keenly aware that leadership in AI could translate into economic power and military advantage. The U.S. is home to OpenAI, Nvidia, Microsoft, Google, Meta, and others leading this charge, and investments on the scale of the 10 GW project reinforce America’s edge. It’s telling that President Biden (in 2023) and President Trump (in 2025) both met with AI CEOs and supported initiatives to bolster U.S. AI infrastructure techcrunch.com. The Stargate project with SoftBank and Oracle was even pitched in terms of national security and the re-industrialization of America openai.com openai.com.

We now see analogous moves in allied countries. OpenAI’s Stargate UK partnership (announced September 16, 2025) will bring thousands of Nvidia GPUs to British soil so that the UK has sovereign AI compute for sensitive uses openai.com openai.com. Likewise, the European Union has been debating investments in large AI supercomputers (though nothing near the scale of Stargate yet) to reduce dependence on U.S. cloud providers. China, meanwhile, is pushing ahead on AI but faces headwinds from U.S. export controls, which have blocked Nvidia’s most powerful AI chips (such as the A100 and H100) from being sold to Chinese firms. Nvidia responded with cut-down versions for China (the A800 and H800, and, after those were also restricted in 2023, the H20), while Chinese companies like Huawei, Alibaba, and Baidu are racing to develop indigenous AI chips and large-scale computing centers of their own. There are reports of China building new government-backed AI supercomputers to support domestic AI model training, though details are scarce due to secrecy.

What’s clear is that computing power is the new high ground. Just as nuclear capability or supercomputers were strategic assets in past eras, the ability to train advanced AI models is now seen as critical. The Nvidia–OpenAI partnership essentially arms OpenAI (and the U.S.) with an order-of-magnitude leap in compute. It’s a move that will not go unnoticed in Beijing or other AI hubs, which can be expected to redouble efforts to close the gap, whether through their own mega-projects or by seeking novel approaches (like more efficient algorithms that do more with less compute).

The energy dimension of this arms race is equally important. Training and running giant AI models devours electricity. Jensen Huang, Nvidia’s CEO, estimated that globally “between $3 and $4 trillion will be spent on AI infrastructure by the end of the decade” techcrunch.com, and much of that will effectively be investment in power: power to run data centers and power to manufacture all the chips. Some regions with clusters of AI data centers are already feeling the strain on their power grids techcrunch.com. By one widely cited estimate, training GPT-3 alone consumed roughly as much electricity as more than a hundred U.S. households use in a year, and serving AI models to millions of users multiplies that further. Now consider OpenAI’s 10 GW plan: if fully realized and running near capacity, it could draw 10 billion watts continuously. Over a year, that’s roughly 87.6 terawatt-hours of electricity (10 GW × 8,760 hours), comparable to the annual electricity consumption of a mid-sized European country. Even if these facilities won’t run flat out 24/7, the power usage will still be massive, likely on the scale of major cities. This raises obvious environmental and sustainability questions. Where will this energy come from? If it comes from fossil fuels, the carbon footprint will be enormous (potentially tens of millions of tons of CO₂ per year if coal were used, for instance). Thankfully, the trend is toward cleaner energy: as noted, Meta is tapping nuclear for base load techcrunch.com, and many data center operators are investing in solar and wind or buying renewable energy credits to offset usage. OpenAI and Nvidia have not yet detailed their energy strategy, but given the forward-looking tone of their announcement, it would not be surprising if they commit to powering these new data centers with a high percentage of renewables or innovative solutions (perhaps advanced batteries or small modular reactors, as have been discussed in industry circles).
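The annual-energy arithmetic can be checked with a few lines of Python. This is a back-of-the-envelope sketch: the hypothetical `utilization` parameter (average fraction of capacity actually drawn) and an emissions intensity of roughly 1 kg of CO₂ per kWh, a round figure often used for coal-fired generation, are assumptions rather than numbers from OpenAI or Nvidia.

```python
HOURS_PER_YEAR = 8760  # 365 days x 24 hours (leap years ignored)


def annual_twh(capacity_gw: float, utilization: float = 1.0) -> float:
    """Energy drawn over one year, in terawatt-hours.

    GW x hours gives GWh; dividing by 1,000 converts GWh to TWh.
    `utilization` is the assumed average fraction of capacity in use.
    """
    return capacity_gw * utilization * HOURS_PER_YEAR / 1000


def annual_co2_mt(energy_twh: float, kg_co2_per_kwh: float) -> float:
    """Emissions in million tonnes (Mt) of CO2 for a given emissions intensity.

    1 TWh = 1e9 kWh and 1 Mt = 1e9 kg, so the unit factors cancel:
    Mt = energy_twh * 1e9 * kg_co2_per_kwh / 1e9 = energy_twh * kg_co2_per_kwh.
    """
    return energy_twh * kg_co2_per_kwh


if __name__ == "__main__":
    COAL_KG_PER_KWH = 1.0  # assumed round figure for coal-fired power
    for util in (1.0, 0.7):
        twh = annual_twh(10.0, util)
        mt = annual_co2_mt(twh, COAL_KG_PER_KWH)
        print(f"10 GW at {util:.0%} utilization: {twh:.1f} TWh/yr, "
              f"~{mt:.0f} Mt CO2/yr if all from coal")
```

At full utilization this reproduces the 87.6 TWh figure; even at an assumed 70% average draw, an all-coal supply would mean roughly 60 million tonnes of CO₂ a year, consistent with the tens-of-millions estimate.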

Policymakers and researchers are increasingly pushing for transparency in AI energy use, arguing that companies should disclose how much electricity and carbon is involved in training large models. This partnership could intensify those calls: with a project this large, there may be public pressure to ensure it is done in an environmentally responsible way. Energy-efficiency breakthroughs could be a positive side effect, for instance new cooling techniques or chip designs aimed at improving performance per watt. Nvidia’s architecture updates already emphasize efficiency gains (each new GPU generation tends to do more computing for the same energy), and on the software side, techniques like model optimization and better algorithms can cut power draw. It’s a paradox: AI has the potential to help tackle climate challenges (through smarter grid management, climate modeling, etc.), yet developing AI is energy-intensive and can contribute to emissions. The industry is well aware of this paradox, and there is a push for AI models that are not just more powerful but also more sustainable. OpenAI, for its part, has not talked much publicly about its carbon footprint, but Microsoft had claimed that Azure’s AI training for OpenAI was carbon-neutral (via offsets). Going forward, when a single project is the size of dozens or even hundreds of conventional data centers, stakeholders will demand real clean energy solutions, not just offsets.
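The link between chip efficiency and energy use comes down to division: for a fixed amount of computation, energy equals work divided by efficiency (operations per joule). The sketch below uses a hypothetical helper, `energy_mwh`, a hypothetical training workload of 1e24 floating-point operations, and hypothetical efficiencies; real figures depend on the chip, numeric precision, utilization, and cooling overhead, all of which are ignored here.

```python
JOULES_PER_MWH = 3.6e9  # 1 MWh = 1e6 W x 3,600 s


def energy_mwh(total_flop: float, flop_per_joule: float) -> float:
    """Energy in MWh to perform `total_flop` operations at a given efficiency.

    Simplifying assumption: ignores idle time, networking, and cooling overhead.
    """
    return total_flop / flop_per_joule / JOULES_PER_MWH


if __name__ == "__main__":
    WORKLOAD_FLOP = 1e24  # hypothetical training run
    # Hypothetical efficiencies: a baseline chip and one with 2x perf/watt.
    for label, eff in (("baseline", 1e12), ("2x perf/watt", 2e12)):
        print(f"{label}: {energy_mwh(WORKLOAD_FLOP, eff):,.0f} MWh")
```

Because energy scales inversely with performance per watt, doubling efficiency halves the energy needed for the same job, which is why generation-over-generation gains matter at the 10 GW scale.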

In the global context, energy availability could even shape where AI hubs emerge. Countries with abundant cheap electricity (like regions with hydropower or geothermal energy) could become attractive locales for AI data centers. Already, Iceland and Norway have marketed their renewable energy and cool climates for data center hosting. OpenAI’s need for 10 GW could lead it to consider such places if the U.S. grid or land availability is a bottleneck – unless political considerations keep most of it on U.S. soil for security reasons. As noted, the UK will host some GPUs for OpenAI’s government-focused workloads openai.com openai.com, and perhaps other allies (Canada? EU states?) might similarly offer to host parts of OpenAI’s infrastructure with green energy guarantees.

Ultimately, the Nvidia–OpenAI partnership encapsulates the dual promise and challenge of the AI era: unprecedented technological capability at unprecedented scale. On one hand, it paves the way for AI systems far more powerful and useful than today’s – enabling breakthroughs in science, medicine, education, and beyond. On the other, it concentrates a lot of power (both computational and corporate) in a few hands and carries significant economic and environmental costs. The coming years will be a delicate balancing act: fostering the innovation that such compute enables, while ensuring it’s accessible and sustainable. As OpenAI and Nvidia put it, they aim to “advance [OpenAI’s] mission to build AGI that benefits all of humanity” openai.com. The world will be watching to see if this immense investment indeed yields benefits that justify the resources – and if the race for AI unfolds as a healthy competition or a headlong rush that leaves policy and ethics struggling to catch up.

Sources:

- OpenAI & Nvidia joint press release (Sept 22, 2025) openai.com openai.com 8

- Reuters – “Nvidia to invest $100 billion in OpenAI as AI datacenter competition intensifies” reuters.com reuters.com 9

- TechCrunch – “Nvidia plans to invest up to $100B in OpenAI” techcrunch.com techcrunch.com; “The billion-dollar infrastructure deals powering the AI boom” techcrunch.com techcrunch.com 4

- Bloomberg – “Nvidia to Invest $100 Billion in OpenAI in Data Center Push” 10

- OpenAI – “Announcing the Stargate Project” (Jan 2025) openai.com openai.com; “Stargate advances with 4.5 GW partnership with Oracle” (July 2025) openai.com openai.com; “Introducing Stargate UK” (Sept 2025) openai.com 11

- Reuters – “Oracle… $500 billion Stargate project” (Sept 22, 2025) reuters.com; analyst comment on Nvidia/OpenAI deal reuters.com; OpenAI’s chip efforts reuters.com; antitrust context 12