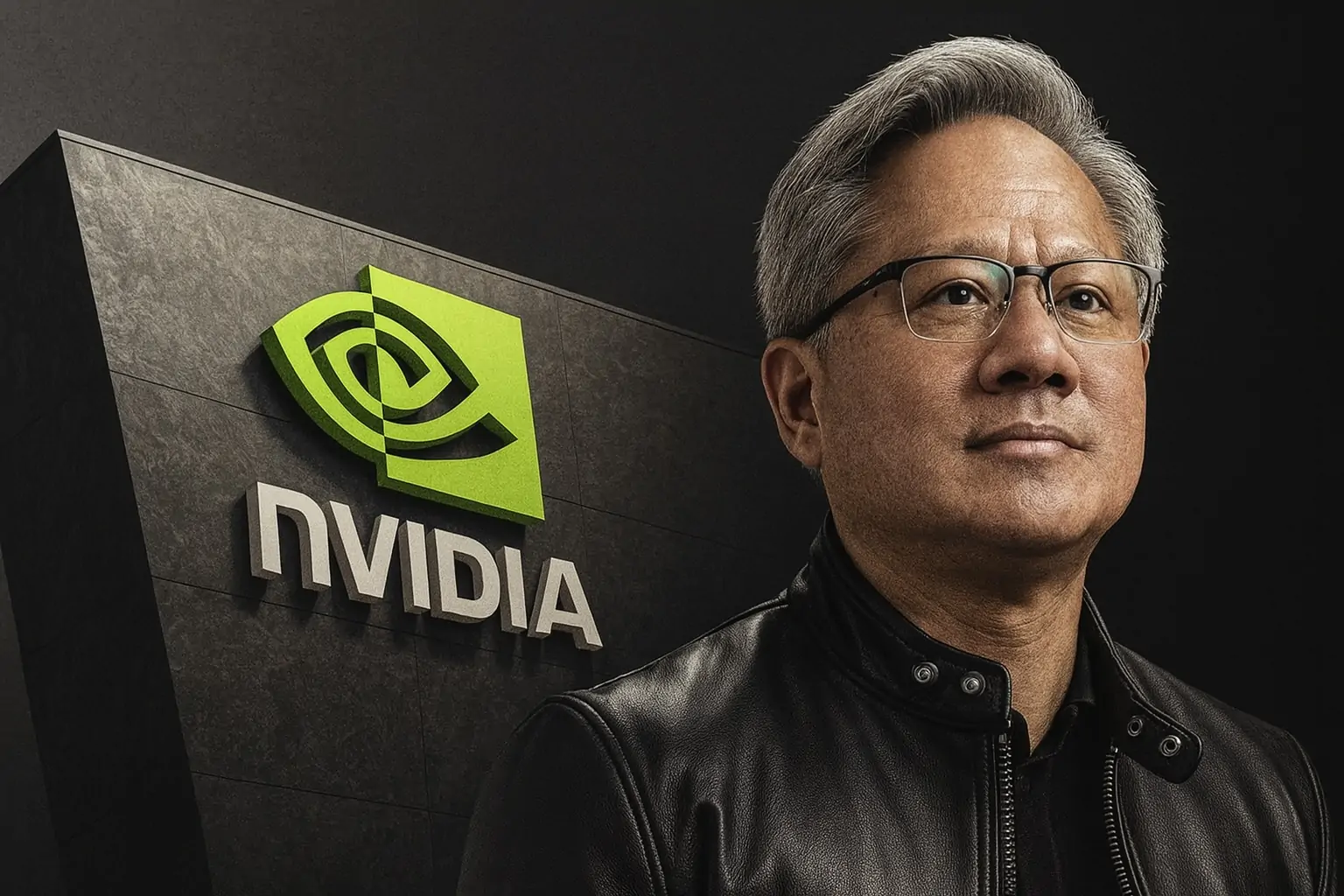

- AI’s “iPhone Moment”: Nvidia CEO Jensen Huang says the launch of consumer-friendly AI (like ChatGPT) is the “iPhone moment” for artificial intelligence nvidianews.nvidia.com. In other words, AI has reached a watershed moment – akin to when the iPhone made smartphones ubiquitous – that will trigger a boom of new AI products and services.

- Nvidia’s Dominance: Nvidia is at the center of this AI revolution. Its data-center GPUs power the vast majority of today’s AI models. In the quarter ended July 2025 (its fiscal Q2 2026), Nvidia reported $46.7 billion in total revenue (up 56% year‑over‑year), with $41.1 billion coming from its Data Center segment manufacturingdive.com manufacturingdive.com. That means Data Center sales, driven overwhelmingly by AI, accounted for roughly 88% of company revenue, underscoring how hard companies are racing to buy Nvidia hardware for AI data centers.

- Insatiable Demand: Tech giants (Microsoft, Amazon, Google, Meta, etc.) are ramping up Nvidia-powered AI infrastructure. According to Nvidia, customers like Microsoft Azure, AWS and Google Cloud are integrating its GPUs into their clouds manufacturingdive.com nvidianews.nvidia.com. One analyst notes that demand “has been far outpacing supply” for both Nvidia and AMD chips reuters.com. In fact, Morgan Stanley analysts reported in late 2024 that “the entire 2025 production” of Nvidia’s latest “Blackwell” AI chips was already sold out 1.

- Hardware + Software Ecosystem: Nvidia didn’t just build fast chips; it built a full AI stack. Its CUDA programming platform (with over 4 million developers) and AI frameworks lock in customers markets.financialcontent.com. For example, Nvidia released TensorRT-LLM in 2023 to optimize large-language-model (LLM) inference, claiming up to 8× faster performance on LLM tasks markets.financialcontent.com. In short, startups and enterprises can plug into Nvidia’s GPUs and software to train or run generative AI models more easily than ever.

- Competitive Landscape: Rivals are scrambling to catch up. AMD is rolling out new Instinct accelerators to challenge Nvidia’s Blackwell GPUs, with mass production of its MI325X starting in late 2024 and its successor, the MI350 series, following in the second half of 2025 reuters.com. Even so, AMD’s data-center sales remain small by comparison ($3.2B in Q2 2025) reuters.com. Hyperscale cloud providers are also designing their own chips (Google’s TPUs, Amazon’s Trainium, Meta’s AI accelerators) to reduce their reliance on Nvidia markets.financialcontent.com. And Intel offers its Gaudi AI accelerators, but it still holds only a sliver of the market compared to Nvidia.

- Industry Partnerships: Nvidia is teaming up across the industry. Its DGX Cloud service, essentially AI supercomputers on demand, launched in March 2023 on Oracle Cloud, with Azure and Google Cloud announced to follow nvidianews.nvidia.com. Hardware partners like HPE, Dell, Cisco and others are building “AI factories” around Nvidia tech (for example, HPE’s new Blackwell-based AI systems) hpe.com. Even national initiatives are forming: Nvidia and U.K. partners (CoreWeave, Nscale, etc.) plan to deploy 120,000 Nvidia GPUs in new British AI data centers by 2026 nvidianews.nvidia.com. In one recent deal, Nvidia agreed to buy any unsold capacity from its cloud partner CoreWeave, a $6.3B order that helps keep CoreWeave’s AI data centers busy reuters.com 2.

- Expert Perspectives: Nvidia executives stress the seismic impact. CFO Colette Kress said this is “the beginning of an industrial revolution that will transform every industry,” with an estimated $3–4 trillion in AI infrastructure spending by 2030 manufacturingdive.com. Jensen Huang has echoed this, describing “a new industrial era — one defined by the ability to generate intelligence at scale.” hpe.com. HPE CEO Antonio Neri similarly said that new forms of AI, including generative AI, “have the potential to transform global productivity,” but only if companies build the right infrastructure 3.
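As a quick arithmetic check on the 88% figure, the short Python sketch below uses only the revenue numbers quoted in the list above. The implied year-ago revenue is a derived estimate, not a reported figure, and it is only approximate because the 56% growth rate is itself rounded.

```python
# Figures as quoted in the key points above (USD billions).
total_revenue = 46.7        # Nvidia total revenue for the quarter
data_center_revenue = 41.1  # Data Center segment revenue
yoy_growth = 0.56           # reported 56% year-over-year growth (rounded)

# Share of total revenue that came from the Data Center segment.
data_center_share = data_center_revenue / total_revenue

# Revenue one year earlier implied by the growth rate: current / (1 + growth).
# This is an estimate derived from a rounded percentage, not a reported number.
implied_prior_year = total_revenue / (1 + yoy_growth)

print(f"Data Center share: {data_center_share:.1%}")              # Data Center share: 88.0%
print(f"Implied year-ago revenue: ${implied_prior_year:.1f}B")    # Implied year-ago revenue: $29.9B
```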

What Is the “iPhone Moment” of AI?

Huang’s “iPhone moment” analogy captures the idea that generative AI (like ChatGPT) has suddenly become a consumer and enterprise phenomenon. Just as the iPhone in 2007 turned smartphones into a mass-market platform (spurring new apps, services and industries), ChatGPT and related AI tools in 2022-2023 have made AI easy and compelling for ordinary users. “Startups are racing to build disruptive products and business models,” Huang said at Nvidia’s 2023 GTC conference, “and incumbents are looking to respond.” nvidianews.nvidia.com. The implication: we’re entering a phase where AI moves out of niche labs and into everyday applications (customer service bots, image generators, etc.), triggering a rapid wave of innovation.

Many analysts share this view, and Huang has returned to it repeatedly. The unprecedented interest in ChatGPT “woke up the entire industry,” he said in an interview with Stratechery stratechery.com. Venture capital is pouring into dozens of AI startups, and every tech firm is asking how AI could affect its business. In Huang’s words, “there’s never been anything like it” in all his time in the business stratechery.com. The upshot: AI has entered a viral, self-reinforcing loop of awareness and investment, hence the “big bang” Huang now describes 4.

Nvidia at the Heart of AI

All this excitement means Nvidia sits at a strategic crossroads. The company’s GPUs (graphics processors adapted for AI) and related software are the engines driving large AI models. Over the past few years, Nvidia pivoted from gaming and graphics into deep learning; today it’s essentially the Intel/ARM of AI computing. As Huang likes to say, “we’ve been pioneering GPU computing for over the last decade,” and that work has been “turbocharged” by AI 5.

In practice, Nvidia’s H100 (Hopper) GPUs and newer Blackwell (GB200/GB300) systems offer the massive memory and compute that generative AI requires. GPT-3 was trained on earlier Nvidia V100 GPUs and GPT-4 reportedly on A100s; newer models increasingly rely on H100 and Blackwell-generation systems with advanced interconnects. These chips are scarce: lead times from order to delivery can exceed a year. By one estimate, about 500,000 H100 cards were sold in Q3 2023 alone en.wikipedia.org, and Blackwell production remains booked solid through 2025 1.

But chips are only half the story. Nvidia built an entire software stack: CUDA for programming GPUs (now a de‑facto standard), TensorRT libraries for inference, cuDNN for neural nets, the NVIDIA AI Enterprise software suite, and even industry-specific tools (like BioNeMo for biotech). These attract customers because it’s easier to optimize models for Nvidia hardware than to port them to a competitor. One analysis notes that the CUDA ecosystem, with millions of developers and optimized libraries, creates a lock-in that’s hard to break 6.

Nvidia also innovates in AI software. For instance, in late 2023 it launched TensorRT-LLM, a library specifically for large language models. Nvidia touts that it can make some AI inference tasks “up to 8× faster” by fusing operations and using lower-precision math markets.financialcontent.com. In short, whether you’re training a new model or running inference (answering queries), Nvidia’s chips and software are often the fastest and most efficient choice. This gives Nvidia a virtuous cycle: as more models are built on Nvidia technology, more developers optimize for it, which in turn attracts more customers.
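To make “lower-precision math” concrete, here is a generic sketch of symmetric 8-bit integer (INT8) quantization in plain Python. It illustrates the general technique only and is not TensorRT-LLM’s actual implementation; the function names and sample values are hypothetical. Each list of floats is mapped to integers in [-127, 127] with one shared scale, the dot product is accumulated on those integers, and a single rescale at the end recovers an approximate floating-point answer.

```python
def quantize_int8(values):
    """Symmetric per-tensor INT8 quantization (illustrative sketch, hypothetical helper).

    Maps each float to an integer in [-127, 127] using one shared scale factor.
    """
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def int8_dot(qa, scale_a, qb, scale_b):
    """Dot product computed on quantized integers, rescaled once at the end."""
    # INT8 hardware typically accumulates into a wider (e.g. 32-bit) integer;
    # Python ints are unbounded, so overflow is not modeled here.
    acc = sum(x * y for x, y in zip(qa, qb))
    return acc * scale_a * scale_b


# Hypothetical sample values (not taken from any real model).
weights = [0.37, -1.12, 0.058, 0.83]
activations = [0.91, 0.47, -0.22, 1.73]

q_w, s_w = quantize_int8(weights)
q_a, s_a = quantize_int8(activations)

exact = sum(w * a for w, a in zip(weights, activations))  # full-precision reference
approx = int8_dot(q_w, s_w, q_a, s_a)                     # quantized approximation
print(f"float dot = {exact:.4f}, int8 dot = {approx:.4f}")
```

The integer result lands close to, but not exactly on, the full-precision value. On real GPUs the speedup comes from moving and multiplying 8-bit values instead of 16- or 32-bit ones, which this pure-Python sketch does not capture.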

Chip Competition and Global Race

Despite Nvidia’s lead, the AI chip market is heating up. AMD and Intel (and startups) are aggressively pushing their own accelerators. AMD’s Instinct MI300 series (used by Meta and others) is now shipping, and AMD is on track to release the MI350 chips in the second half of 2025 reuters.com. These new AMD chips add memory and use a redesigned architecture to narrow the performance gap with Nvidia’s Blackwell. However, AMD’s data-center sales remain small by comparison. In Q2 2025, for example, AMD’s entire data-center revenue was only about $3.2 billion reuters.com, versus Nvidia’s $41.1 billion in its comparable quarter. As one analyst puts it, Nvidia still “remains a predominant winner” while AMD is the “clear #2” trying to chip away 7.
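For a sense of scale, the snippet below divides the two data-center figures quoted above. Two caveats that the code does not correct for: AMD’s Data Center segment also includes its EPYC server CPUs, so it overstates AMD’s AI-specific sales, and the two companies’ fiscal quarters are offset by about a month.

```python
# Quarterly data-center revenue figures quoted above (USD billions).
nvidia_data_center = 41.1  # Nvidia Data Center segment, quarter ended July 2025
amd_data_center = 3.2      # AMD Data Center segment, quarter ended June 2025

# How many times larger Nvidia's segment is than AMD's.
ratio = nvidia_data_center / amd_data_center

# Nvidia's share of the two companies' combined total. This ignores every
# other vendor, so it is NOT an overall market-share estimate.
share_of_pair = nvidia_data_center / (nvidia_data_center + amd_data_center)

print(f"Nvidia/AMD ratio: {ratio:.1f}x")                 # Nvidia/AMD ratio: 12.8x
print(f"Nvidia share of the pair: {share_of_pair:.0%}")  # Nvidia share of the pair: 93%
```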

Beyond AMD, big cloud players are entering the fray. Google uses its in-house Tensor Processing Units (TPUs), Meta is developing its own AI chips, and Amazon offers AWS Trainium to customers. Microsoft has unveiled its own Azure silicon as well: the Maia AI accelerator and the Cobalt Arm-based CPU. The motivation is greater control over cost and performance. As Nvidia’s competitors note, these custom chips can be optimized for specific workloads, even if they lack the generality of Nvidia’s GPUs 8.

There’s also a geopolitical dimension. Starting in 2022, the U.S. restricted exports of Nvidia’s most advanced GPUs to China (first the A100 and H100, later Blackwell). Nvidia responded by creating a scaled-down H20 chip just for China, but even that ran into licensing issues. By mid-2025, President Trump signaled he might allow weaker versions of Blackwell chips into China. Reuters reports that Nvidia is internally developing a new single-die Blackwell chip (code-named B30A) that is less powerful than the full dual-die flagship reuters.com reuters.com. “Of course [Huang] would like to sell a new chip to China,” US Commerce Secretary Howard Lutnick said recently reuters.com. However, any Chinese sales now may require Nvidia and AMD to hand over about 15% of revenue from those chips to the US government, an unprecedented arrangement reported in August 2025 reuters.com. This shows how national security concerns have become intertwined with the AI chip race.

Building AI Infrastructure Around the World

As AI demand surges, companies are forming partnerships to build the infrastructure. Nvidia’s DGX Cloud, launched in March 2023, is one example. It offers businesses instant, pay-as-you-go access to Nvidia supercomputers over the web nvidianews.nvidia.com. Oracle Cloud was the first to host DGX Cloud, with Microsoft Azure and Google Cloud announced to follow nvidianews.nvidia.com. This service lets even small firms rent high-end Nvidia GPU clusters without buying the hardware themselves.

Hardware vendors are also teaming up. Hewlett Packard Enterprise (HPE) and Dell have unveiled “AI factory” solutions built around Nvidia chips and software. For instance, in mid-2025 HPE announced AI systems featuring Nvidia’s newest RTX Pro and Blackwell Ultra GPUs, plus networking and orchestration tools tweaktown.com tweaktown.com. HPE CEO Antonio Neri said that new forms of AI, including generative AI, “have the potential to transform global productivity,” but that “AI is only as good as the infrastructure and data behind it” hpe.com. He and Huang stressed that their joint full-stack hardware and software offerings will help companies deploy AI more quickly 3.

On a national level, Nvidia is even partnering with governments. In the UK, for example, Nvidia announced a multi-billion-dollar program with local cloud providers (Nscale, CoreWeave) and the British government. By the end of 2026, they plan to operate AI data centers with 120,000 Nvidia GPUs, backed by roughly £11 billion of investment nvidianews.nvidia.com. (These centers are expected to host advanced models, including OpenAI’s GPT-5.) During a September 2025 event, UK Prime Minister Keir Starmer touted these plans as making the UK a “world leader in AI” and creating new jobs and innovation nvidianews.nvidia.com nvidianews.nvidia.com. Huang himself said, “We are at the big bang of intelligence,” adding that the UK’s ecosystem is “uniquely positioned to thrive in the age of AI” 4.

Similarly, US companies and universities are building AI clusters on Nvidia tech. Nvidia-backed CoreWeave secured a $6.3 billion deal (Sept 2025) under which Nvidia will buy any unsold GPU capacity through 2032 reuters.com reuters.com. That helps underwrite CoreWeave’s expansion of Nvidia-powered data centers for AI workloads. Nvidia has also announced plans to invest up to $100 billion in OpenAI over time as OpenAI deploys Nvidia systems, tying two AI champions closely together.

Expert Analysis and Outlook

Industry experts see Nvidia’s role as dominant but note that the landscape is evolving. Nvidia executives describe this phase as revolutionary. CFO Colette Kress stated flatly that we are “at the beginning of an industrial revolution” in computing, forecasting $3–4 trillion in AI infrastructure spending by 2030 manufacturingdive.com. Jensen Huang echoes this optimism, saying the new era is defined by “the ability to generate intelligence at scale” hpe.com. Both compare today’s excitement to past tech booms.

Analysts point out that, despite the hype, Nvidia still faces challenges. Competitors like AMD and Intel are making inroads (e.g. AMD’s new MI350 chips, Intel’s Gaudi line) and could capture niche workloads. Cloud providers’ custom chips might gradually reduce Nvidia’s share in inference tasks. But in the near term, most experts agree Nvidia’s ecosystem gives it a lasting edge. As one analysis noted, Nvidia’s “market share [in AI GPUs] remains significantly larger” than AMD’s markets.financialcontent.com. Another factor is software momentum: the vast CUDA user base and Nvidia’s push into areas like robotics, healthcare, and autonomous driving (all using its platforms) deepen the lock-in.

For the general public, the key takeaway is that AI is entering mainstream use – much like smartphones did 15 years ago – and Nvidia’s technology is fueling that growth. Huang’s “iPhone moment” metaphor suggests we will see AI apps and services proliferate in the coming years. But just as Apple’s App Store created a new economy of developers and tools around the iPhone, the AI era will spawn new companies, jobs (even outside tech – Huang says electricians and technicians will be in high demand), and ethical debates. In Huang’s words, “We are entering a new industrial era.” How society adapts to this “big bang of intelligence” will be the story of the next decade.

Sources: Executive interviews and financial reports (Nvidia, Reuters, Manufacturing Dive, etc.) and industry analysis nvidianews.nvidia.com manufacturingdive.com manufacturingdive.com hpe.com reuters.com reuters.com reuters.com markets.financialcontent.com. These include statements by Nvidia’s CEO and CFO, partner announcements, and data from official earnings releases.