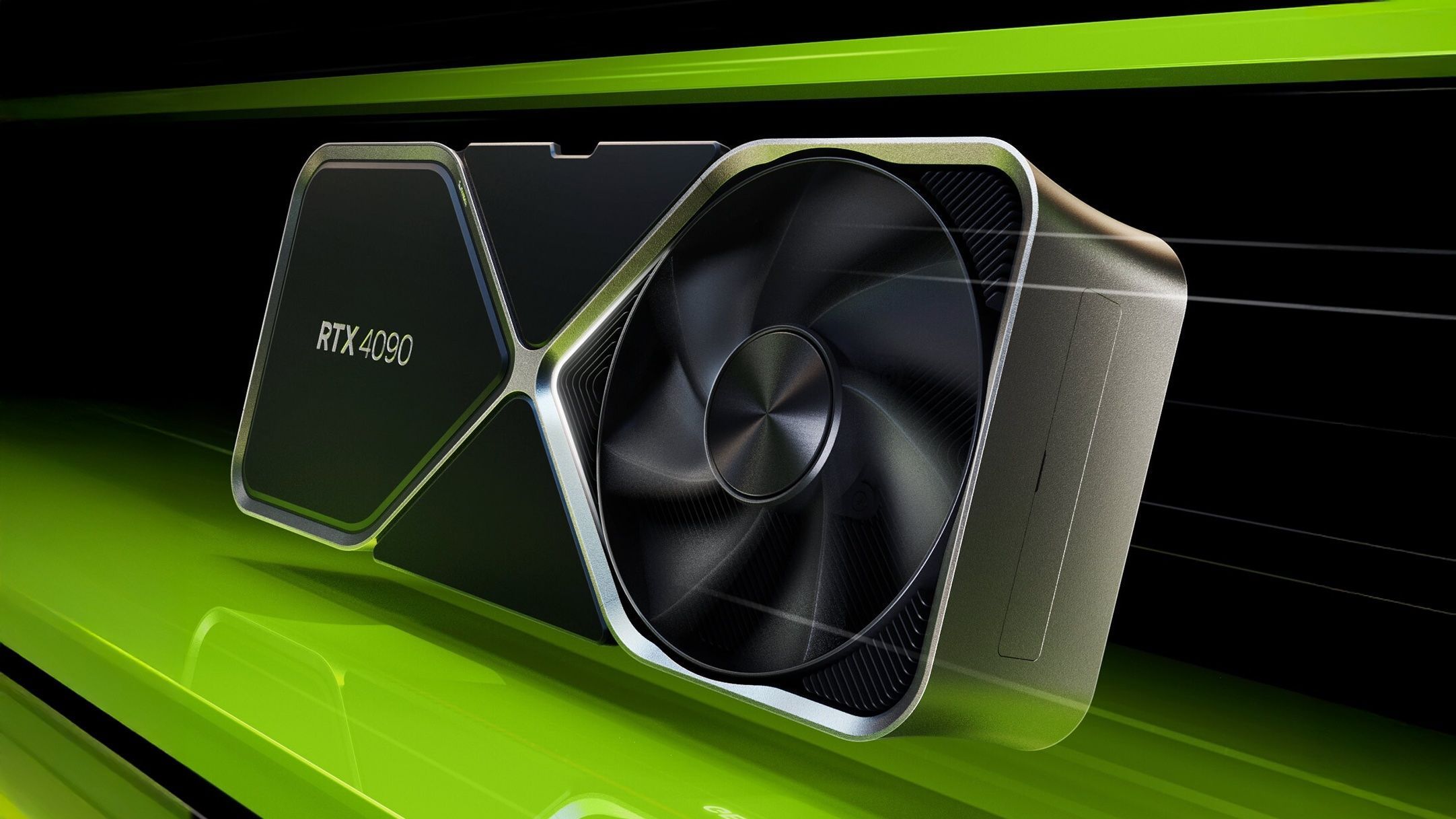

- The GeForce RTX 4090 is the fastest gaming GPU in mid-2025, featuring 24 GB of GDDR6X, 3rd-gen RT cores, and 4th-gen Tensor cores; it launched in 2022 at $1,599.

- NVIDIA released the RTX 5090 in early 2025 as a halo card, but its supply is so limited that the RTX 4090 remains the practical top-end for most buyers.

- AMD’s Radeon RX 7900 XTX uses a chiplet RDNA3 design, includes 24 GB of GDDR6 memory, carries about 355 W board power, and is priced at $999.

- The RX 7900 XTX often trades blows with the RTX 4080, sometimes outperforming the 4080 in rasterization while undercutting it by about $200.

- In the mid-range, the RX 7800 XT is around $500 and offers strong raster performance, while the RX 7600 8GB around $269 targets 1080p gaming.

- Intel’s Arc A770 16GB delivers performance comparable to an RTX 3060/3060 Ti in many games after driver improvements, and the Arc B580 launched in late 2024 is around $350 and reportedly faster than the RTX 4070 in some tests.

- NVIDIA’s DLSS 3 Frame Generation, exclusive to RTX 40-series, can double frame rates in CPU-limited or RT-heavy scenarios and is supported by over 50 games.

- AMD’s FSR 3 Fluid Motion Frames is rolling out to provide frame generation across GPUs, complementing the existing FSR 2, which is GPU-agnostic.

- All three vendors support AV1 encoding: Intel Arc was first to market with AV1 encoding, NVIDIA added dual AV1 encoders on the 40-series, and AMD introduced AV1 encoding on the RX 7000 series.

- By mid-2025, new generations are arriving: NVIDIA’s RTX 50-series (“Blackwell”) and AMD’s RX 9000-series (RDNA 4) have begun rolling out, while Intel’s Arc Battlemage expansion (B580/B570) continues amid supply constraints and persistent price premiums.

The graphics card arena in mid-2025 is more dynamic than ever. Three major players – NVIDIA, AMD, and Intel – are vying for supremacy across gaming, content creation, and even artificial intelligence. Each brings its own philosophy: NVIDIA pushes cutting-edge performance and features (at premium prices), AMD focuses on value and high efficiency, and Intel’s newcomer Arc GPUs aim to disrupt the budget and mid-range market. In this comprehensive comparison, we’ll break down the latest GPU line-ups (like NVIDIA’s RTX 40-series, AMD’s RX 7000-series, and Intel’s Arc series), examine how they stack up in performance, value, power efficiency, and features, and highlight recent developments and controversies shaping the GPU landscape in 2025. Whether you’re a gamer, creator, or casual user, this guide will help you understand the state of GPUs in 2025 in clear, accessible terms.

The Big Three GPU Manufacturers in 2025

NVIDIA – The Performance Leader (and Price Leader): NVIDIA continues to dominate the high-end GPU market. Its GeForce RTX 40-series (“Ada Lovelace” architecture) cards, launched in 2022–2023, set a new bar for performance and features. The flagship RTX 4090 in particular is the undisputed performance champion – Tom’s Hardware dubbed it the “fastest GPU currently available,” albeit with an “extreme” price tag and power draw tomshardware.com tomshardware.com. With 24GB of fast GDDR6X memory and ground-breaking capabilities like 3rd-gen ray tracing cores and 4th-gen Tensor (AI) cores, the RTX 4090 easily outpaces last generation cards by 50% or more in many games tomshardware.com. NVIDIA’s strength isn’t just raw power; it leads in advanced features (we’ll cover those soon) and a robust software ecosystem. However, this leadership comes at a cost – literally. NVIDIA cards often carry a price premium at every tier. For example, the RTX 4090 launched at $1,599 MSRP thepcenthusiast.com, and even mid-range cards like the RTX 4070 Ti debuted at $799 after a controversial rebranding (more on that later) tomshardware.com. Still, NVIDIA’s reputation for top-tier performance, stable drivers, and broad support in games and professional apps makes their GPUs a go-to for those who want the very best – and are willing to pay for it.

AMD – The Value Contender and Efficiency Champ: AMD’s Radeon RX 7000-series (RDNA 3 architecture) has proven that the company can spar with NVIDIA on performance while often undercutting on price. The flagship Radeon RX 7900 XTX arrived with impressive credentials: a chiplet-based GPU design (a first in consumer GPUs) and 24GB of GDDR6 memory at a $999 price, hundreds less than NVIDIA’s rival thepcenthusiast.com. In traditional rasterized gaming (i.e. non-ray-traced rendering), the 7900 XTX and its RDNA 3 architecture deliver “impressive rasterization performance” on par with NVIDIA’s top cards tomshardware.com. Tom’s Hardware lauded these Radeon GPUs for “excellent efficiency gains” and an “innovative chiplet design,” highlighting AMD’s progress in performance-per-watt tomshardware.com. Indeed, with a board power of about 355W on the 7900 XTX (versus 450W for an RTX 4090) thepcenthusiast.com, AMD achieves a slightly better efficiency score in some tests bestvaluegpu.com, meaning you get strong frame rates without as much heat and power draw. AMD has positioned its cards to offer better price-to-performance in many segments – for instance, the 7900 XTX often trades blows with NVIDIA’s $1,199 RTX 4080 while costing ~$200 less, and the mid-tier RX 7800 XT (around $500) competes well against pricier NVIDIA counterparts. However, AMD’s GPUs do have some weaknesses: ray tracing performance, while improved over the prior generation, “remains a second-class citizen” for RDNA 3 – AMD still “can’t touch NVIDIA in DXR (ray-traced) games,” as Tom’s Hardware puts it bluntly tomshardware.com. And although AMD’s FidelityFX Super Resolution (FSR) upscaling technology is useful, it lacks an answer to NVIDIA’s AI-driven DLSS 3 frame generation (more on that in Features below) thepcenthusiast.com. 
In short, AMD in 2025 is the brand to watch for those seeking high performance per dollar spent, with the caveat that you might be trading away some bleeding-edge features and ray tracing prowess in the process.

Intel – The New Challenger (Arc GPUs): In 2025, Intel’s Arc graphics are carving out a niche as a third option for GPU buyers, primarily in the budget and mid-range segments. Intel’s first-generation Arc Alchemist cards (the Arc A-series like the A750 and A770 launched in late 2022) had a rocky start with driver issues, but persistent improvements have made them quite compelling for value seekers. “Intel Arc software has improved dramatically” over the past year, notes Gamers Nexus, though it “remains a largely manual effort” at times, and the Arc hardware “has always been good when it works.” gamersnexus.net This means early quirks (game compatibility, driver overhead in older DirectX 9/11 titles, etc.) are gradually being solved – Intel even delivered ~40% performance uplifts in some scenarios through driver updates pugetsystems.com techspot.com. The flagship of Intel’s first gen, the Arc A770 16GB, can handle 1080p and 1440p gaming reasonably well – roughly comparable to a NVIDIA RTX 3060 or 3060 Ti in many games after the driver fixes. Where Intel really shines is value: the Arc cards often offer more VRAM for the price and came to market aggressively priced (the A750 8GB dropped to as low as ~$200). By mid-2025, Intel is moving on to its second-generation Arc “Battlemage” GPUs. The new Arc B580 card (launched in late 2024) is “Intel’s top solution” so far and targets the mainstream segment tomshardware.com. According to Tom’s Hardware, the Arc B580 is actually “faster than the RTX 4070” in performance while “ostensibly costing less than either [RTX 4070 or AMD RX 7600 XT],” although limited supply has pushed street prices to around $350 tomshardware.com. That puts Intel in an intriguing position: offering a GPU with near-high-end performance for a mid-range price. Intel also led the industry in adopting modern features like AV1 encode (great for streamers) and has its own AI-upscaling tool (XeSS). 
The big question for Intel is stability and support – drivers are much better now but still not as “plug and play” as NVIDIA or AMD. As Gamers Nexus remarks, Arc went from “for patient enthusiasts with troubleshooting experience” to something closer to mainstream-friendly, but day-one game support can still lag behind gamersnexus.net. In ecosystem terms, Intel is the newcomer still earning its stripes, but its presence alone has increased competition (and often lowered prices) in the GPU market, which is a win for consumers.

Summary: NVIDIA brings the highest performance and most features (at the highest cost), AMD offers competitive performance with better value and efficiency (while working to catch up on fancy features), and Intel provides budget-friendly alternatives and fresh innovation (tempered by the growing pains of a new ecosystem). Now, let’s dive deeper into head-to-head comparisons in critical areas like performance, pricing, power, features, and real-world use.

Raw Performance Showdown: Gaming and Compute

When it comes to raw performance in games and compute workloads, NVIDIA still holds the crown – but AMD is not far behind, and each brand has its strengths at different price tiers.

- Flagship Gaming Performance: NVIDIA’s GeForce RTX 4090 remains the fastest GPU on the planet for gaming in mid-2025, particularly at high resolutions and with ray tracing enabled. It often outpaces AMD’s top card (RX 7900 XTX) by a significant margin in cutting-edge games. For example, in pure rasterized 4K gaming (no ray tracing), the 4090 might be about 20–30% faster than the 7900 XTX on average thepcenthusiast.com thepcenthusiast.com. TechSpot’s extensive benchmarks found the 7900 XTX can come within ~10-17% of the RTX 4090’s fps in some 4K games when ray tracing is off techspot.com techspot.com – an impressive showing for AMD given the lower price. However, once ray tracing is enabled, the gap widens dramatically: the RTX 4090 can be 50%+ faster than the 7900 XTX in heavy RT scenarios techspot.com techspot.com. In one example with Cyberpunk 2077’s RT Ultra mode, the 7900 XTX was rendering ~41 fps while the 4090 achieved much higher framerates, making the AMD card “62% slower” (or conversely, the RTX 4090 161% faster in that test) techspot.com. Clearly, if maximum performance and ray-traced visuals are your priority (and money is no object), NVIDIA’s flagship still reigns supreme. It’s worth noting that NVIDIA has even launched a new halo card, the GeForce RTX 5090, in early 2025 – but it’s in such limited supply that for most consumers the 4090 remains the practical top-end option tomshardware.com. AMD’s philosophy with RDNA 3 was to compete just below the very top: AMD actually stated it “doesn’t feel the need to compete directly” against a $1600 SKU like the 4090 tomshardware.com. Instead, AMD aimed to beat the RTX 4080 – and it largely succeeded. The Radeon RX 7900 XTX trades blows with the RTX 4080 (which it undercuts by ~$200), often outperforming the 4080 in traditional rendering by a small margin tomsguide.com, although the 4080 still leads in ray tracing. 
For extreme high-end gamers, this means you have a choice: go NVIDIA for the absolute best performance (especially in RT), or go AMD for nearly top-tier frame rates at a lower cost. Meanwhile, Intel doesn’t play in this ultra-enthusiast space at all – there’s no Intel Arc card that can challenge a 4080 or 4090. The strongest Arc (B580) is more of an upper-midrange performer, roughly comparable to a NVIDIA RTX 4070/Ti class in raw fps tomshardware.com. In summary, NVIDIA leads the flagship performance race, AMD’s flagship is close enough to be a great value alternative for high-end gaming, and Intel’s flagship is aiming at the mid-tier gaming segment.

- Mid-Range and Budget Performance: Most gamers buy mid-range GPUs, and here the competition between brands is fierce. In the $300–$600 range, NVIDIA offers the likes of the RTX 4060 Ti, 4070, and 4070 Ti; AMD offers the RX 7600, 7700 XT, 7800 XT; and Intel has the Arc A770 (and new B-series cards starting to appear). For 1080p or 1440p gaming, all three brands have viable options, but their strengths differ. AMD’s mid-range Radeons tend to have higher raw raster performance and more VRAM for the dollar – for instance, the Radeon RX 7800 XT (around $499) comes with 16GB VRAM and strong 1440p performance, often beating NVIDIA’s 12GB RTX 4070 ($599) in non-ray-traced games. NVIDIA’s advantage in this class lies in features and in ray tracing performance; the GeForce RTX 4070 can outperform an RX 7800 XT or 7700 XT significantly when ray tracing is cranked up, thanks to NVIDIA’s more advanced RT cores. Intel’s Arc A750/A770 were appealing budget contenders, matching or exceeding similarly priced cards (like the NVIDIA RTX 3050/3060 or AMD RX 6600) in many modern games. After driver updates, an Arc A750 8GB (often on sale ~$200) is comparable to a ~$300 NVIDIA RTX 3060 in performance – Gamers Nexus even noted Arc was a “strong value” offering for budget builds gamersnexus.net. The new Arc B580 at ~$350 claims performance above a $600 RTX 4070 tomshardware.com, though again ray tracing and some older games may temper that lead. One key mid-range battle has been over VRAM and future-proofing: NVIDIA drew criticism for releasing cards like the RTX 4060 Ti with only 8GB VRAM at $399, which TechSpot called “shockingly poor value” for a 2023 midrange GPU techspot.com. Enthusiasts pointed out that 8GB is already barely enough for some new games at high settings. AMD capitalized on this by equipping cards like the RX 7700 XT and 7800 XT with 12GB+ VRAM, and Intel’s Arc A770 came with a hefty 16GB for under $350. 
This means that in certain current or future games, those AMD and Intel cards won’t run into the memory limitations that an 8GB NVIDIA card might. In general, AMD currently offers the best price-to-performance in the mid-tier (especially for pure raster graphics), NVIDIA offers solid performance with extra bells and whistles (albeit at higher prices – e.g. the $399 RTX 4060 Ti was widely panned as underwhelming techspot.com), and Intel offers fantastic value if you’re okay being an early adopter and dealing with the occasional driver quirk.

- Content Creation and Compute: Beyond gaming, GPUs are used for rendering, video editing, AI, and more. Here, raw performance can depend on the software’s support for each brand. NVIDIA’s compute dominance is well-established; their CUDA and OptiX frameworks mean many professional apps (Blender, Maya, Adobe Premiere, etc.) are optimized for NVIDIA. For instance, in Blender rendering, even a mid-card like NVIDIA’s RTX 3060 Ti could outperform a much more expensive AMD card from the prior gen due to NVIDIA’s superior GPU acceleration (OptiX). In one Blender Cycles benchmark, a $400 RTX 3060 Ti beat an AMD Radeon RX 6900 XT that originally cost $999 cgdirector.com – a stark reminder of how much software optimization matters. AMD has been catching up by improving its drivers (the AMD PRO drivers and HIP runtime allow Blender and some pro apps to use Radeon GPUs now), but NVIDIA still often leads in creative workload performance out-of-the-box cgdirector.com cgdirector.com. That said, AMD’s 7900-series offers hefty VRAM (20–24GB) which can be useful for 4K video projects or large 3D scenes, and at a lower price than NVIDIA’s 24GB models, so they’re a viable choice for creators on a budget. Intel’s Arc GPUs, thanks to 16GB VRAM and decent compute units, also punch above their weight in some creator tasks – for example, Arc GPUs have shown strong performance in video encoding and AI image generation when the software supports oneAPI or Arc’s media engine. Overall, if your priority is compute/AI work, NVIDIA’s higher-end cards (and even their pro-level “RTX A” workstation GPUs or HPC cards like the NVIDIA H100) are favored in industry – not just for their raw hardware power but for the mature software ecosystem (CUDA is ubiquitous in machine learning). In fact, NVIDIA’s focus on AI has been so successful that in the past year roughly 90% of NVIDIA’s revenue came from AI/datacenter sales, not gaming tomshardware.com. 
AMD is trying to challenge that with its CDNA and MI series accelerators (and the Radeon PRO GPUs for workstation users), but those are niche for the public. For an individual buyer doing compute, the choice usually tilts toward NVIDIA for proven performance, unless cost is a constraint – in which case AMD’s and Intel’s offerings can provide more bang for buck if the apps you use support them.
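The “62% slower” and “161% faster” figures quoted for the Cyberpunk 2077 test describe the same gap from opposite directions, which is easy to misread. The short Python sketch below shows the conversion; the function names are illustrative, and the implied RTX 4090 frame rate is derived from the quoted percentages rather than reported by the source.

```python
def slower_to_faster(pct_slower: float) -> float:
    """If card A is `pct_slower` percent slower than card B,
    return how many percent faster B is than A."""
    if not 0 <= pct_slower < 100:
        raise ValueError("pct_slower must be in [0, 100)")
    ratio = 1 - pct_slower / 100  # A's fps as a fraction of B's fps
    return (1 / ratio - 1) * 100


def faster_to_slower(pct_faster: float) -> float:
    """If card B is `pct_faster` percent faster than card A,
    return how many percent slower A is than B."""
    if pct_faster < 0:
        raise ValueError("pct_faster must be non-negative")
    return (1 - 1 / (1 + pct_faster / 100)) * 100


# Figures quoted in the article for Cyberpunk 2077 RT Ultra.
xtx_fps = 41            # approximate RX 7900 XTX frame rate
quoted_faster = 161     # RTX 4090 lead, in percent

print(f"161% faster means the other card is {faster_to_slower(quoted_faster):.1f}% slower")
print(f"62% slower means the other card is {slower_to_faster(62):.1f}% faster")

# Implied (not reported) RTX 4090 frame rate, derived from the quoted lead.
implied_4090_fps = xtx_fps * (1 + quoted_faster / 100)
print(f"Implied RTX 4090 frame rate: about {implied_4090_fps:.0f} fps")
```

The two quoted percentages agree once rounding is taken into account: a 161% lead works out to roughly 62% slower for the trailing card, and both imply an RTX 4090 result a little above 100 fps in that test.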

In summary, NVIDIA leads the pack in absolute performance – especially for high-end gaming and GPU compute – with AMD close behind in gaming and offering better value per dollar. Intel’s Arc isn’t competing at the top end, but it’s shaking up the mid-range with surprisingly competent performance for the price. Next, we’ll examine the price-to-performance equation more closely, and how each vendor fares in delivering value.

Price-to-Performance: Which GPUs Give You the Best Bang for Your Buck?

Value for money is a crucial factor for most consumers. Here’s how the GPU makers stack up when it comes to pricing strategy and bang-for-buck in mid-2025:

- NVIDIA: Historically, NVIDIA has not been shy about premium pricing, and the RTX 40-series launch continued that trend. In many cases you pay extra for NVIDIA’s brand, polish, and features. The RTX 4090 at $1,599 and RTX 4080 originally at $1,199 set eye-watering new price points for consumers. Even midrange Ada cards were pricey – for example, the RTX 4070 Ti launched at $799. Notably, NVIDIA tried to introduce a cut-down RTX 4080 12GB at $899, but this move backfired; the 12GB model had significantly lower specs than the 16GB 4080, prompting a backlash over confusing naming and poor value. NVIDIA actually “unlaunched” the RTX 4080 12GB after the outrage and rebranded it as RTX 4070 Ti with a $799 price (still high, but $100 less) tomshardware.com. This saga was a rare instance of NVIDIA responding to price criticism. In the midrange ($300-$500), NVIDIA’s offerings like the RTX 4060 Ti and 4060 also faced criticism – an 8GB $400 card in 2023 was seen as a bad deal when games were already exceeding that VRAM. TechSpot didn’t mince words: the RTX 4060 Ti 8GB “represents shockingly poor value” techspot.com. NVIDIA did release a 16GB version of the 4060 Ti later (for $499), but by then AMD had 16GB cards for similar or less cost that often outperformed it in raw fps. On the budget end, NVIDIA’s newer RTX 4060 (non-Ti) at ~$299 and RTX 3050 around ~$249 are decent 1080p cards, but they often carry a price premium over AMD/Intel cards that deliver similar frame rates. In summary, NVIDIA generally offers the highest performance in each class, but usually at the highest price – the value (fps per dollar) tends to be lower unless you specifically need their proprietary features. One area where NVIDIA might surprise you in value is previous-gen cards: as the 40-series took over, older RTX 30-series GPUs (like an RTX 3080 or 3070) sometimes went on sale and could outvalue new midrange cards. 
But as of mid-2025, those stocks are mostly dried up tomshardware.com tomshardware.com.

- AMD: Value is where AMD often shines. AMD’s strategy with the RX 7000 series was to undercut NVIDIA’s pricing while delivering competitive performance in each tier. A prime example: the Radeon RX 7900 XTX at $999 was about 17% cheaper than the $1,199 RTX 4080, yet in many games the XTX actually outperforms the 4080 (especially in non-ray-traced performance) tomsguide.com. Even against the $1,599 RTX 4090, the $999 Radeon holds its own in a lot of scenarios – making it a favorite of high-end gamers who want high fps without spending ultra-enthusiast money. In the midrange, AMD launched the RX 7600 8GB at a budget-friendly $269 to target 1080p gamers, and the 12GB RX 7700 XT and 16GB RX 7800 XT in the $450-$500 range, where NVIDIA’s closest competitor (the RTX 4070 12GB) costs about $600. Generally, AMD has been offering more VRAM and similar or better raster performance for less money than NVIDIA. This translates to better longevity and value – for example, an RX 6700 XT from last gen with 12GB often aged better in new games than an 8GB RTX 3070, despite being cheaper. Tom’s Hardware pointed out one pricing quirk in AMD’s lineup: the RX 7900 XT (the step-down model) was originally priced only $100 less than the XTX, making it a relatively poor value next to its big brother tomshardware.com. AMD adjusted some prices after launch, but the principle remains: always compare the next model up, because AMD often offers a lot more performance for a bit more money. For outright price/perf champions, AMD’s last-gen cards on clearance (RX 6000 series) and current midrange (like the 7800 XT) are frequently recommended by reviewers. It’s common to see AMD GPUs topping “best value” charts in various price bands. The trade-off is you might miss out on some of NVIDIA’s top-end features or ultimate ray tracing performance – but if those aren’t priorities, AMD offers some of the best deals in GPUs right now. 
And importantly, AMD tends to keep its mainstream and budget prices more grounded; the RX 7600 8GB at ~$269 undercut NVIDIA’s RTX 4060 by $30 or more, for comparable 1080p performance (with the 7600 even winning in some games).

- Intel: With Arc, Intel is aggressively chasing the value crown to gain market share. The Arc A770 16GB debuted at $349 – an unheard-of price for 16GB of VRAM and performance rivalling a $400+ NVIDIA card. Over time, Intel even slashed prices: the Arc A750 8GB dropped to $249 MSRP, and was often on sale closer to $200, making it one of the best price-to-performance bargains for 1080p gaming. To put that in perspective, an Arc A750 (after driver improvements) competes with or beats an NVIDIA RTX 3050 ($250) and AMD RX 6600 ($230) in many titles, while also offering more VRAM and features like AV1 encoding those didn’t have. That’s tremendous value. Moving into 2024–2025, the new Arc B-series (Battlemage) looks to continue this trend at higher performance levels. The Arc B580 aims at the ~$300-$350 bracket and, as mentioned, can outperform cards like NVIDIA’s RTX 4060 and even RTX 4070 in several metrics tomshardware.com tomshardware.com. If Intel can deliver these at volume, they could redefine midrange value – a card cheaper than a $500+ competitor that beats it is a huge win for consumers. However, caveats apply with Intel: the value of Arc can depend on the specific game or workload. Some older or niche games still run less efficiently on Arc (due to driver overhead for DX11 titles, for example), meaning an Arc A770 might perform more inconsistently than an NVIDIA/AMD card of similar raw horsepower. If you play a wide variety of games, especially older ones, the “worst-case” performance of Arc might undercut its value. Still, for many mainstream modern games (especially those using DX12/Vulkan), Arc’s performance per dollar is excellent. Intel also sweetened the deal by focusing on future-proof features – every Arc card has full AV1 encode/decode, plenty of VRAM, and PCIe 4.0, which at their price points made NVIDIA’s and AMD’s budget cards look a bit skimpy. 
In short, Intel Arc offers fantastic hardware for the money, but you are also “investing” in a newer ecosystem – the value equation might be less about average FPS per dollar and more about your tolerance for a few rough edges. Enthusiast reviews have generally praised Intel for delivering on value; as one user quipped, “$250 for the A770 16GB is incredible value – if you’re a moderate skill PC gamer” who doesn’t mind tweaking settings and drivers linustechtips.com.

Overall, in mid-2025 the price-to-performance king in pure gaming fps is often AMD, especially from midrange downwards, with Intel Arc challenging that crown in the lower midrange. NVIDIA provides the lowest value in raw fps per dollar (you pay extra for those last few percentage points of performance and the features), though for some buyers that premium is worth it. Market conditions matter too: supply and demand swings (crypto mining in the past, AI demand now) have at times pushed GPU prices far above MSRP. By 2025 the worst of the shortage is over, and cards are generally in stock, though often at “elevated” prices compared to launch. As Tom’s Hardware noted, we’re not back to MSRP across the board; many GPUs still sell at a premium, and “outside of isolated sales, we expect these elevated prices to be the state of things for the foreseeable future” tomshardware.com. In other words, value-seekers have to stay vigilant for sales and perhaps consider last-gen cards or the new Intel offerings to get the best deals.
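For readers who want to check the value arithmetic themselves, here is a minimal Python sketch. The prices are the launch prices quoted in this article; the relative performance figures are an assumption (the midpoint of the 20–30% rasterized 4K lead cited earlier for the RTX 4090), not measured benchmark data, and the helper names are purely illustrative.

```python
def percent_cheaper(price: float, reference_price: float) -> float:
    """Return how much cheaper `price` is than `reference_price`, in percent."""
    return (reference_price - price) / reference_price * 100.0


def perf_per_dollar(relative_perf: float, price: float) -> float:
    """Relative performance delivered per dollar spent."""
    return relative_perf / price


# Launch prices (USD) as quoted in the article.
PRICES = {
    "RX 7900 XTX": 999,
    "RTX 4080": 1199,
    "RTX 4090": 1599,
    "RX 7600": 269,
    "RTX 4060": 299,
}

# ASSUMPTION: rasterized 4K performance relative to the RX 7900 XTX, using
# the midpoint (25%) of the 20-30% RTX 4090 lead cited in the article.
ASSUMED_RASTER_PERF = {"RX 7900 XTX": 1.00, "RTX 4090": 1.25}

xtx_discount = percent_cheaper(PRICES["RX 7900 XTX"], PRICES["RTX 4080"])
rx7600_discount = percent_cheaper(PRICES["RX 7600"], PRICES["RTX 4060"])

xtx_value = perf_per_dollar(ASSUMED_RASTER_PERF["RX 7900 XTX"], PRICES["RX 7900 XTX"])
rtx4090_value = perf_per_dollar(ASSUMED_RASTER_PERF["RTX 4090"], PRICES["RTX 4090"])
value_ratio = xtx_value / rtx4090_value

print(f"RX 7900 XTX vs RTX 4080: {xtx_discount:.1f}% cheaper")   # about 16.7%
print(f"RX 7600 vs RTX 4060:     {rx7600_discount:.1f}% cheaper")  # about 10.0%
print(f"RX 7900 XTX raster perf per dollar vs RTX 4090: {value_ratio:.2f}x")  # about 1.28x
```

Under that assumed 25% lead, the RX 7900 XTX delivers roughly 28% more rasterized performance per dollar than the RTX 4090 at launch prices. Street prices and ray-traced workloads would shift the result, so treat the output as a way to reason about value rather than a verdict.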

Power Consumption and Efficiency

Modern GPUs are incredibly powerful – but with great power comes great power consumption. Let’s compare how NVIDIA, AMD, and Intel GPUs handle power and efficiency:

- NVIDIA: The RTX 40-series pushed performance limits, and that meant high power draw on the top-end cards. The flagship RTX 4090 has a 450W TGP (Total Graphics Power) thepcenthusiast.com, which is the highest ever for a single consumer GPU. Running such a card requires a quality power supply (NVIDIA recommends at least an 850W PSU) and adequate case cooling. NVIDIA also introduced a new 12VHPWR power connector (16-pin) for the RTX 4090/4080 – which, while compact, sparked a controversy when a small number of users reported melting connectors/adapters. NVIDIA acknowledged ~50 cases globally of 4090 cables melting, attributing it largely to improperly seated connectors causing high resistance and heat, and advised users to ensure the plug is firmly inserted pcworld.com pcworld.com. This was more a cable issue than a fundamental flaw of the GPU, and by 2023 new revisions and user awareness have largely resolved it, but it’s a reminder that these cards push a lot of watts. Power vs performance: Despite the high absolute wattage, NVIDIA’s Ada Lovelace GPUs are actually quite efficient for their performance level. On a perf-per-watt basis, the RTX 4090 at 450W still delivers better FPS/Watt than its predecessor (RTX 3090 at 350W) did, thanks to architectural improvements. In fact, many enthusiasts undervolt or power-limit the RTX 4090 to, say, 350W, and still get near-max performance – showing Ada’s efficiency headroom reddit.com. For more mainstream cards, NVIDIA dialed back power: the RTX 4070, for instance, draws around 200W, and the compact RTX 4060 is only ~115W. These lower-tier cards are extremely efficient, easily handled by modest PSUs and producing little heat. NVIDIA also tends to manage idle power well and has features like adjustable power profiles via GeForce Experience. 
One knock on NVIDIA’s midrange is that its narrow 128-bit buses and smaller caches can make the cards inefficient when pushed to the limit (4K or ultra settings) with insufficient VRAM, as the GPU stalls waiting on memory – but this is more a design tradeoff than a power issue. Overall, NVIDIA’s efficiency is excellent at the chip level, but they willingly use more power at the high end to guarantee leadership performance. If you go NVIDIA, be prepared for big coolers and high wattage on the flagship, but also know you’re getting a lot of performance per watt.

- AMD: AMD’s RDNA architecture has focused heavily on efficiency improvements, and it shows. The Radeon RX 7900 XTX has a 355W TBP, nearly 100W lower than the RTX 4090 thepcenthusiast.com, yet as we’ve seen it’s not too far off the 4090’s performance in many scenarios. In pure raster performance per watt, AMD’s RDNA 3 is competitive with or even slightly better than NVIDIA’s Ada (varies by workload). Tom’s Hardware listed “excellent efficiency gains” as a pro for the RX 7900 series tomshardware.com. AMD achieved this by moving to a chiplet design (smaller 5nm graphics die plus 6nm memory/cache dies) and architectural tweaks. The result: at equal power limits, the RX 7900 XTX can match or beat NVIDIA’s second-best (RTX 4080) while using less power. One example of efficiency: a power-modded RX 7900 XTX at an extreme 700W (!) was shown in a test to roughly match an RTX 4090 in performance tomshardware.com – highlighting that the XTX, if allowed to draw more power, had headroom to spare. But running any GPU at 700W is impractical; more relevant is that at ~300W the 7900 XTX is extremely fast and more efficient than previous-gen AMD cards by a big margin (RDNA 3 improved perf/W by ~50% over RDNA 2). AMD’s midrange cards also sip power: the RX 7600 has a modest 165W TBP, and even the 7800 XT is around 263W, often drawing less in practice. One area AMD focused on is idle and multi-monitor power – early RDNA 3 drivers had a bug causing high idle power, but updates fixed that, and now Radeons idle at only a handful of watts when the monitors allow it (similar to Nvidia). When comparing AMD vs NVIDIA, it’s often noted that AMD’s GPUs have slightly higher maximum efficiency in purely GPU-limited scenarios, while NVIDIA’s advantage comes in scenarios where their specialized cores (RT/Tensor) can do work more efficiently than AMD’s general-purpose approach. 
For example, in ray tracing with DLSS on, the RTX 4080 might hold 60 fps at 300W, whereas the RX 7900 XTX might need 355W to reach a lower frame rate with FSR – giving NVIDIA the better performance per watt in that context. Still, in gaming without RT, AMD’s top cards can deliver slightly more frames per watt than NVIDIA’s top cards. The bottom line: AMD provides high performance with somewhat lower power draw at the high end, and great efficiency in the midrange – making them attractive for those conscious of power/heat. The triple-fan coolers on cards like the 7900 XTX handle the 300-350W range well (notably, the reference AMD cooler drew little criticism until a faulty vapor chamber was found in some units – a manufacturing defect that AMD addressed via RMA tomshardware.com). AMD also tends to allow a bit more flexibility with power sliders in their driver software if users want to undervolt or overclock.

- Intel: Intel’s Arc GPUs are built on TSMC 6nm (for Alchemist) and likely 4nm for Battlemage, and they target midrange performance levels, so their power consumption is moderate. The Arc A770 has a 225W TDP, and the A750 is 200W. In real-world gaming, they often draw around that or a bit less. One interesting quirk was Arc’s idle power and multi-monitor power initially – early on, Arc cards could draw 30-50W at idle with multiple monitors, which is quite high, and the fans on the Intel Limited Edition cards sometimes ran constantly because of that gamersnexus.net. Driver updates fixed some of these issues (e.g., better fan control, improved idle behavior), but Intel is still ironing things out. In terms of efficiency, Intel’s GPUs can be very efficient in modern APIs (DX12/Vulkan) where they utilize the hardware fully. They were fabbed on a slightly older process node and have fewer years of architectural refinement, so generally an Arc A770 is a bit less performance-per-watt than an AMD/NVIDIA equivalent. Additionally, if Arc is running a game in DX11 via driver translation layers, it might use more CPU and operate less efficiently, spiking power for less performance gamersnexus.net gamersnexus.net. That said, Intel did design Arc with some forward-looking efficiency choices: they rely on Resizable BAR (ReBAR) to efficiently feed the GPU, which works great on modern systems but means on older PCs without ReBAR, Arc cards suffer significant performance loss and thus very poor efficiency (again, something to be aware of) gamersnexus.net. The new Arc B580 reportedly has a 175W TDP for performance slightly above a 200W NVIDIA RTX 4070 tomshardware.com tomshardware.com, indicating Intel may have improved perf-per-watt with Battlemage. In summary, Intel’s efficiency is decent but not class-leading – their focus was getting the performance and features right first. 
If you run Arc in ideal conditions (new games, ReBAR enabled, latest drivers), you get good performance per watt. But if not, Arc can draw a lot of power doing translation work (e.g., older API overhead). The upshot: Intel’s cards are fine on power for the midrange (and they certainly don’t approach the high TDPs of NVIDIA/AMD flagships), but they don’t currently threaten NVIDIA/AMD in efficiency leadership.

- Real-world implications: For most users, power consumption matters for three reasons – thermals/noise, electricity cost, and required PSU capacity. NVIDIA and AMD’s high-end cards will dump a lot of heat into your case; partner cards from both often have massive 3-4 slot coolers to keep noise down. AMD’s slight edge in using fewer watts means something like a reference 7900 XTX can often run a bit quieter/cooler than a 4090 under load (since it’s not consuming as much power), but on the flip side NVIDIA’s cooler designs (like the 4090 Founders Edition) are very robust too. At the midrange and lower, all the cards from any vendor are fairly easy to cool and quiet – a 225W Arc or 200W RTX 3060 Ti, etc., are well within the cooling capability of dual-fan designs and don’t mandate huge PSUs. One notable change in power standards was the introduction of ATX 3.0 power supplies and the 12VHPWR connector for next-gen GPUs. While NVIDIA adopted it for 40-series, AMD stuck to the traditional 8-pin connectors for 7000-series (so no adapters needed for AMD cards). Intel’s Limited Edition Arc A7 cards also used standard 8-pins. So, if you were concerned about the 4090 cable issues, know that AMD’s high-end cards avoid that entirely by using two 8-pin connectors on the reference design (and three on many partner cards), albeit at the cost of more cables. It’s also worth noting that vendors offer power-related features in software: NVIDIA, for example, has a Frame Rate Target feature that caps frame rate and so can reduce power draw; AMD introduced HYPR-RX which can auto-tune settings for performance or efficiency.

In the end, if you want the most efficient gaming GPU for a given performance, AMD’s RDNA3 offers slightly better efficiency in raster games, while NVIDIA’s Ada offers better efficiency in ray-traced or AI-accelerated tasks. Intel is respectable for the mainstream but is still optimizing. All three vendors now support modern codec acceleration and have improved idle power, so day-to-day energy use (when not gaming) is low for all. Just remember: the faster the card, generally the more power it will use – so a midrange card from any vendor will be far more watt-friendly than the ultra-high-end models.
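To make the performance-per-watt comparison above concrete, here is a minimal Python sketch of the arithmetic. The 60 fps at 300W and 355W figures echo the illustrative example earlier in this section; the 50 fps value, the hours of play per day, and the electricity price are placeholder assumptions rather than measurements, so substitute numbers from a review you trust.

```python
from dataclasses import dataclass


@dataclass
class GpuSample:
    name: str
    avg_fps: float        # average frame rate in one game at one setting
    board_power_w: float  # average board power during that run, in watts


def fps_per_watt(sample: GpuSample) -> float:
    """Frames per second delivered for each watt of board power."""
    return sample.avg_fps / sample.board_power_w


def yearly_energy_cost(board_power_w: float, hours_per_day: float,
                       price_per_kwh: float) -> float:
    """Rough yearly electricity cost of gaming at a given board power."""
    kwh_per_year = board_power_w / 1000 * hours_per_day * 365
    return kwh_per_year * price_per_kwh


# Hypothetical samples: only the 60 fps / 300 W / 355 W values come from the
# text above; 50 fps is an assumed stand-in for "lower fps".
card_a = GpuSample("Card A (RT + upscaling)", avg_fps=60.0, board_power_w=300.0)
card_b = GpuSample("Card B (RT + upscaling)", avg_fps=50.0, board_power_w=355.0)

for card in (card_a, card_b):
    cost = yearly_energy_cost(card.board_power_w, hours_per_day=3, price_per_kwh=0.15)
    print(f"{card.name}: {fps_per_watt(card):.3f} fps/W, about {cost:.2f} per year")
```

With these assumed inputs, the gap in yearly running cost is small compared with the purchase price, which is why efficiency tends to matter more for heat and noise than for the electricity bill.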

Features and Technology: Ray Tracing, AI, DLSS vs FSR vs XeSS, and More

Raw speeds and feeds are only part of the story. The 2020s GPUs are defined by their advanced features – real-time ray tracing, AI-enhanced graphics, upscaling techniques, media encoders, and software ecosystems. Here’s how NVIDIA, AMD, and Intel compare on the feature front:

- Real-Time Ray Tracing: NVIDIA pioneered modern ray tracing on GPUs with its RTX 20-series (2018), and by the RTX 40-series they are on their 3rd generation of RT Cores. This gives NVIDIA a strong lead in ray-traced performance and capabilities. Games that use DirectX Raytracing or Vulkan RT run significantly faster on GeForces, especially high-end ones, than on equivalent Radeon or Arc cards. For example, enabling ray tracing can make an AMD 7900 XTX fall well behind an RTX 4080 or 4090 in the same game – ThePCEnthusiast’s tests showed the RTX 4090 “outperforms the RX 7900 XTX significantly in games where ray tracing is enabled”, often by a large margin thepcenthusiast.com. NVIDIA’s architecture just has more dedicated hardware (like 128 RT cores on a 4090 vs 96 “Ray Accelerators” on 7900 XTX) and more refined algorithms. They also support full path tracing in tech demos (e.g., Portal RTX or Cyberpunk RT Overdrive mode) that really stress ray tracing; these demos are basically NVIDIA-only at playable framerates. AMD introduced ray tracing with its last-gen and improved in RDNA 3 (2nd-gen ray accelerators). AMD cards can certainly do ray tracing – and in lighter implementations or at lower resolutions, they do fine – but in heavy scenes, they lag. It’s not uncommon to see an RX 7900 XTX performing like a card one or two tiers lower from NVIDIA when RT is on (e.g., closer to an RTX 4070 in some cases). Intel’s Arc Alchemist GPUs also have hardware ray tracing (they actually have competitive ray intersection units – Intel positioned Arc’s RT performance between AMD and NVIDIA’s first-gen efforts). Arc GPUs can surprisingly hold their own in ray tracing against similarly priced cards; one review noted Arc A770’s RT performance was closer to an RTX 3060 Ti, and better than AMD’s RX 6600 XT in some tests techpowerup.com pcgamesn.com. However, since Arc struggles in some games generally, its RT advantage is situational. 
Summary: NVIDIA leads in ray tracing, making it the preferred choice if you want to max out ray-traced effects. AMD’s latest cards are roughly on par with NVIDIA’s previous gen for RT (a 7900 XTX ~ an RTX 3080 Ti/3090 in RT performance). Intel is around that same previous-gen level in RT for supported games. All three support the major APIs (DXR, Vulkan RT) so any ray-traced game will run on all, just at different speeds.

- AI Acceleration & Tensor Cores: NVIDIA also has a multi-year head start in AI hardware on GPUs. Their Tensor Cores (4th-gen in RTX 40 series) are specialized units for matrix math, originally added for professional workloads but now heavily used in consumer features like DLSS (AI upscaling) and RTX Voice. These give NVIDIA an edge in any feature that leverages AI. For instance, when running AI tasks (like Stable Diffusion image generation or training small ML models), NVIDIA GPUs tend to be far faster because frameworks are optimized for CUDA and Tensor Cores. AMD’s RDNA3 does include some AI accelerators, but they are not as broadly utilized – AMD hasn’t yet introduced a marquee consumer feature that uses them (though things like AMD’s Radeon Super Resolution or noise suppression exist, they don’t tap matrix hardware the way NVIDIA’s features do). Intel’s Arc GPUs have XMX Engines, which are functionally similar to Tensor cores (and in fact can also do matrix ops for AI). Intel leverages XMX for XeSS: on Arc GPUs, XeSS runs on the XMX engines, while on non-Arc hardware it falls back to DP4a instructions. In practice, NVIDIA’s AI advantage is in software ecosystem and performance. The DLSS feature (detailed next) is a prime example, but also the broader support: NVIDIA GPUs accelerate AI in Topaz video upscalers, Adobe Photoshop (for certain filters), Blender (OptiX AI denoiser), and countless machine learning projects. AMD is trying to catch up by promoting open compute libraries (ROCm and HIP) and by releasing FSR 3 with “Fluid Motion Frames”, a frame generation competitor that produces interpolated frames akin to DLSS 3’s Frame Generation without requiring dedicated AI hardware. As of mid-2025, AMD’s FSR 3/AFMF is just rolling out and works on any GPU (even NVIDIA’s) but is still new tomshardware.com.
It’s fair to say NVIDIA is ahead in practical AI features on consumer GPUs, AMD is a bit behind but pushing open solutions that work across hardware, and Intel is using AI mainly to enhance their own features like XeSS and not for a lot of third-party apps yet.

- Upscaling Technologies (DLSS vs FSR vs XeSS): These have become game-changers for squeezing more performance out of GPUs. NVIDIA DLSS (Deep Learning Super Sampling) is the most mature and generally the highest-quality upscaling tech, now in its 3rd iteration. DLSS 2 can boost frame rates by rendering at a lower res and using AI to reconstruct an image close to native quality. DLSS 3, exclusive to RTX 40-series, adds Frame Generation where the GPU’s Optical Flow Accelerator and AI interpolate entirely new frames, which can double frame rates in CPU-limited or ultra-ray-traced scenarios. Gamers and reviewers have found DLSS 3 can massively improve smoothness (e.g. taking a 60 fps base to 120 fps), though it can add a bit of latency and sometimes visual artifacts. AMD’s FidelityFX Super Resolution (FSR) is the open alternative. FSR 2 is a temporal upscaler (no AI) that works on any GPU and is widely supported in games. It doesn’t require special hardware, so even older cards benefit. The trade-off: DLSS 2 still tends to produce slightly sharper or more stable images than FSR 2 in head-to-head comparisons (especially in fine detail and anti-aliasing). ThePCEnthusiast noted “NVIDIA’s DLSS is overall far better than AMD’s upscaling” in terms of quality thepcenthusiast.com – perhaps a bit hyperbolic, but it reflects the sentiment that DLSS (with a trained neural network running on dedicated Tensor cores) is more refined. AMD’s FSR 3 (with Fluid Motion Frames) aims to close that gap by offering frame generation that could work on all hardware. If AMD succeeds, even NVIDIA gamers might use FSR 3 in games that don’t have DLSS 3. Intel’s XeSS is somewhat in-between: it does use AI (on Arc’s XMX or via DP4a on others) and provides image quality on par with DLSS/FSR in many cases. It hasn’t been adopted as widely as FSR or DLSS, but notable games like Shadow of the Tomb Raider and Death Stranding support XeSS.
Bottom line: NVIDIA currently leads in upscaling tech with DLSS, particularly because of frame generation on RTX 40 cards. AMD’s FSR is a strong contender that’s universally accessible, making it a great boon for AMD card owners and even those on older GPUs. Intel’s XeSS is a nice bonus for Arc users, showing Intel is contributing to this space too.

- Resolution and Refresh Rate Features: All modern GPUs support high resolutions and high refresh monitors. One differentiator historically was adaptive sync tech: NVIDIA had G-Sync (proprietary module) monitors, AMD championed FreeSync (open standard using Adaptive-Sync). In 2025, this has converged – virtually all monitors are “FreeSync” (Adaptive-Sync) and NVIDIA supports those as G-Sync Compatible. So no matter your GPU, you can get tear-free variable refresh on most monitors. NVIDIA still sells some premium G-Sync Ultimate displays with extras, but that’s niche. In terms of max resolutions: NVIDIA and AMD high-end can drive 4K and even 8K (with DSC). The RTX 4090 even has HDMI 2.1a and DisplayPort 1.4 (curiously not DP2.0 yet on 40 series, whereas AMD’s 7000 series actually included DisplayPort 2.1 support – meaning AMD cards are a bit more future-proof for upcoming ultra-high-res monitors). Intel’s Arc also supports DisplayPort 2.0, which is a forward-looking feature (for e.g. 4K at 240Hz or 8K at 60Hz without compression). So AMD and Intel get a nod for being first with DP2.0/2.1 support. NVIDIA likely will add that in next gen.

- Media Encoding & AV1: A big win for this generation of GPUs is the inclusion of AV1 video encoding on all three vendors’ latest cards. AV1 is a modern, more efficient codec than H.264/HEVC, great for streamers and video editors. Intel Arc was first to market with AV1 encode, and it earned a lot of praise – streamers found that an Arc A770 could encode 1080p or 1440p AV1 with excellent quality and performance, outclassing CPU encoding and matching or beating NVIDIA’s older encoders. NVIDIA caught up by putting dual AV1 encoders in the RTX 40-series (the 4090/4080 can encode two 4K streams at once). AMD’s RX 7000 series finally added AV1 encode as well (their previous gen lacked it). In terms of encoder quality, independent tests by Tom’s Hardware reveal a nuanced picture: for H.264 encoding, NVIDIA’s NVENC is top-notch with Intel Arc “just a hair behind” in quality tomshardware.com, and AMD trailing (AMD’s H.264 quality has historically been worst of the three) tomshardware.com. For HEVC (H.265), Intel’s Arc actually came out best in quality, slightly ahead of NVIDIA, with AMD closing the gap but still behind tomshardware.com tomshardware.com. And for AV1, all three are very close, but interestingly Arc’s AV1 quality was measured a tad lower than its HEVC (Arc led in HEVC, but in AV1 it was about 2-3 points lower in VMAF than NVIDIA) tomshardware.com tomshardware.com. The differences are small – practically, NVIDIA and Intel both offer excellent broadcast-quality encoders, and AMD’s new encoder is much improved from the past, now “good enough” for most uses but still slightly behind in metrics. If you are a streamer or do a lot of video work: any of the new GPUs will serve you well for encoding, but NVIDIA’s widespread adoption in apps (NVENC support is everywhere) might make it the easiest choice. Intel’s Arc has niche uses, for example some OBS users build a second PC with a cheap Arc GPU purely as an AV1 streaming encoder. 
AMD’s encoder should not be discounted either – at high bitrates or with AV1, the gap is small. One more note: the higher-end RTX 40-series cards (RTX 4070 Ti and above) carry two encoders, which can be used in parallel or for features like dual-stream recording or 8K60 capture, which is a plus for extreme cases.

- Proprietary and Niche Features:

- NVIDIA has a whole suite: RTX Voice/Broadcast for AI noise removal and virtual background (works only on GeForce GPUs with Tensor cores), NVIDIA Reflex to reduce input lag in supported games (this is widely supported by games and works on GeForce cards going back several generations, not just RTX models; paired with compatible G-Sync monitors, it also enables Reflex Analyzer latency measurement), and ShadowPlay for easy game recording with minimal overhead. They also have CUDA for general GPU computing which many creative apps use (from Adobe to Unreal Engine’s GPU Lightmass, etc.). For creative professionals, NVIDIA’s Studio Drivers and certifications for apps like Autodesk Maya or DaVinci Resolve provide additional stability. And one more cool feature: GeForce Experience filters (Freestyle) let you apply post-processing filters to games – fun for content creation.

- AMD’s software includes features like Radeon Anti-Lag (similar to Reflex to reduce latency), Radeon Chill (to save power by capping framerates when possible), Radeon Image Sharpening and Super Resolution (a driver-level upscaler for games that don’t have one, akin to FSR but forced at driver level). AMD has an AMD Link to stream your games to other devices. They also introduced a noise suppression for microphones (their answer to RTX Voice, using a different approach). For pros, AMD’s ROCm is their GPU compute platform, but it’s mostly relevant in Linux or AI circles; on Windows, they rely on OpenCL or DirectML for acceleration in apps – which historically meant fewer optimizations, but that’s changing as AMD works with software vendors (Blender’s Cycles now supports AMD via HIP, and Premiere Pro has some GPU acceleration that works on AMD).

- Intel’s Arc software (Arc Control) is evolving. It has a built-in performance tuner and overlay, and a neat feature called Smooth Sync, which is a kind of hybrid v-sync that reduces visible tearing if you don’t have VRR. Arc Control also has capture and streaming features integrated (taking a page from GeForce Experience). As for unique Intel advantages: one is that Intel’s GPUs and CPUs together can leverage things like Deep Link (in laptops, combining iGPU and dGPU for certain tasks). On desktop, one interesting use is Intel’s oneAPI rendering toolkit – Intel GPUs can accelerate ray tracing in the Embree library or OSPRay (used in some professional visualization) and work in conjunction with Intel CPUs. This is niche, but worth noting for those in specific fields. Intel has also open-sourced a lot of its driver stack, and its day-0 game support is slowly improving.

To sum up the features comparison: NVIDIA offers the richest and most polished feature set, from the best ray tracing and DLSS (with frame generation), to a robust media engine and broad software support. AMD offers most of the same base capabilities (ray tracing, upscaling, encoding) but with a more open approach – their features often work on any hardware and they prioritize giving users more control (many toggles in driver for customizing your experience). AMD is catching up in software, but still trails a bit in things like AI upscaling quality and certain pro application support. Intel is surprisingly competitive on features (Arc has all modern tech) but is still building up its ecosystem – drivers are improving, and features like XeSS and Smooth Sync are nice, but the overall Arc experience is not yet as seamless as the other two.
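As a rough illustration of why the upscalers discussed above save so much work, the Python sketch below computes the internal render resolution for a given output resolution. The per-axis scale factors are the ones AMD publishes for the FSR 2 quality modes as far as I am aware; DLSS and XeSS presets use similar but not identical ratios, so treat the table as an assumption and adjust it for the upscaler you care about.

```python
# Per-axis scale factors for the upscaler quality modes (assumed to match
# AMD's published FSR 2 presets; other upscalers differ slightly).
SCALE_FACTORS = {
    "quality": 1.5,
    "balanced": 1.7,
    "performance": 2.0,
    "ultra_performance": 3.0,
}


def internal_resolution(out_width: int, out_height: int, mode: str) -> tuple[int, int]:
    """Resolution the GPU actually renders before upscaling to the output size."""
    factor = SCALE_FACTORS[mode]
    return round(out_width / factor), round(out_height / factor)


def rendered_pixel_fraction(out_width: int, out_height: int, mode: str) -> float:
    """Share of the output pixel count that is rendered natively."""
    width, height = internal_resolution(out_width, out_height, mode)
    return (width * height) / (out_width * out_height)


for mode in SCALE_FACTORS:
    w, h = internal_resolution(3840, 2160, mode)
    share = rendered_pixel_fraction(3840, 2160, mode)
    print(f"4K {mode}: renders {w}x{h} ({share:.0%} of output pixels)")
```

At a 4K output, the quality mode renders at 2560x1440 and the performance mode at 1920x1080, so the GPU shades well under half, or only a quarter, of the final pixel count. Frame generation is a separate step that inserts extra frames on top of this.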

The good news is that regardless of brand, 2025’s GPUs are packed with tech that goes beyond just pushing pixels – they’re doing real-time movie-like effects, intelligent upscaling, high-quality streaming, and more, making PC gaming and content creation better than ever.
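For readers curious about the display-output point made earlier in this section, the following Python sketch estimates the uncompressed data rate a video mode needs and compares it with approximate DisplayPort link payload rates. It counts active pixels only and ignores blanking intervals, so the result is a lower bound; the payload figures are the commonly quoted post-encoding rates, and which link rate a particular card exposes is outside the scope of this sketch.

```python
# Approximate usable payload per link type in Gbit/s, after line-coding
# overhead (commonly quoted figures; treat them as assumptions).
LINK_PAYLOAD_GBPS = {
    "DisplayPort 1.4 (HBR3)": 25.92,
    "DisplayPort 2.x (UHBR10)": 38.69,
    "DisplayPort 2.x (UHBR13.5)": 52.22,
    "DisplayPort 2.x (UHBR20)": 77.37,
}


def active_pixel_rate_gbps(width: int, height: int, refresh_hz: int,
                           bits_per_channel: int = 8) -> float:
    """Lower-bound data rate for uncompressed RGB video (blanking ignored)."""
    bits_per_pixel = 3 * bits_per_channel
    return width * height * refresh_hz * bits_per_pixel / 1e9


def links_that_fit(required_gbps: float) -> list[str]:
    """Link types whose payload rate covers the required data rate."""
    return [name for name, cap in LINK_PAYLOAD_GBPS.items() if cap >= required_gbps]


for label, mode in {"4K 60Hz": (3840, 2160, 60),
                    "4K 240Hz": (3840, 2160, 240),
                    "8K 60Hz": (7680, 4320, 60)}.items():
    need = active_pixel_rate_gbps(*mode)
    print(f"{label}: at least {need:.2f} Gbit/s, fits: {links_that_fit(need)}")
```

Under these assumptions, 4K at 240Hz and 8K at 60Hz each need roughly 48 Gbit/s before blanking, which is beyond DisplayPort 1.4 without Display Stream Compression and also beyond the lowest DisplayPort 2.x link rate.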

Software, Drivers and Ecosystem Support

The hardware is only as good as the software driving it. Stable drivers, game compatibility, and ecosystem support (in operating systems, game engines, creative apps, etc.) are crucial for a smooth experience.

- Driver Maturity and Stability: NVIDIA has a long-standing reputation for solid, if not always perfect, drivers. They release Game Ready drivers frequently, often on or before major game launches to ensure optimal performance and bug fixes. For most users, NVIDIA’s driver experience is plug-and-play – serious driver issues are relatively rare, though not unheard of (e.g., a driver in early 2023 had a bug that caused abnormally high CPU usage after closing a game – it was quickly fixed). NVIDIA provides both Game Ready and Studio driver branches, the latter being tested more for professional app stability. Their control panel interface is a bit old-school, but it’s stable and they’ve added features via GeForce Experience. One NVIDIA-specific ecosystem point: on Linux, NVIDIA’s proprietary drivers historically had some friction (less open than AMD’s). But they have improved and even open-sourced some kernel modules recently. Still, for open-source enthusiasts, AMD is usually the go-to. AMD’s drivers had a rocky reputation years ago (the old joke “AMD drivers” being code for issues), but in recent generations they’ve improved greatly. The Adrenalin driver suite is now feature-rich and generally stable. AMD had some stumbles – for instance, there was an infamous issue in early 2023 where installing the driver through the Adrenalin software while a Windows update was running could, in rare cases, corrupt system files (this was traced to a Windows interaction and fixed). But those edge cases aside, AMD’s day-to-day driver is quite robust now. They also support day-0 game drivers, though sometimes not as quickly as NVIDIA for less popular titles. One nice aspect of AMD’s software is the Adrenalin interface, which many find more modern and user-friendly than NVIDIA’s barebones control panel. It includes monitoring, streaming, recording, tuning – all in one.
AMD also leads in open-source driver development: on Linux, the AMDGPU driver is open and integrated into the kernel, which Linux gamers appreciate (Valve’s Steam Deck uses an AMD GPU, leveraging that open driver). So if you use alternative operating systems or just like transparency, AMD gets kudos. Intel’s Arc drivers are the newest and thus had the most kinks to iron out. At launch (late 2022), Arc drivers struggled with many older games, had inconsistent performance, and features like ReBar requirement caught people off guard. Over 2023, Intel pushed out dozens of driver updates, each squashing bugs and improving performance. Gamers Nexus, after a year, noted that Arc’s software “has improved dramatically” and Intel resolved many early issues, though some “disruptive” problems remained for casual users (like needing to tweak things for certain games, or requiring a second monitor to troubleshoot black screens) gamersnexus.net gamersnexus.net. Intel’s driver UI (Arc Control) has had its share of criticism for being clunky or buggy initially – e.g., earlier versions spammed the user with notifications and lacked some basic controls, though Intel has been refining this. By mid-2025, Arc drivers are stable for most modern games, but you might still encounter hiccups with certain older titles or niche use cases. Intel’s commitment will be measured by how they continue to support Alchemist users even as Battlemage rolls out. There’s optimism here: Intel open-sourced parts of their drivers and engaged with the community (providing frequent progress updates). If you’re an Intel GPU owner, you can expect to update drivers regularly for the best experience – which some enthusiasts enjoy (constant improvement) but others might find tedious.

- Game Compatibility and Performance Optimization: Most games “just work” on all three vendors nowadays, thanks to industry standards like DirectX12 and Vulkan, and efforts by GPU makers to comply with them. However, edge cases: older DirectX9/11 games ran into performance issues on Intel Arc at first because Intel’s driver uses a translation layer for DX9 (since Arc is optimized for DX12+). Intel has improved this a lot (DX9 games now run much faster than at launch, with over 40% gains in some cases hothardware.com), but if your favorite game is a 15-year-old DX9 title, an NVIDIA or AMD card might still handle it more smoothly due to their driver optimizations baked over many years. For the latest games, developers typically test on all three vendors. NVIDIA’s high market share means games are virtually always well-optimized for GeForce out-of-box. AMD, being in both PCs and consoles (the Xbox Series X|S and PS5 use AMD GPUs), also sees good optimization – many games run great on Radeon because consoles set a baseline. In fact, sometimes game devs optimize for consoles first, which inadvertently favors AMD’s architecture in PC ports. We saw this in a few titles where Radeons punched above their weight. Intel is the wildcard – with lower market share, not every dev spends equal time on Arc optimization. The flip side is Intel actively works with developers of popular engines and sponsored titles to get them on board (for example, Intel worked on enabling XeSS and optimizing for Arc in Call of Duty: Modern Warfare II and others). Over time, Intel’s presence will likely ensure better “out of the box” support.

- Ecosystem (Game Engines, APIs, and Software Support):

- Game Engines: Unreal Engine, Unity, CryEngine, etc., all support the big three GPUs. NVIDIA often provides plugins or integrations (like DLSS plugins for Unreal, or PhysX in earlier days). AMD promotes open features (like FidelityFX effects, which many engines incorporate). Intel will likely push XeSS and other tech into engines as well. If you’re a developer, NVIDIA’s dev relations are renowned – they supply tools for debugging and profiling (Nsight), and have extensive documentation. AMD also has tools (Radeon GPU Profiler, etc.) and is beloved for their open-source libraries (like AMD’s GPUOpen initiatives). Intel is building their developer ecosystem with oneAPI and tools that support cross-vendor programming.

- Content Creation software: This is where NVIDIA has a clear advantage due to CUDA and OptiX being ubiquitous. If you run Adobe Photoshop or Premiere, both NVIDIA and AMD will accelerate many effects, but some proprietary ones use CUDA (so only NVIDIA works). Blender’s Cycles renderer uses either CUDA/OptiX (NVIDIA) or HIP (AMD) or oneAPI (Intel). In Blender’s latest benchmarks, a high-end NVIDIA card still outperforms a comparable AMD card in rendering, partly thanks to the OptiX AI denoiser and RTX’s ray tracing hardware being fully utilized. AMD is closing the gap; their GPUs can now run many of these workloads with the right settings, but historically creatives tended to favor NVIDIA for reliability and performance. However, the tide is changing a bit: GPU renderer engines like OTOY Octane, Maxon Redshift, etc., that were once NVIDIA-only (CUDA) are starting to offer versions for AMD (via Metal on Mac, or via HIP on PC). AMD’s large VRAM counts also attract content creators (for instance, if you do GPU video editing or 3D, a 20GB AMD card at far less cost than NVIDIA’s 24GB could be a great value). Intel’s Arc in content creation is still nascent – one notable mention: Puget Systems found Arc GPUs after driver updates could outperform NVIDIA’s RTX 3060 in some Adobe Premiere GPU effects and even beat RTX 4060 in certain tasks pugetsystems.com. Intel has optimized their drivers for creative apps somewhat, and Arc’s media engine is excellent for transcodes. But pro users also consider driver certification and app vendor support – NVIDIA and AMD have pro driver options (e.g., NVIDIA’s Studio driver, AMD’s Pro drivers and Radeon Pro GPUs) which are certified for Maya, CAD, etc. Intel doesn’t yet have that pedigree in pro apps, so Arc is more for hobbyist creators than for, say, a 3D animation studio pipeline.

- VR and Others: All brands support VR headsets (via OpenVR or Oculus APIs). Historically NVIDIA had an upper hand in VR performance at the very high end (and features like VRWorks), but AMD’s GPUs work perfectly fine for VR and even Intel Arc can run a VR headset – though I would be cautious about Intel for VR just due to any latency or driver hiccup potentially affecting the experience. For multimedia, all support HDCP, multi-monitor, etc. Minor note: Gamers Nexus pointed out Intel Arc had some monitor compatibility issues at launch (some older monitors wouldn’t show BIOS/boot on Arc GPUs) gamersnexus.net gamersnexus.net, but these were edge cases and partly resolved (just something that early Arc adopters encountered).

- Community and Driver Features: Enthusiast communities often develop around GPUs. NVIDIA has a loyal following and lots of forums where people share optimized settings, custom mods (e.g., shunt modding a 4090 for more power, custom cooling). AMD’s community is also strong – some users love AMD for their openness and for championing the underdog. AMD’s driver issues in the past have made their community very proactive in bug reporting and workarounds, and AMD has been responsive in many cases (for instance, the community and tech media called out the 7900 XTX hotspot issue, and AMD acknowledged and addressed it through RMAs tomshardware.com). Intel’s Arc community is perhaps the most passionate in 2023–2025: these are early adopters who actively test new driver betas, report issues, and celebrate each performance gain. Intel engaging with them through social media and driver notes has been a positive sign. So while not a technical spec, community support can be a factor – AMD and Intel’s smaller user base means you might have to search a bit more for fixes if you encounter a unique issue, whereas with NVIDIA, chances are someone else had that issue too given their large user base.

In conclusion, NVIDIA offers the most polished and widely compatible software experience, ideal for those who want things to “just work” across games and professional apps. AMD’s software has nearly caught up for gaming needs, even surpassing NVIDIA in some user-friendliness aspects, and will serve the vast majority of users well while also catering to open-source aficionados. Intel’s software is the least mature, but it’s improving at a rapid clip – adventurous users can enjoy being part of that evolution (and benefit from Intel’s frequent optimizations), whereas more conservative users might prefer to wait until Intel’s ecosystem is as battle-tested as the other two.

Recent News, Releases, and Controversies

The GPU world never stays still. Here are some notable recent developments and dramas as of mid-2025 that consumers should know:

- New GPU Generations on the Horizon: We are in a transition period in 2025. NVIDIA has begun launching its next-generation RTX 50-series (“Blackwell” architecture) cards. In early 2025 they announced the architecture and even launched a few models (the ultra-high-end RTX 5090 and 5080, and upper-mid RTX 5070 Ti/5070) tomshardware.com. Similarly, AMD has started rolling out its successor to RDNA3 – confusingly referred to as the RX 9000-series (likely RDNA4). Cards like the RX 9070 XT and RX 9070 (which would slot in around the current 7700/7800 level) launched in Q1 2025 tomshardware.com. These new generation cards promise even higher performance and new features (for example, rumors of NVIDIA using even more advanced AI cores, and AMD possibly introducing chiplet-based ray tracing blocks), but availability has been severely limited. Both NVIDIA and AMD had a bit of a paper launch – demand far outstripped supply, in part because both underestimated how quickly their RTX 40 and RX 7000 stock would dry up tomshardware.com. NVIDIA’s new 50-series, in particular, was affected by the ongoing AI boom: the same chips (or manufacturing capacity) that could go into gaming GPUs are being bought up for AI/data-center uses. This led to a situation where “you can’t buy [the new GPUs] at MSRP, or sometimes even at all,” as Tom’s Hardware described tomshardware.com. In fact, many previous-gen cards (40-series and 7000-series) also went out of stock earlier than expected, leaving a gap in the market tomshardware.com. The bottom line for consumers is that as of mid-2025, the “popular” GPUs are still the RTX 40-series and RX 7000-series, since the newer ones are hard to get (and very expensive on the scalper market). If you do have your eye on upcoming tech, late 2025 might bring more accessible models like NVIDIA’s RTX 5060 etc. (NVIDIA was reportedly set to launch an RTX 5050 at $249 soon, albeit with modest performance gains tomshardware.com), and AMD’s lower RX 9000s. 
Also, Intel’s next-gen Arc Battlemage should fully arrive in 2025 – the Arc B-series cards B580 and B570 are already out in limited quantities, with more to come, potentially giving NVIDIA/AMD some fresh competition in mainstream segments.

- Pricing Drama and Adjustments: We touched on the RTX 4080 12GB fiasco which was a major self-inflicted wound for NVIDIA’s image – it was seen as an attempt to “upsell” a 4070-class card as a more expensive 4080 by name trickery. The community’s loud backlash forced NVIDIA to cancel and rebrand it tomshardware.com. Another pricing controversy was the initial high prices of midrange cards in this generation – e.g., the RTX 4060 Ti 8GB at $399 was widely slammed as too expensive for what it offered techspot.com. AMD also had a smaller scale issue with the RX 7900 XT being only $100 cheaper than the XTX for notably lower performance – many felt it should have been priced a lot less (and indeed, AMD quietly dropped its price after launch by around $100 to make it more attractive). Fast forward to 2025, and a new kind of pricing drama emerged: due to massive demand for GPUs in AI training (NVIDIA sells A100/H100 GPUs for servers in droves), gamers started feeling the squeeze in supply. Additionally, global factors like silicon supply constraints and tariffs on tech imports have kept prices high tomshardware.com. We’re not in the 2021 crypto mining craziness anymore – you can find GPUs in stock – but MSRPs have generally stayed high and actual retail often higher. Many enthusiasts hope that Intel’s competition or a dip in AI demand might force more price drops. There’s also chatter about “price corrections” with next-gen (for instance, if RTX 50-series brings huge performance leaps, NVIDIA might shuffle pricing to not make 40-series look bad), but that remains to be seen.

- Launch Shortages and Supply Issues: As noted, early 2025 saw launch shortages for both NVIDIA and AMD new cards. Cards selling out in minutes, followed by inflated reseller prices – a scene unfortunately familiar from past years. One twist is that this time it isn’t just crypto miners or gamers; it’s also AI labs and cloud providers indirectly causing shortages by gobbling up TSMC’s 5nm wafers. Another shortage anecdote: in late 2022 and 2023, when these 40-series/7000-series launched, the very top-end (NVIDIA 4090, AMD XTX) had tight availability initially (4090s were hard to get for months due to demand), whereas some other models flooded shelves (e.g., the ill-fated RTX 4080 12GB – after rebrand, the 4070 Ti – had decent availability because demand was weaker at that price). AMD’s reference RX 7900 XTX ran into a different kind of “shortage” when they paused shipments briefly to investigate the vapor chamber cooling issue – but they handled RMAs and it didn’t become a long-term supply problem tomshardware.com. Meanwhile, Intel Arc’s launch was small-scale; availability was limited to a few regions at first (for example, Arc A770 was hard to find in some countries), but by 2023 Intel partnered with more board vendors to produce Arc cards, improving distribution. Still, if you compare the shelf presence: NVIDIA and AMD cards are everywhere, Intel is still not in every store. We expect that to change if Battlemage succeeds.

- Controversies and Tech Issues: We already talked about the 12VHPWR cable melting controversy on the RTX 4090 pcworld.com, which was the biggest negative headline of late 2022. Following that, NVIDIA (along with partners) was named in class-action suits over the melting connectors. Ultimately it appears that user error (not fully plugging in the connector) was a main cause, and NVIDIA updated the connectors and offered free replacements, so the issue blew over. AMD’s 7900 XTX overheating issue in early 2023 was another controversy: some reference cards would hit a 110°C junction temperature and throttle. After investigations (notably by Igor’sLAB and der8auer), it was found that the vapor chamber had insufficient liquid in some batches hardforum.com. AMD admitted the fault and arranged RMAs tomshardware.com. While unfortunate, the transparent handling earned AMD some goodwill, and the problem only affected “a limited number” of cards tomshardware.com. Intel’s Arc had a quieter controversy: rumors that Intel might cancel Arc due to the troubled launch and financial losses. In late 2022 some reports speculated Intel would axe the graphics division, but Intel reiterated its commitment and followed through with driver improvements and plans for Battlemage. By 2025, those rumors have subsided, and Arc is here to stay, it seems. There were also driver controversies. In early 2023, an AMD driver installed during a specific Windows update scenario could corrupt the OS (the fix: don’t update drivers while Windows Update is running). NVIDIA reportedly had a bug where its software (GeForce Experience) could inadvertently limit CPU performance by engaging the wrong performance profile; again, this was quickly patched. These incidents, while resolved, underscore that complex driver software can have hiccups for any vendor.

- Standout Innovations and Milestones: On a more positive note, each company achieved some cool milestones. NVIDIA’s DLSS 3 Frame Generation was a highlight of the Ada generation, doing something no one had done in games at scale (inserting AI-generated frames to boost smoothness). This has been a real selling point for the RTX 40-series; as of mid-2025, over 50 games support DLSS 3 and the number is growing tomshardware.com. AMD’s big innovation was the chiplet design in the RX 7900 XTX/XT, the first time a gaming GPU split its silicon into a Graphics Compute Die (GCD) plus separate memory/cache chiplets. This was hailed as a technical achievement that could pave the way for more scalable GPU designs (possibly helping AMD offer high VRAM and decent yields). AMD also introduced Smart Access Memory (SAM) in the prior generation (its branding of Resizable BAR, which Intel and NVIDIA support too) and continued leveraging its synergy with Ryzen CPUs (SmartAccess Video, etc.). Intel’s standout was bringing AV1 encoding to the masses first and pushing an open approach (it even open-sourced its encoder for others to use). Intel Arc also proved that a newcomer can implement hardware ray tracing and AI cores on its first attempt, which is technically impressive. Another industry-wide milestone is the use of 3D-stacked memory and cache. It is not in these consumer cards yet (stacked HBM memory appears on data-center GPUs such as NVIDIA’s Hopper), but AMD’s chiplet approach hints at a future of stacking more on GPUs. And let’s not forget the AI boom that has continued through 2024 and 2025: AI image generation and large language models drove huge demand for GPUs and showed how important GPU tech is beyond gaming. NVIDIA’s H100 became the poster child for AI, sometimes dubbed “the chip that launched a thousand startups.” AMD is fighting back in the AI space with the Instinct MI300 family (which includes the MI300A, a CPU+GPU APU for servers).
While these are data-center parts, the trend trickles down: gamers now care whether their GPU can run AI models for fun (Stable Diffusion on the GPU to make art, for example). Here, the 4090’s 24GB of memory and NVIDIA’s CUDA libraries have been very enabling, and you can run many AI models at home on a 4090. AMD cards can do it too (some frameworks support ROCm on Windows or Linux for Stable Diffusion), but fewer tutorials exist. Intel Arc can also run AI models via OpenVINO or DirectML, but with 16GB it may be limited on very large models.

- Market Trends: AMD’s market share in discrete GPUs had fallen to historic lows by some reports in 2024 (around 10-15% vs NVIDIA’s dominance) tomshardware.com. Intel’s entry could shift this; even if Intel grabs only a small share, it means more competition. The good news for consumers is that no one is resting easy: AMD is slashing prices, NVIDIA is having to adjust to criticism, and Intel is pushing value, all of which benefits buyers. We’ve also seen cross-pollination of tech: features like ray tracing and upscalers are now standard across all brands (where once they were NVIDIA-only). Looking ahead, we might see more convergence or standardization (for instance, perhaps an open standard for frame generation; NVIDIA is reportedly pushing for OS-level frameworks).
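To put rough numbers on the earlier point about running AI models at home on 24GB versus 16GB cards, here is a minimal back-of-the-envelope sketch. It only counts the memory needed to hold a model's weights (parameter count times bytes per parameter). The function names, the 7-billion and 13-billion parameter sizes, and the 80% usable-memory headroom are illustrative assumptions, not figures from any vendor or benchmark, and real usage will be higher once activations and framework overhead are included.

```python
# Rough weights-only VRAM estimate. This is a simplification: it ignores
# activations, caches, and framework overhead, so treat results as a lower bound.

# Bytes needed to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weights_gib(params_billions: float, precision: str) -> float:
    """Memory (in GiB) needed just to hold the model weights."""
    total_bytes = params_billions * 1e9 * BYTES_PER_PARAM[precision]
    return total_bytes / 2**30


def fits(params_billions: float, precision: str, vram_gib: float,
         headroom: float = 0.8) -> bool:
    """True if the weights fit in a fraction of VRAM.

    `headroom` is an assumed rule of thumb (80% of VRAM usable for weights),
    not a measured value.
    """
    return weights_gib(params_billions, precision) <= vram_gib * headroom


if __name__ == "__main__":
    # Hypothetical model sizes, checked against 16 GiB and 24 GiB cards.
    for size in (7, 13):
        for precision in ("fp16", "int4"):
            need = weights_gib(size, precision)
            print(f"{size}B @ {precision}: ~{need:.1f} GiB | "
                  f"16 GiB card: {fits(size, precision, 16)} | "
                  f"24 GiB card: {fits(size, precision, 24)}")
```

Under these assumptions, a hypothetical 7-billion-parameter model at 16-bit precision fits comfortably on a 24GB card but is tight on a 16GB one, while 4-bit quantization brings even larger models within reach of both.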

In summary, the recent news and controversies paint a picture of a vibrant, if sometimes volatile, GPU market. There have been missteps (like NVIDIA’s naming blunder and AMD’s cooler issue), and there have been triumphant leaps (like real-time path tracing showcases and new architectures). As a consumer in mid-2025, it’s a great time to be into graphics: whether you choose NVIDIA, AMD, or Intel, you’re getting an incredibly advanced piece of tech. Just go in informed – know each brand’s quirks and history – and you’ll be able to navigate the GPU landscape and choose the card that best fits your needs and budget.

Conclusion

The GPU wars of 2025 have given us an abundance of choice and performance. NVIDIA holds the performance crown and offers a gold-plated ecosystem of features (ray tracing excellence, DLSS 3, broad software support) – ideal if you demand the absolute best and don’t mind paying a premium. AMD’s Radeon counters with a value-driven onslaught: you get a huge amount of gaming performance per dollar, more than enough for high-end gaming, plus advantages like lower power draw and ample VRAM, all while steadily closing the gap in features. It’s a compelling option for gamers who want high-end capabilities without the wallet pain of NVIDIA’s top-tier prices (just remember AMD lags in ray tracing and some niche software optimizations). Intel’s Arc emerges as the wildcard: it’s the budget champion that’s also pushing technology forward (first with AV1, stylish designs, etc.). Arc GPUs are a breath of fresh air for the lower-cost segment and have proven that more competition is always a good thing – they’re a viable choice for mainstream gaming if you’re tech-savvy and patient with drivers, and they’ll only get better with time.

For most consumers today: if you’re a competitive gamer or content creator who prioritizes stability and the highest performance (especially in new tech like ray tracing or AI workloads), NVIDIA GeForce RTX is a safe bet – just be ready to invest a bit more. If you’re a gamer looking for maximum frames per dollar and don’t mind forgoing the last 10-20% of extreme performance or some proprietary features, AMD Radeon RX cards will likely give you the most satisfaction for your money. And if you’re an early adopter or on a tighter budget but still want modern features, Intel Arc can be a rewarding choice that keeps improving and nudges the industry toward more openness.

One thing is certain: the GPU space in 2025 is incredibly exciting. From photorealistic graphics with ray tracing, to AI that can upscale or even generate frames, to ever-improving efficiency, today’s GPUs are leaps and bounds beyond what we had just a few years ago. And with new releases on the horizon from all three players, the competition is set to heat up even further – meaning more innovation and potentially better prices for consumers. The ultimate winner of the GPU wars is hopefully you, the user, who now has more capable choices than ever before. Happy gaming (and rendering, and streaming) on whatever GPU you choose!

Sources:

- Tom’s Hardware – GPU Benchmarks & Hierarchy 2025 tomshardware.com

- Tom’s Hardware – Best GPUs for Gaming 2025 (July 2025 update) tomshardware.com

- Tom’s Hardware – Nvidia RTX 4090 Founders Edition Review (Jarred Walton) tomshardware.com

- ThePCEnthusiast – RTX 4090 vs RX 7900 XTX Comparison thepcenthusiast.com

- TechSpot – RTX 4090 vs 7900 XTX Benchmarks techspot.com

- TechSpot – Nvidia RTX 4060 Ti 8GB Review (Steve Walton) techspot.com

- Gamers Nexus – Intel Arc “One Year Later” Review gamersnexus.net

- Tom’s Hardware – Intel Arc B580 performance commentary tomshardware.com

- Tom’s Hardware – AMD RX 7900 XTX Review (RDNA3 launch) tomshardware.com

- Tom’s Hardware – Nvidia “unlaunches” 4080 12GB / RTX 4070 Ti release tomshardware.com

- PCWorld – Nvidia responds to 4090 cable melting (Gordon Mah Ung) pcworld.com

- Tom’s Hardware – AMD admits 7900 XTX vapor chamber issue tomshardware.com

- Tom’s Hardware – Video Encoding Quality Tested (AV1 vs HEVC vs H264) tomshardware.com

- CGDirector – Nvidia vs AMD GPUs in rendering (Blender, Redshift) cgdirector.com

- TheFPSReview – Intel Arc driver gains discussion gamersnexus.net linustechtips.com