Key Facts

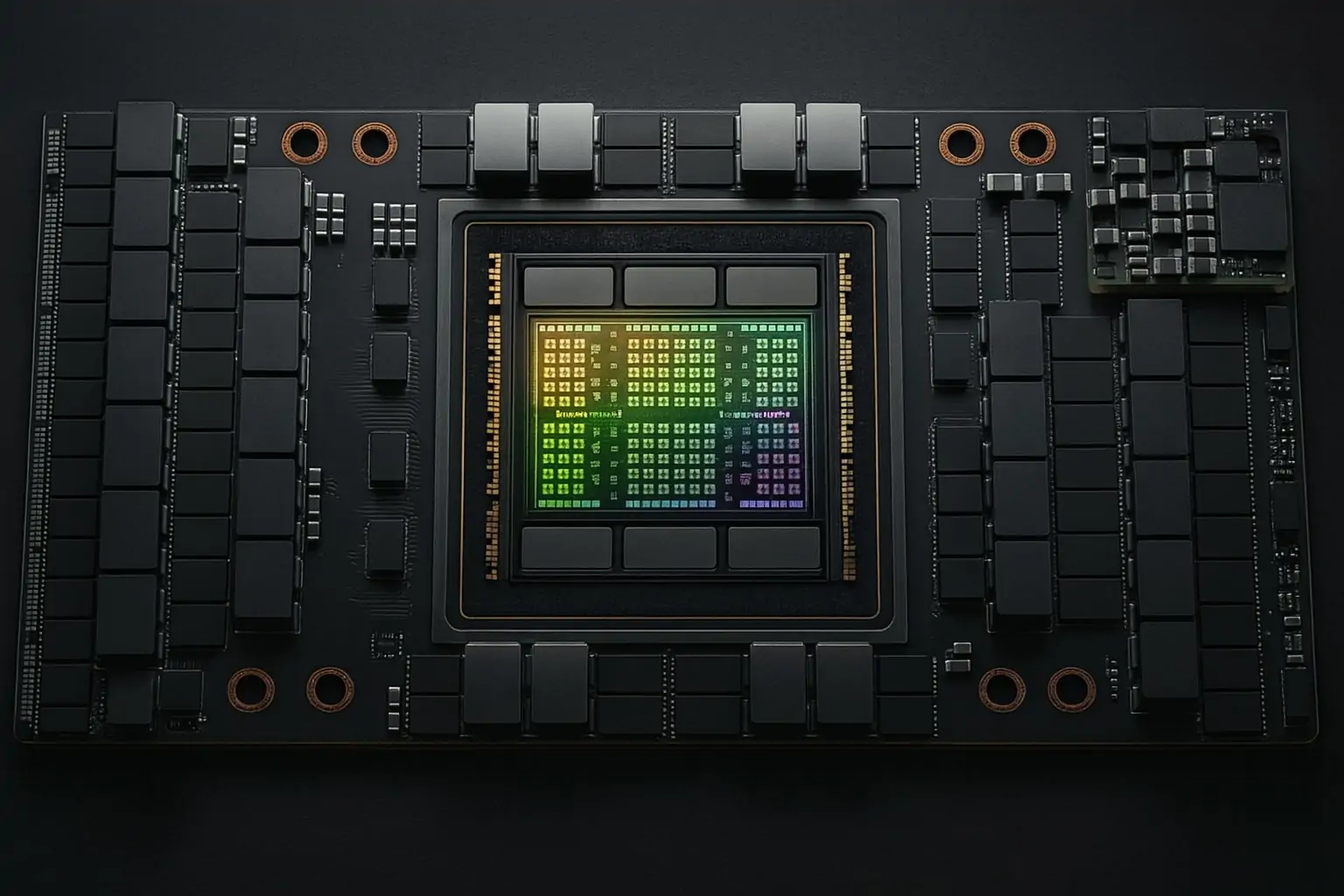

- Surging Demand: Explosive AI growth has pushed HBM (High-Bandwidth Memory) demand to unprecedented levels, with industry forecasts expecting HBM revenues to roughly double from ~$17–18 billion in 2024 to ~$34–35 billion in 2025 yolegroup.com nextplatform.com. Major buyers like NVIDIA are consuming over half of all HBM output to feed AI GPUs tomshardware.com.

- HBM3 → HBM3E → HBM4: The current generation HBM3 and enhanced HBM3E (used in flagship AI chips like NVIDIA’s H100/H200 and AMD MI300 series) dominate 2024–25 deployments. HBM3E was first mass-produced in early 2024 trendforce.com and is quickly becoming the memory of choice for new AI systems. Next-gen HBM4 is on the horizon – Micron began sampling HBM4 in mid-2025 (36GB stacks at 2 TB/s) tomshardware.com, and all three major vendors plan volume HBM4 production by 2026 tomshardware.com.

- Supply Expansion Race: SK hynix, Samsung, and Micron are racing to boost HBM output with new fabs and capacity upgrades. SK hynix (the HBM market leader with ~50% share tomshardware.com) is finishing a new M15X fab by late 2025, which will raise its HBM capacity by ~20–30% trendforce.com. Samsung is ramping aggressively – it plans to boost HBM output 2.5× in 2024 and double it again in 2025, even repurposing an old fab for HBM production trendforce.com. Micron, a later entrant, has pre-sold its entire 2024–2025 HBM supply and is scaling up its Hiroshima plant and packaging lines to catch up tomshardware.com.

- Sold-Out Through 2025: Virtually all HBM supply for 2025 is already locked up in long-term contracts with big customers. “The company’s supply of HBM has been sold out for 2024 and for most of 2025,” SK hynix’s CEO revealed tomshardware.com. Micron’s CEO likewise noted “our HBM is sold out for calendar 2024 and 2025, with pricing already determined” for those years tomshardware.com. In fact, 2025’s HBM output was spoken for well in advance, and even much of 2026 capacity is likely allocated to AI players nextplatform.com.

- Spot vs. Contract Pricing: Because supply is so tight, HBM is primarily obtained via contracts – AI firms must secure supply in advance. Spot market HBM is scarce, and any available units command steep premiums. Contract prices have been on the rise: analysts noted HBM contract prices increasing by mid-single-digits to ~10% in 2024–25 amid the crunch trendforce.com. NVIDIA’s deep pockets give it leverage – it can pay top dollar for HBM to secure supply nextplatform.com – but even NVIDIA must leave some HBM for others to avoid regulatory or legal issues nextplatform.com. Looking ahead, with massive capacity coming online, analysts warn of the first potential HBM price drop in 2026 if supply finally outpaces demand trendforce.com.

- Impact on AI System Costs: Sky-high HBM prices are driving up AI server costs. High-Bandwidth Memory is now one of the most expensive components in an AI accelerator – for example, industry tear-downs estimate the HBM on an NVIDIA H100 GPU board costs on the order of $2,000, comprising a huge portion of the total hardware cost ariat-tech.com. In SK hynix’s case, HBM sales made up 77% of the company’s revenue by Q2 2025 blocksandfiles.com, underscoring how much value is concentrated in these chips. Outfitting an AI server with 8 GPUs means paying for hundreds of gigabytes of HBM, so memory pricing heavily impacts the price tag of AI infrastructure for cloud providers and enterprises.

- Who’s Driving Demand: The AI arms race among tech giants is fueling this HBM boom. NVIDIA – by far the largest HBM consumer – uses HBM3/3E across its AI accelerators (each H100/H200 carries 80GB+ of HBM) tomshardware.com. AMD’s Instinct accelerators also carry large HBM stacks: HBM3 on the MI300X, HBM3E on the MI325X and MI350, and HBM4 on the forthcoming MI400 nextplatform.com. Cloud hyperscalers like Google, Amazon, Meta, and Microsoft are developing their own AI chips (TPUs, custom accelerators) that rely on HBM as well tomshardware.com. This broad-based rush for HBM has memory makers expecting 30%+ annual growth in HBM demand for years tomshardware.com. Even traditional DRAM suppliers are transforming – SK hynix calls itself a “full-stack AI memory provider” now, and projects the HBM market to reach “tens of billions of dollars” by late decade blocksandfiles.com tomshardware.com.
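As a quick sanity check on the “roughly double” forecast in the first bullet, the sketch below computes the growth range implied by the quoted revenue figures. It uses only those ranges (in billions of USD) and pairs the extremes to bound the result; no other data is assumed.

```python
# Implied year-over-year HBM revenue growth from the ranges quoted above
# (~$17-18B in 2024, ~$34-35B in 2025). Figures in billions of USD.

def growth_pct(start: float, end: float) -> float:
    """Percentage change from start to end."""
    return (end / start - 1.0) * 100.0


rev_2024 = (17.0, 18.0)
rev_2025 = (34.0, 35.0)

# Most conservative pairing: high 2024 base, low 2025 figure.
low = growth_pct(rev_2024[1], rev_2025[0])
# Most aggressive pairing: low 2024 base, high 2025 figure.
high = growth_pct(rev_2024[0], rev_2025[1])

print(f"Implied 2025 growth: {low:.0f}% to {high:.0f}%")
```

Either way the implied growth lands close to 100%, which is what “roughly double” means here.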

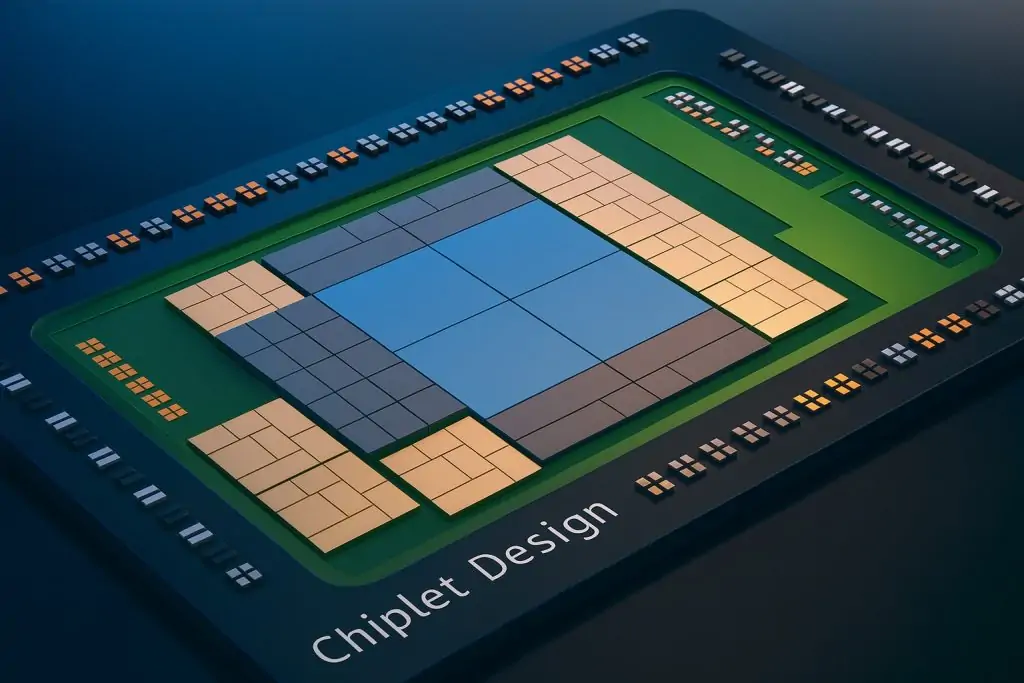

HBM Node Transition: From HBM3 to HBM4

High-Bandwidth Memory has evolved rapidly to keep pace with AI needs. HBM3 – introduced around 2022 – brought huge leaps in bandwidth and capacity per stack, and became the workhorse memory for top-tier AI accelerators such as NVIDIA’s H100 (its predecessor, the A100, used HBM2E). By 2023, attention shifted to HBM3E, an enhanced generation offering even higher performance. In early 2024, SK hynix became the first to mass-produce HBM3E (sometimes dubbed the 5th-generation HBM) trendforce.com, starting with 8-Hi 24GB stacks for NVIDIA and adding 12-Hi 36GB stacks later in the year. HBM3E delivers ~1.2 TB/s per stack (versus ~0.8–1.0 TB/s for HBM3), helping power new chips like NVIDIA’s H200 (an HBM3E upgrade of the H100) and AMD’s MI325X. Samsung and Micron also jumped in: Samsung was validating its HBM3E with NVIDIA and AMD in 2024, and Micron qualified its HBM3E for NVIDIA’s GH200 Grace Hopper platform trendforce.com. By late 2024 and into 2025, HBM3E has become the default memory for cutting-edge AI systems, given its speed and capacity advantages – industry reports call it “the memory of choice” for 2025 AI deployments.
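The per-stack bandwidth figures above follow from a simple product: interface width times per-pin data rate. The sketch assumes the standard 1024-bit HBM3/HBM3E interface, the JEDEC top speed grade of 6.4 Gb/s for HBM3, and 9.6 Gb/s as a representative HBM3E pin rate (vendors quote roughly 9.2 to 9.8 Gb/s); these inputs are assumptions, not figures from the article’s sources.

```python
def stack_bandwidth_gbs(interface_bits: int, pin_rate_gbps: float) -> float:
    """Peak bandwidth of one HBM stack in GB/s: bus width x per-pin rate / 8 bits per byte."""
    return interface_bits * pin_rate_gbps / 8


HBM_BUS_BITS = 1024  # standard interface width for HBM3 and HBM3E

hbm3 = stack_bandwidth_gbs(HBM_BUS_BITS, 6.4)   # JEDEC HBM3 top speed grade
hbm3e = stack_bandwidth_gbs(HBM_BUS_BITS, 9.6)  # assumed representative HBM3E pin rate

print(f"HBM3:  {hbm3:.1f} GB/s")
print(f"HBM3E: {hbm3e:.1f} GB/s")
```

At these rates HBM3 comes to about 0.8 TB/s and HBM3E to about 1.2 TB/s per stack; slower speed bins give proportionally less.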

Meanwhile, HBM4 (the next-generation, 6th-gen HBM) is looming on the horizon. All three suppliers have accelerated development under pressure from their big customers. Micron shipped sample HBM4 devices to customers in June 2025 tomshardware.com. Micron’s early HBM4 samples pack 36 GB per stack with a blazing 2 TB/s bandwidth – over 60% higher than HBM3E’s throughput tomshardware.com. SK hynix moved even earlier: it disclosed it had pulled in its HBM4 timeline by six months at NVIDIA’s request, shipped its first 12-Hi HBM4 samples in March 2025, and aims to start volume shipments by end of 2025 trendforce.com trendforce.com. Samsung similarly plans to launch HBM4 using its 1c (6th-gen) DRAM process by late 2025 trendforce.com. Mass production for HBM4 across the industry is slated for 2026, aligning with the next wave of AI accelerators (NVIDIA’s codenamed “Rubin” data-center GPU in 2026 is expected to be one of the first using HBM4) tomshardware.com tomshardware.com. In short, 2025 is a transition period: HBM3 and 3E dominate shipments, but HBM4 will begin appearing in pilot quantities, gearing up for a broader HBM4 rollout in 2026 alongside new AI silicon.
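The HBM4 figures in this paragraph can be cross-checked with the same bandwidth arithmetic. This sketch assumes a 12-high stack and the 2048-bit interface that HBM4 doubles to (neither is stated in the article), and takes ~1.2 TB/s as the HBM3E baseline.

```python
# Inputs from the paragraph above, plus two labeled assumptions.
STACK_GB = 36          # Micron HBM4 sample capacity per stack
HBM4_TBPS = 2.0        # quoted HBM4 bandwidth per stack
HBM3E_TBPS = 1.2       # HBM3E per-stack bandwidth baseline
DIES_PER_STACK = 12    # assumption: 12-high stack
INTERFACE_BITS = 2048  # assumption: HBM4's doubled interface width

gb_per_die = STACK_GB / DIES_PER_STACK           # capacity of one DRAM die (GB)
gbit_per_die = gb_per_die * 8                     # same capacity in gigabits
uplift_pct = (HBM4_TBPS / HBM3E_TBPS - 1) * 100   # bandwidth gain over HBM3E

# Per-pin data rate needed to reach the quoted bandwidth (TB/s -> GB/s -> Gb/s, split across pins).
pin_rate_gbps = HBM4_TBPS * 1000 * 8 / INTERFACE_BITS

print(f"{gb_per_die:.0f} GB ({gbit_per_die:.0f} Gb) per die")
print(f"Bandwidth uplift over HBM3E: {uplift_pct:.0f}%")
print(f"Required pin rate: {pin_rate_gbps:.2f} Gb/s")
```

The ~67% result is consistent with the “over 60%” claim, and 36 GB across 12 dies implies 24 Gb DRAM dies. A 2048-bit bus needs only about 7.8 Gb/s per pin to reach 2 TB/s, slower than HBM3E’s per-pin rate, so the gain comes mainly from doubling the interface width.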

Supply: Major Memory Makers Add Fabs and Capacity

The unprecedented demand for HBM has the three DRAM giants – SK hynix, Samsung, and Micron – scrambling to expand supply. All are investing billions to boost both front-end fabrication capacity and back-end packaging (since HBM involves stacking and advanced 3D assembly).

- SK hynix: As the current HBM leader (roughly half the market by revenue tomshardware.com), SK hynix is in full expansion mode. It kicked off mass production of HBM3E in Q1 2024 – a move that helped it surge past Samsung in overall memory sales blocksandfiles.com. Now Hynix is building a brand-new dedicated fab, M15X in Cheongju (Korea), slated for completion by November 2025 trendforce.com. This ~$3.8B facility will start producing DRAM (with a focus on HBM and other advanced nodes) in late 2025, and when fully ramped it will boost Hynix’s HBM output capacity by an estimated 20–30% trendforce.com trendforce.com. In the meantime, SK hynix is also retrofitting existing fabs for HBM: its M16 fab in Icheon produces most of its HBM today, and it’s upgrading its older M14 line to 1α/1β processes to handle more HBM and DDR5 as well ariat-tech.com ariat-tech.com. To relieve packaging bottlenecks, Hynix doubled the backend equipment for HBM3 assembly in 2023 trendforce.com. Hynix is even looking overseas: it has signaled plans for a state-of-the-art HBM manufacturing facility in Indiana, USA, to supply HBM to NVIDIA GPUs fabricated by TSMC trendforce.com. With these moves, SK hynix told investors it expects to double its HBM revenue in 2025 vs 2024 blocksandfiles.com – a reflection of both rising output and still-hot demand.

- Samsung: The South Korean memory giant was initially behind Hynix in the HBM3 race, but it is determined to catch up and win more of NVIDIA’s business. Samsung took a major step by expanding HBM3 production in late 2023, and at CES 2024 Samsung’s memory VP Han Jin-man announced plans to boost HBM output 2.5× in 2024 and then double again in 2025 trendforce.com. The company has restarted construction on its huge Pyeongtaek P4 fab (which had been paused during the memory downturn) specifically to ramp new DRAM nodes and HBM. Samsung’s investment in 6th-gen “1c” DRAM at P4 aims to begin mass production by mid-2025, with an eye toward HBM4 in H2 2025 on that node trendforce.com. In parallel, Samsung converted a dormant LCD factory in Cheonan, Korea into additional HBM packaging capacity, and is spending another KRW 700 billion–1 trillion (~$0.5–0.75B) on new advanced packaging lines trendforce.com. Thanks to these efforts, Samsung projects its maximum HBM production could reach 150k–170k HBM units per month by late 2024 trendforce.com (though it’s not publicly disclosed how many GB that entails). Samsung’s aggressive scaling is driven by strategic goals: it reportedly even slashed HBM3 prices to woo NVIDIA into sourcing more from Samsung tomshardware.com, as SK hynix had a quasi-exclusive supply to NVIDIA in early 2023. By expanding supply and offering competitive pricing, Samsung aims to increase its HBM market share (which hovered around 45% in 2023) and defend its overall memory leadership.

- Micron: The smallest of the “big three” DRAM players, Micron was late to HBM but is now all-in on catching up via HBM3E and HBM4. Micron’s strategy was to skip HBM3 and leapfrog straight to tall, high-speed stacks: it announced HBM3E samples in mid-2023 and secured design wins in NVIDIA’s latest platforms (GH200/H200) trendforce.com. Micron’s CEO Sanjay Mehrotra has highlighted huge growth expectations – citing an internal TAM (total market) estimate that HBM demand will jump from ~$4B in 2023 to $25B+ by 2025 luckboxmagazine.com (this was likely a bit conservative, given newer estimates now put the 2025 HBM market at $34B). To capitalize, Micron is expanding capacity across multiple sites. Its fab in Hiroshima, Japan handles HBM wafer fabrication; Micron is increasing that HBM wafer throughput to about 25,000 wafers per month by end of 2024 ariat-tech.com. Additionally, Micron is reallocating some DRAM capacity in its Taiwan fabs (Linkou, Taichung) to advanced 1β node production for HBM and DDR5 ariat-tech.com ariat-tech.com. By end of 2025, Micron’s goal is to meaningfully grow its share of HBM output – industry watchers speculate Micron could reach 20–25% HBM market share by 2026 (up from a small share in 2022) investing.com. All of Micron’s 2024 and 2025 HBM production is already sold out under long-term agreements tomshardware.com, so it is focusing on executing its ramps and improving yields. The company is also investing heavily in R&D for future nodes (1γ, 1δ EUV processes) and has plans for a new leading-edge DRAM fab in the U.S., backed by CHIPS Act incentives, which could eventually produce HBM later in the decade trendforce.com. In short, Micron is betting that by being early with HBM4 and leveraging its tech advancements (it touts that its HBM stacks are “taller and faster”), it can carve out a solid slice of this fast-growing market.
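Because the expansion plans above are stated as ratios rather than absolute volumes, the sketch below normalizes each company’s baseline to 1.0 and simply compounds the quoted multipliers. It illustrates the stated plans only; it is not a capacity model, and ramp timing and yields are ignored.

```python
# Normalized baselines: only the ratios come from the supply section above.
samsung_2023 = 1.0
samsung_2024 = samsung_2023 * 2.5   # "boost HBM output 2.5x in 2024"
samsung_2025 = samsung_2024 * 2.0   # "then double again in 2025"

# SK hynix: M15X adds an estimated 20-30% once fully ramped.
hynix_base = 1.0
hynix_after_m15x = (hynix_base * 1.20, hynix_base * 1.30)

print(f"Samsung 2025 output vs 2023: {samsung_2025:.1f}x")
print(f"SK hynix after M15X: {hynix_after_m15x[0]:.1f}x to {hynix_after_m15x[1]:.1f}x")
```

Samsung’s two steps compound to roughly five times its 2023 output; SK hynix’s M15X figure is a one-time uplift on top of whatever its existing fabs deliver.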

Despite these expansions, supply remains tight in the near term. Even with capacity additions, ramp-up takes time and yield tuning – so through 2025 the market will likely remain supply-constrained. The collective efforts, however, should start bearing fruit by 2026, when industry HBM output may double vs 2024 levels ariat-tech.com. By then, we might finally see supply catch up to the breakneck demand growth.

Pricing Dynamics: Contract vs Spot in a Seller’s Market

The HBM supply crunch has inevitably led to soaring prices – though the dynamics differ from conventional memory markets. In commodity DRAM, we often see pronounced spot price swings and short-term gluts or shortages. HBM, by contrast, is a more strategic product: it’s often co-developed with customers, sold via long-term contracts, and wasn’t widely traded on spot markets in early years. However, the recent feeding frenzy for HBM has effectively given suppliers pricing power and driven up both contract and any spot quotations.

Contract Pricing: Because major buyers (like NVIDIA, AMD, and cloud giants) cannot risk running out of HBM, they negotiate supply contracts well in advance – often securing a year or more of output at fixed volumes and prices. For 2023–2024, those contract prices were high but relatively stable. As demand kept climbing, 2025 contracts have generally been signed at higher price points. TrendForce noted HBM contract prices saw a 5–10% increase heading into 2025 trendforce.com, contributing to a sharp jump in HBM revenue globally. In many cases, buyers essentially pre-paid to reserve capacity – Micron, for example, indicated that pricing for its 2024–25 HBM supply was “already determined” as part of multi-year agreements discussion.fool.com. This means memory makers locked in hefty orders (often with non-refundable clauses) ensuring their new fab investments would be underwritten by guaranteed demand trendforce.com. For suppliers, this lowers risk and encourages the massive capex outlays on new HBM lines. For buyers, it ensures supply but at the cost of less flexibility; they can’t easily reduce orders without penalty if their own demand fluctuates.
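The trade-off described above, guaranteed supply in exchange for reduced flexibility, can be illustrated with a simple take-or-pay model. Everything here is hypothetical: the `HbmContract` class, the 100,000-stack commitment, the $1,000 base price, and the 90% minimum-take clause are made-up values, and only the 10% price increase mirrors the top of the range TrendForce cites.

```python
from dataclasses import dataclass


@dataclass
class HbmContract:
    """Hypothetical take-or-pay HBM supply contract (illustrative only)."""
    committed_stacks: int      # volume the buyer commits to in advance
    price_per_stack: float     # fixed contract price in USD
    min_take_fraction: float   # share of the commitment billed even if unused

    def amount_due(self, stacks_taken: int) -> float:
        """Buyer pays for what it takes, but never less than the contractual minimum."""
        billable = max(stacks_taken, self.committed_stacks * self.min_take_fraction)
        return billable * self.price_per_stack


BASE_PRICE = 1_000.0  # hypothetical prior-year price per stack (USD)
contract = HbmContract(
    committed_stacks=100_000,
    price_per_stack=BASE_PRICE * 1.10,  # 10% contract price increase
    min_take_fraction=0.9,
)

full = contract.amount_due(100_000)  # buyer takes its full commitment
cut = contract.amount_due(60_000)    # buyer's own demand falls by 40%

print(f"Full take:    ${full:,.0f}")
print(f"Reduced take: ${cut:,.0f}")
```

Cutting actual purchases by 40% reduces the bill by only 10%, because the minimum-take clause bills 90% of the committed volume regardless. That asymmetry is what lets suppliers justify new lines against contracted demand.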

Spot Market: Given that virtually all current HBM output is spoken for, the spot market is extremely thin. In 2023, when AI demand exploded unexpectedly, any stray HBM units or canceled orders were snapped up at exorbitant prices by those left short. There were reports of HBM prices on brokers’ markets spiking well above contracted rates during the peak of the shortage (some estimates suggested spot HBM could be 2× or more the contract price in late 2023). One striking data point: an NVIDIA H100 card contains 80 GB of HBM3 – and industry sources estimated the cost of that HBM alone at ~$2,000 in 2023 ariat-tech.com. If accurate, that implies a per-gigabyte price far higher than any standard memory, reflecting the premium buyers are willing to pay to secure supply. However, such spot purchases are rare and mostly anecdotal; the primary way to get HBM is still via allocation from the manufacturer.
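The H100 estimate above translates into an implied per-gigabyte figure with one division. The sketch uses only the ~$2,000 and 80 GB numbers from the text; the 2× multiplier is the upper anecdotal spot estimate mentioned in the same paragraph, applied purely for illustration.

```python
HBM_COST_PER_GPU = 2_000  # USD, industry estimate for the HBM on one H100
HBM_GB_PER_GPU = 80       # HBM3 capacity of an H100

per_gb = HBM_COST_PER_GPU / HBM_GB_PER_GPU  # implied price per gigabyte
spot_per_gb = per_gb * 2                    # illustrative: spot at ~2x that level

print(f"Implied price: ${per_gb:.0f}/GB")
print(f"At a 2x spot premium: ${spot_per_gb:.0f}/GB")
```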

In effect, the power dynamic has favored suppliers recently. SK hynix revealed it had no unsold HBM capacity through 2025 tomshardware.com – a clear sign that buyers were clamoring even at the prices Hynix set. When one supplier (like SK hynix) is maxed out, buyers turn to the others (Samsung, Micron), which puts upward pressure on those negotiations too. This tightness led to record profitability on HBM products; HBM carries gross margins well above normal DRAM. Analysts estimate HBM gross margins are 50%+ for Micron and peers (versus 30–40% for PC DRAM), and strong HBM sales contributed to Micron’s highest revenues on record in 2025 nextplatform.com nextplatform.com. As a result, memory makers have been disciplined: there’s little incentive to undercut on price or flood the market, especially while each is committing to large investments.

Customer Leverage: That said, major buyers are not completely at mercy. NVIDIA, accounting for an outsized share of HBM demand, has some bargaining leverage. Its deep pockets allow it to pay extra for priority supply nextplatform.com, and it can multi-source between Hynix, Samsung, and Micron, playing them off to some degree. For example, Samsung’s rumored HBM3 price cut in 2023 was aimed at convincing NVIDIA to shift more orders its way tomshardware.com. Big cloud firms also negotiate directly – some invest in joint development (ensuring the HBM meets their specs) or even pre-pay to fund capacity. The dynamic is somewhat like the foundry business for chips: capacity is finite and expensive, so customers may secure it with take-or-pay contracts and sometimes even capital contributions. The payoff for buyers is ensuring they can build their AI hardware on schedule, which for companies like Google or Amazon, is mission-critical.

Future Outlook: The current pricing environment is extremely favorable to suppliers, but could change if the capacity surge overshoots demand in a couple of years. By 2026–2027, supply may finally “catch up” – Goldman Sachs projects that HBM supply growth will outpace demand by 2026, potentially causing a double-digit percentage drop in HBM ASPs (average selling prices) that year trendforce.com. This would be the first significant price reduction in HBM’s history, marking a shift from shortage to surplus. If that happens, we could see a more buyer-friendly market, with contract negotiations featuring price cuts or at least more modest increases. Indeed, TrendForce noted that with yields improving and new entrants (e.g. potentially a Chinese HBM supplier by 2026) the pricing power may tilt towards customers by late 2025 trendforce.com trendforce.com. However, even in that scenario, the introduction of new HBM4 products (initially at a premium price) could keep the overall ASP mix rising trendforce.com – essentially higher-end HBM will debut at top dollar, offsetting discounts on older generations.
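The mix argument at the end of this paragraph is a weighted average: even if each existing product gets cheaper, the blended ASP can rise when enough volume shifts to a pricier new generation. The `blended_asp` helper, the index prices, and the volume shares below are all hypothetical.

```python
def blended_asp(mix: dict[str, tuple[float, float]]) -> float:
    """Weighted-average selling price; mix maps product -> (volume share, price)."""
    total_share = sum(share for share, _ in mix.values())
    if abs(total_share - 1.0) > 1e-9:
        raise ValueError("volume shares must sum to 1")
    return sum(share * price for share, price in mix.values())


# Hypothetical index prices: legacy HBM3E starts at 100.
year1 = {"HBM3E": (1.0, 100.0)}
# Next year: HBM3E price cut 15%, while 40% of volume moves to premium HBM4.
year2 = {"HBM3E": (0.6, 85.0), "HBM4": (0.4, 140.0)}

print(f"Year 1 blended ASP: {blended_asp(year1):.1f}")
print(f"Year 2 blended ASP: {blended_asp(year2):.1f}")
```

In this made-up example the legacy product falls 15%, yet the blended ASP rises about 7%, because 40% of volume moves to a generation priced 40% above the old level.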

For now, in late 2025, HBM prices remain elevated and rising gently, underpinned by robust demand and careful capacity planning. The “feast” of AI-driven demand has banished the usual boom-bust cycle – at least temporarily – in favor of a seller’s market where memory makers confidently talk of “pricing already contracted” and fully booked production tomshardware.com. Buyers large and small have little choice but to accept these terms if they want to secure the memory that powers their AI ambitions.

Impact on AI Servers and Hyperscalers

The ramifications of the HBM crunch extend to anyone trying to build or buy AI servers and accelerators. In modern AI systems, HBM isn’t just a component – it’s a cornerstone of performance, enabling the immense data throughput needed for training large neural networks. But that performance comes at a steep cost, especially in this supply-constrained period, and it is driving AI hardware prices to eye-watering heights.

Take, for example, an NVIDIA HGX server (the kind used in AI supercomputers and cloud clusters): it typically contains 8 H100 GPUs, each with 80 GB of HBM3. That’s 640 GB of HBM in one server, easily $10k+ worth of memory at recent pricing. No wonder a single fully loaded AI chassis can cost on the order of a few hundred thousand dollars. Industry estimates put HBM at nearly half the BOM (bill of materials) cost ariat-tech.com, a share that applies to component cost rather than to the server’s selling price, of which $10k+ of memory is a much smaller fraction. This is a radical shift from past eras, when memory was a relatively small fraction of system cost. It underscores how AI-centric designs have made memory (HBM) as critical and costly as the GPUs or CPUs themselves.
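Combining this server configuration with the ~$2,000-per-H100 HBM estimate cited earlier gives a rough memory bill. The $250,000 server price is a hypothetical stand-in for “a few hundred thousand dollars”; the point is to show how the HBM share depends on whether it is measured against selling price or component cost.

```python
GPUS_PER_SERVER = 8
HBM_GB_PER_GPU = 80
USD_PER_GB = 2_000 / 80   # ~$25/GB, derived from the ~$2,000-per-H100 estimate
SERVER_PRICE = 250_000    # hypothetical selling price of one 8-GPU server (USD)

hbm_gb = GPUS_PER_SERVER * HBM_GB_PER_GPU          # total HBM in the server
hbm_cost = hbm_gb * USD_PER_GB                     # implied memory bill
share_of_price = hbm_cost / SERVER_PRICE * 100     # HBM as % of selling price

print(f"{hbm_gb} GB of HBM, about ${hbm_cost:,.0f}")
print(f"HBM share of server price: {share_of_price:.1f}%")
```

Under these assumptions HBM is roughly 6% of what the buyer pays, so a “nearly half” figure only makes sense against component cost, not against the server’s selling price.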

Leading AI chip providers have acknowledged this in their earnings calls and strategies. NVIDIA’s CEO Jensen Huang has noted that accelerators like the H100 are essentially “memory-bound” – NVIDIA keeps increasing HBM quantities (from 40GB in the A100 to 80GB in the H100 and 141GB in the H200) because larger AI models demand more memory capacity and bandwidth. This means each new GPU generation uses more HBM per board, further amplifying demand. AMD’s MI300X GPU, as another example, carries 192 GB of HBM3, and its upcoming MI350 is said to go up to 288 GB across stacks nextplatform.com. The trend is clear: more HBM per accelerator, multiplied across more accelerators per server, means far more HBM is needed.
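Multiplying the per-device capacities quoted above by a typical eight-accelerator board shows how quickly per-server HBM grows from one generation to the next. The eight-per-server count is an assumption (common for HGX-class systems), and the MI350 figure is the expected capacity reported in the text.

```python
# Per-device HBM capacities (GB) as quoted in the paragraph above.
HBM_GB = {
    "A100": 40,
    "H100": 80,
    "H200": 141,
    "MI300X": 192,
    "MI350": 288,
}
ACCELERATORS_PER_SERVER = 8  # assumption: typical 8-GPU board

# Total HBM in one server for each accelerator type.
per_server = {chip: gb * ACCELERATORS_PER_SERVER for chip, gb in HBM_GB.items()}

for chip, gb in HBM_GB.items():
    print(f"{chip:<7} {gb:>4} GB/device  {per_server[chip]:>5} GB/server")
```

Going from an 8×A100 (40 GB) server to an 8×MI350 server raises HBM per box from 320 GB to about 2.3 TB.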

For hyperscale cloud companies, which might deploy tens of thousands of such AI accelerators, the stakes are huge. Companies like Google, Microsoft, Amazon, and Meta are simultaneously customers and competitors in this arena: they buy HBM-based GPUs from NVIDIA/AMD, but also develop custom AI chips (Google’s TPUs, Amazon’s Trainium, Microsoft’s Maia, etc.) which also rely on HBM. For instance, Google’s TPU v4 uses HBM and Google has been securing supply directly. These hyperscalers have publicly indicated massive increases in AI capital expenditure – a share of which flows to memory vendors as HBM purchases.

One illustrative figure: market research suggests that NVIDIA alone may account for over 50% of all HBM demand in 2025 yolegroup.com, given its dominant share in AI hardware. Add the hyperscalers’ in-house chip needs and other AI startups, and you have an environment where just a handful of end-users are driving the bulk of HBM consumption. This concentration has some side effects. For one, it gives those big players some negotiating power (as discussed in pricing), but it also means if any of them hit a roadblock – say, delays in a GPU launch or a government export restriction – it could temporarily ease demand pressure. Conversely, if AI adoption accelerates even faster (e.g. if every company rushes to add AI servers), we could see demand exceeding even the boosted 2025 supply, putting yet more strain on costs.

From the perspective of AI project managers or CFOs at these companies, HBM prices and availability have become a planning concern. A few years ago, buyers worried mainly about GPU prices, but now if you’re assembling an AI data center, you must consider that memory might be the long-lead or limiting component. Indeed, there were reports in 2023 of accelerator output being gated less by GPU dies than by the supply of HBM stacks (and the advanced packaging that bonds them to the GPU), a reversal of the typical chip shortage. Some large buyers are hedging by ordering more HBM than immediately needed, effectively stockpiling, which in turn keeps availability tight for the rest of the market.

The HBM crunch also feeds indirectly into cloud pricing for AI services. If a cloud provider pays more for HBM-laden servers, the cost to rent an AI instance (for, say, training an ML model) might increase. We’ve already seen extraordinarily high cloud prices for premium AI GPU instances in 2023–2024, partly a function of limited hardware supply. As memory supply improves (and if costs normalize), some of those pressures could ease, making AI computing a bit more affordable. Until then, HBM remains a significant cost driver in every advanced AI cluster that goes online.

There’s also an innovation incentive: the scarcity and expense of HBM has spurred R&D into alternative memory solutions for AI. Companies like Intel and others have been exploring things like MCDRAM, LPDDR-based modules, or even new “HBM-substitutes” tomshardware.com tomshardware.com to see if a cheaper memory technology could be used for certain AI workloads. Thus far, nothing matches HBM’s blend of high bandwidth and capacity, but if HBM were to remain extremely costly, it’s conceivable that system architects will look for creative ways to optimize memory usage (for example, using faster interconnects to offload some data to standard DRAM or leveraging novel packaging to increase yield).

In summary, for the foreseeable future HBM is a linchpin of AI infrastructure – and its constrained supply is a pain point for every AI hardware buyer. The world’s tech giants are effectively in a race, not just for the best AI chips, but for the memory that makes those chips useful. This dynamic links the fortunes of memory manufacturers directly to the AI boom: as one analyst quipped, “AI is eating the world’s data – and HBM is what’s on the menu.”

Outlook: Relief on the Horizon?

Going into late 2025 and 2026, the big question is whether the HBM supply crunch will ease or if demand will continue to outrun supply. On one hand, massive new capacity is coming online. By some estimates, the top three vendors will collectively double HBM wafer output in 2025 versus 2024 ariat-tech.com, and then expand further in 2026. If global economic conditions remain stable and AI investments continue, much of that new supply will be absorbed – but perhaps not at the frantic sell-out pace we saw in 2023–2024. Already, there are hints that 2026 could flip the script to a more balanced market. Goldman Sachs analysts foresee a scenario of “oversupply” by 2026 leading to the first notable HBM price drop trendforce.com, which would be welcome news for AI hardware buyers.

Memory makers are also wary of overbuilding. They remember the brutal downturns of past DRAM cycles. The current oligopoly (Samsung, SK hynix, Micron) has shown unusual discipline in expanding just enough to meet demand growth. They have also tied up customers in long-term agreements, which should mitigate the risk of a sudden oversupply glut. As SK hynix’s president said, HBM is increasingly sold via deep partnerships and co-design with customers, making demand more predictable and stickier techinvestments.io techinvestments.io. This approach may smooth out the worst volatility.

Other wildcards are new entrants and technologies. Thus far, no new competitor has successfully entered the HBM market – it’s simply too technologically demanding. However, Chinese memory companies (like CXMT) are reportedly accelerating HBM R&D, aiming to produce HBM3-class chips by 2026–2027 trendforce.com. While export controls would limit their international reach, a domestic Chinese HBM source could alter the landscape for that region (reducing dependence on imports from SK hynix and Samsung, and potentially increasing global supply if Chinese vendors eventually overshoot domestic demand). It’s a space to watch, though most analysts think the incumbents will maintain a 3-player hold on the market through the decade techinvestments.io.

On the technology front, future versions like HBM4E, HBM5, etc. will continue the bandwidth race, possibly introducing things like hybrid bonding and more layers (16-Hi stacks) trendforce.com. These will push the envelope – and likely command high price premiums initially. But as they filter down, they could make older HBM more affordable. For example, by 2027 HBM3 might be considered a last-gen product and priced much lower, which could broaden adoption into mid-tier accelerators or even mainstream GPUs.

For now, the industry sentiment as of late August 2025 is cautiously optimistic. HBM is seen not as a short-lived fad, but as a long-term growth segment, essentially becoming a foundational tech for AI, HPC, and high-end graphics. SK hynix projects the HBM market to grow ~30% annually through 2030 tomshardware.com – reaching perhaps $90+ billion by then, which would be a sizable chunk of the overall memory market. If that’s true, today’s crunch might eventually give way to a more mature market where supply and demand equilibrate at high volumes.
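How large a 2030 market ~30% annual growth implies depends heavily on the starting point. The sketch compounds the midpoints of the two base-year revenue ranges quoted in this article; the `project` helper is a plain compound-growth formula, and using midpoints is a simplification.

```python
def project(base: float, rate: float, years: int) -> float:
    """Value after compounding `base` at `rate` per year for `years` years."""
    return base * (1 + rate) ** years


GROWTH = 0.30  # ~30% annual growth, per SK hynix's projection

from_2024 = project(17.5, GROWTH, 2030 - 2024)  # midpoint of ~$17-18B (2024)
from_2025 = project(34.5, GROWTH, 2030 - 2025)  # midpoint of ~$34-35B (2025)

print(f"2030 market from 2024 base: ${from_2024:.0f}B")
print(f"2030 market from 2025 base: ${from_2025:.0f}B")
```

The “$90+ billion” figure falls between these two results, so it is consistent with ~30% growth but sensitive to which year is taken as the base.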

In the immediate term, though, the HBM gold rush is still on. Memory makers are enjoying record sales and racing to deliver on massive order backlogs, while AI hardware designers carefully count on each HBM stack they can get. The next 12–18 months will be crucial: will the new fabs relieve the pressure, or will ever-bigger AI deployments keep straining the HBM supply chain?

Either way, one thing is clear: High-Bandwidth Memory has graduated from a niche technology to a strategic resource in the AI era, and its supply and pricing will remain a barometer of the health and cost of AI innovation moving forward. As an old tech adage goes, “Where data flows, money flows” – and with HBM, both the data and the dollars are indeed flowing at high bandwidth.

Sources: High-bandwidth memory market analyses and forecasts yolegroup.com nextplatform.com; company statements from SK hynix, Samsung, and Micron executives tomshardware.com trendforce.com discussion.fool.com; TrendForce and Yole Group reports on memory revenue and supply trends trendforce.com tomshardware.com; Next Platform and Tom’s Hardware coverage of HBM product launches and roadmaps tomshardware.com tomshardware.com blocksandfiles.com; and earnings call commentary highlighting HBM’s impact on the tech supply chain nextplatform.com blocksandfiles.com. Each citation corresponds to publicly available information that substantiates the figures and claims made.