- IBM unveiled Condor in 2023 as the first quantum processor with 1,121 superconducting qubits, with a roadmap to over 4,000 qubits by 2025.

- Google released the Willow superconducting chip with 105 qubits in 2024, where adding qubits produced an exponential reduction in the error rate and crossed the fault-tolerance threshold for quantum error correction.

- IonQ’s Harmony, Aria, and Forte devices offer 29–36 algorithmic qubits on 20+ physical ions, with Forte reaching 35 effective qubits in 2024 and a 64-physical-qubit target by 2025.

- Quantinuum demonstrated 12 fully error-corrected logical qubits in 2024 in partnership with Microsoft, and by mid-2025 claimed a universal, fully fault-tolerant gate set with repeatable error correction on its H2 ion trap.

- PsiQuantum has raised over $700 million and in January 2025 announced Omega, a silicon-photonic chipset architecture, as part of its plan to scale to a million photonic qubits.

- Xanadu’s Borealis photonic processor, unveiled in 2022, achieved quantum computational advantage on a programmable task by entangling up to 216 modes and completed the computation in 36 microseconds, with cloud access.

- Photonic two-qubit gates in linear optics are probabilistic, often succeeding with about 50% probability and requiring loss-tolerant codes and large overhead to scale.

- Photonic qubits can operate near room temperature, while their detectors typically require cryogenic sensors around 2–4 K.

- As of 2024–2025, most experts view superconducting and trapped-ion platforms as the near-term leaders, with IBM/Google leading in qubit counts and IonQ/Quantinuum in gate fidelity and early error correction.

- Many experts expect no single technology will dominate long term; potential winners include photonics or hybrids, with PsiQuantum advocating a billion-qubit-scale vision regardless of the underlying technology.

Quantum computing is advancing at a breakneck pace, with several rival technologies vying for the crown. In particular, superconducting qubits, trapped-ion qubits, and photonic qubits have emerged as leading platforms. Each uses a very different physical system to create quantum bits (qubits), and each comes with its own strengths, challenges, and big-name supporters. Tech giants like IBM and Google are betting on superconducting circuits, IonQ and Quantinuum champion trapped ions, and innovators like PsiQuantum and Xanadu push photonic approaches biforesight.com. This report compares these three quantum hardware platforms – from how their qubits work and perform, to who’s building them and what’s coming next – and examines which might dominate in the near term and long term.

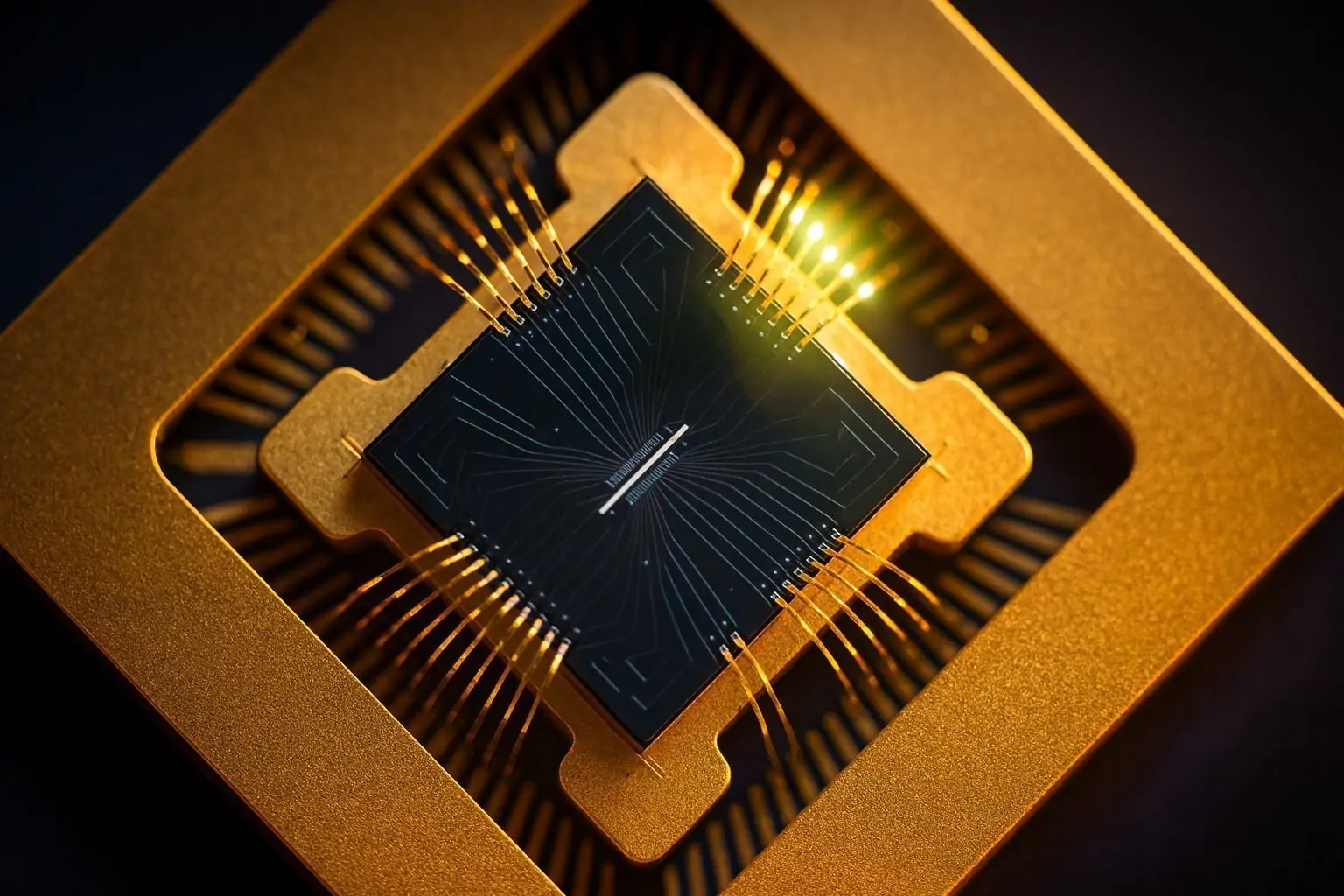

Superconducting Qubits: Fast Circuits in the Deep Freeze

Superconducting quantum computers use tiny electrical circuits cooled to nearly absolute zero. In this platform, qubits are made from superconducting materials (like aluminum or niobium) that carry currents with zero resistance at cryogenic temperatures thequantuminsider.com. Each qubit is essentially a microscopic Josephson junction circuit where quantum information is stored in electromagnetic states (current or charge orientations) controllable by microwave pulses thequantuminsider.com. Because these are electronic devices, they operate extremely fast – gate operations can take only tens of nanoseconds, meaning millions of quantum logic operations per second thequantuminsider.com. This speed is a major advantage of superconducting qubits.

Another big selling point is manufacturability and scalability. Superconducting qubits can be fabricated using well-established silicon chip manufacturing techniques, so in principle one can pack more qubits by leveraging the semiconductor industry’s expertise biforesight.com. “This is critical, because for a quantum computer to be useful, a large number of qubits is essential,” notes Michael Bruce of IQM Quantum, adding that superconducting qubits leverage existing microwave electronics and cryogenics and are “remarkably designable and tunable.” biforesight.com In practice, IBM, Google, Rigetti and others have already built chips with dozens to over a hundred superconducting qubits, and keep scaling up biforesight.com. IBM in 2023 unveiled “Condor,” the first quantum processor with 1,121 superconducting qubits biforesight.com, and their updated roadmap targets a modular “quantum-centric supercomputer” with over 4,000 qubits by 2025 thequantuminsider.com. Google’s latest superconducting chip “Willow” (with 105 qubits) showed in 2024 that adding more qubits can actually reduce errors thanks to improved error correction – achieving an “exponential reduction in the error rate” as the logical qubit size grew biforesight.com biforesight.com. In fact, Willow was the first system to push the error rate below the key threshold where quantum error correction outpaces new errors, a landmark toward fault-tolerant computing biforesight.com. These advances illustrate the rapid progress and sheer qubit counts superconducting platforms are delivering.

However, superconducting qubits face serious challenges. They are high-maintenance bits: superconducting processors must be housed in bulky dilution refrigerators at millikelvin temperatures (far colder than outer space) to keep them coherent thequantuminsider.com. Even with extreme cooling, each qubit only retains its quantum state for very short times (typically microseconds to milliseconds) before noise causes decoherence thequantuminsider.com. In other words, they forget information quickly – though their speed partly compensates for this by letting many operations happen in that brief window biforesight.com. The cryogenic setups are also complex and expensive, and wiring hundreds or thousands of qubits inside a fridge becomes a daunting engineering task thequantuminsider.com thequantuminsider.com. Moreover, superconducting qubits generally interact only with nearest neighbors on a chip, limiting connectivity thequantuminsider.com. This means algorithms often require extra swap operations to move information around, adding to the gate count and error risk.
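The routing cost of nearest-neighbor connectivity can be sketched with a deliberately simplified model. The Python snippet below assumes qubits laid out on a one-dimensional chain (real chips use two-dimensional layouts, so this is an illustration, not a description of any vendor's hardware) and uses the standard decomposition of one SWAP into three CNOT gates. The function names are hypothetical.

```python
def swaps_needed_linear(i: int, j: int) -> int:
    """SWAPs needed to bring qubits i and j next to each other on a 1D chain.

    Assumption: only adjacent positions can interact, and we do not count
    the SWAPs that would move the qubit back afterwards.
    """
    return max(abs(i - j) - 1, 0)


def extra_cnots_linear(i: int, j: int) -> int:
    """Extra CNOT gates from routing, using SWAP = 3 CNOTs."""
    return 3 * swaps_needed_linear(i, j)


if __name__ == "__main__":
    for i, j in [(0, 1), (0, 4), (0, 9)]:
        print(
            f"qubits {i} and {j}: {swaps_needed_linear(i, j)} SWAPs, "
            f"{extra_cnots_linear(i, j)} extra CNOTs"
        )
```

In this toy model, neighbors cost nothing extra, while a gate between the two ends of a ten-qubit chain picks up eight SWAPs (24 CNOTs) before the intended gate even runs. With all-to-all connectivity the routing cost would be zero for every pair.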

Gate fidelities (how error-free operations are) for superconducting qubits have improved dramatically, with the best two-qubit gates now between 99% and 99.9%. In 2024 the Finnish company IQM reported a two-qubit gate fidelity of 99.9%, approaching the threshold for practical error correction biforesight.com. Google and IBM have demonstrated two-qubit error rates around 0.5–1% (99–99.5% fidelity), with lower error rates for single-qubit gates. These fidelities are very good, though keeping errors this low while scaling to thousands of qubits remains a grand challenge. Some optimism comes from recent error-corrected demonstrations: Google showed that each time it enlarged the encoded block of physical qubits (e.g. to a 7×7 grid), the logical error rate roughly halved, indicating the approach can scale biforesight.com biforesight.com. Still, fully fault-tolerant superconducting quantum computers will likely require integrating many chips or novel architectures to reach millions of physical qubits.
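The halving behaviour can be illustrated with a small sketch. It assumes a surface-code layout, where a distance-d patch uses d×d data qubits plus d×d − 1 measurement qubits, and it assumes the logical error rate is divided by a fixed factor of 2 for each step from d to d + 2. The starting error rate and the function names are hypothetical, chosen only to show the trend.

```python
def physical_qubits(distance: int) -> int:
    """Qubits in one distance-d surface-code patch: d*d data + (d*d - 1) measure."""
    return 2 * distance * distance - 1


def logical_error(eps_d3: float, distance: int, suppression: float = 2.0) -> float:
    """Logical error per cycle, divided by `suppression` for each step d -> d + 2.

    eps_d3 is an assumed error rate at distance 3, not a measured value.
    """
    steps = (distance - 3) // 2
    return eps_d3 / suppression ** steps


if __name__ == "__main__":
    assumed_eps_d3 = 8e-3  # hypothetical starting point
    for d in (3, 5, 7, 9, 11):
        print(
            f"d={d:2d}  data grid {d}x{d}  "
            f"physical qubits={physical_qubits(d):3d}  "
            f"logical error ~ {logical_error(assumed_eps_d3, d):.1e}"
        )
```

The qubit cost grows quadratically with the distance while the error falls exponentially, which is why crossing the threshold matters: below it, each extra layer of qubits buys a constant-factor improvement.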

Major players doubling down on superconducting tech include IBM (with its flagship IBM Quantum processors and roadmaps through 2033), Google (Quantum AI’s Sycamore and Willow chips), Rigetti (a startup focused on multi-chip superconducting processors), and several academic efforts and startups worldwide biforesight.com. Notably, Rigetti’s CEO recently claimed that superconducting qubits are “the winning modality for high-performance quantum computers due to their many advantages, including fast gate speeds and well-established manufacturing processes.” thequantuminsider.com This confidence is backed by the tangible milestone of Rigetti’s 84-qubit Ankaa-3 system (with 99%+ two-qubit fidelity) and plans for 100+ qubit devices by 2025 thequantuminsider.com. Superconducting quantum computers have already achieved several “quantum advantage” experiments (performing specific tasks no classical supercomputer can feasibly match), starting with Google’s 53-qubit demonstration in 2019. The momentum suggests that in the near term, superconducting platforms – powered by the resources of big tech and nations – will continue to lead in raw qubit count and algorithmic demonstrations. But other qubit technologies are catching up in quality, even if they have fewer qubits today.

Trapped Ion Qubits: Laser-Precise Atoms with Long Memories

Trapped-ion quantum computers take a very different approach: they use actual atoms as qubits. Using electromagnetic fields, individual ions (charged atoms, often of elements like ytterbium or barium) are suspended in a vacuum trap so they can be manipulated without physical contact. Each ion’s internal energy states serve as the 0 and 1 states of a qubit, while the shared vibrational modes of the ion chain act as a bus that couples the qubits. Lasers perform quantum logic gates by precisely driving atomic transitions and entangling ions via their Coulomb (electric) interaction thequantuminsider.com thequantuminsider.com. In essence, nature provides identical atomic qubits; engineers simply have to isolate and control them with extreme precision.

A key strength of trapped ions is that qubits are nearly perfect copies of each other – every atom of a given isotope is identical, eliminating manufacturing variability. The qubits are also very stable: trapped-ion qubits can retain quantum coherence for orders of magnitude longer than superconducting qubits. Information can persist for seconds or even minutes in an ion’s stable electronic states thequantuminsider.com, compared to microseconds for superconducting circuits. This long coherence time means ions can handle deeper algorithms before errors accumulate. And because all the ions in a trap repel each other, any qubit can in principle interact with any other (through collective motion), giving a fully connected qubit register rather than just nearest-neighbor couplings thequantuminsider.com. This all-to-all connectivity can greatly simplify certain quantum algorithms and error-correction schemes.

Trapped-ion devices have achieved some of the highest gate fidelities of any platform. For example, IonQ (a leading ion-trap company) recently announced 99.9% two-qubit gate fidelity on a next-generation system using barium ions investors.ionq.com investors.ionq.com. Quantinuum (formed from Honeywell’s quantum division) similarly reported 99.9% fidelity on its latest H1 ion processor hpcwire.com hpcwire.com. These three-nines fidelity levels are extremely impressive – higher native accuracy means needing less error correction overhead. “Achieving this level of fidelity is a major milestone … the better the native gate fidelity, the less error correction in all forms is required,” said IonQ’s engineering SVP Dean Kassmann in 2024 investors.ionq.com investors.ionq.com. Such high precision is one reason experts consider trapped ions a top contender for early quantum advantage. In fact, a Microsoft Azure Quantum spokesperson noted in 2025 that “currently, trapped-ion and neutral-atom systems are demonstrating the best performance when used to create logical qubits” for error correction, thanks to their high fidelities and connectivity biforesight.com. This led Microsoft to back ion-trap hardware (via partners like Quantinuum) on its cloud, even as Microsoft also pursues a longer-term topological qubit approach biforesight.com.
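To see why an extra "nine" of fidelity matters, consider a rough estimate of how many two-qubit gates a circuit can contain before it is more likely to fail than succeed. The sketch below assumes every gate fails independently with the same probability and ignores all other error sources, which is a strong simplification; the function names are hypothetical.

```python
import math


def circuit_success(fidelity: float, n_gates: int) -> float:
    """Estimated probability that n_gates gates all succeed (independent errors)."""
    return fidelity ** n_gates


def gates_until(fidelity: float, target: float = 0.5) -> int:
    """Largest gate count whose estimated success probability stays >= target."""
    return math.floor(math.log(target) / math.log(fidelity))


if __name__ == "__main__":
    for f in (0.99, 0.995, 0.999):
        n = gates_until(f)
        print(
            f"fidelity {f:.3f}: about {n} gates before success drops below 50% "
            f"(check: {circuit_success(f, n):.3f})"
        )
```

Under these assumptions, moving from 99% to 99.9% fidelity raises the gate budget roughly tenfold, from dozens of gates to several hundred.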

The trade-off is speed and scalability. Operations on ions are typically thousands of times slower than on superconducting circuits thequantuminsider.com. Two-qubit gate pulses might take tens of microseconds to milliseconds, compared with tens of nanoseconds for superconducting gates, since they rely on physically moving ions or oscillating them with lasers. This limits how many operations can be done per second, meaning algorithms might run slower in wall-clock time. Additionally, today’s trapped-ion setups are relatively bulky and complex: they require ultra-high vacuum chambers, racks of laser optics and control systems, and often careful tuning of many parameters. “Building and scaling up trapped ion systems is technically challenging,” one review noted, citing hurdles in trapping large numbers of ions and the complex laser networks needed to control each qubit thequantuminsider.com. Research prototypes typically manipulate on the order of 10–50 ions in a single trap. Going to hundreds or thousands of ions may require new architectures (like multiple connected traps or shuttling ions between zones), which introduces engineering complexity thequantuminsider.com.
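The trade-off between gate speed and memory can be made concrete by counting how many gates fit inside one coherence time. The figures below are illustrative assumptions picked from within the ranges quoted in this report (they are not measurements of any specific device), and the `Platform` class is hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Platform:
    name: str
    gate_ns: int       # assumed two-qubit gate duration, in nanoseconds
    coherence_ns: int  # assumed coherence time, in nanoseconds

    def gates_per_coherence_window(self) -> int:
        """How many back-to-back gates fit before the qubit decoheres."""
        return self.coherence_ns // self.gate_ns


# Assumed values: 50 ns gates and 100 microseconds of coherence.
SUPERCONDUCTING = Platform("superconducting", gate_ns=50, coherence_ns=100_000)
# Assumed values: 100 microsecond gates and 10 seconds of coherence.
TRAPPED_ION = Platform("trapped ion", gate_ns=100_000, coherence_ns=10_000_000_000)

if __name__ == "__main__":
    for p in (SUPERCONDUCTING, TRAPPED_ION):
        print(f"{p.name}: about {p.gates_per_coherence_window():,} gates per window")
```

With these assumed numbers the ion qubit fits more gates into its coherence window despite being far slower per gate, while the superconducting qubit finishes each gate much sooner in wall-clock time. Different choices within the quoted ranges would shift the comparison considerably.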

Despite these challenges, progress is steady. IonQ’s current devices (Harmony, Aria, Forte) offer 29–36 “algorithmic qubits” of performance (IonQ’s metric accounting for fidelity and connectivity) with all-to-all coupling on 20+ physical ions ionq.com ionq.com. In 2024, IonQ announced its Forte system hit a benchmark of 35 effective qubits a year ahead of schedule, and its next-gen Tempo system (expected ~2025) will introduce rack-mounted, modular ion trap units using barium ions to further increase qubit counts and reduce error rates thequantuminsider.com. IonQ’s roadmap anticipates 64 physical qubits by 2025 and modular scaling to hundreds linked by photonic interconnects thereafter x.com. Quantinuum, for its part, has been regularly upgrading its H-series ion processors (H1 to H2) and in 2024 demonstrated 12 fully error-corrected logical qubits in partnership with Microsoft, a big step toward fault-tolerance thequantuminsider.com thequantuminsider.com. By mid-2025 Quantinuum declared it had achieved the first “universal, fully fault-tolerant quantum gate set with repeatable error correction” – essentially a set of operations on logical (error-corrected) qubits that outperform the error rates of the underlying physical qubits thequantuminsider.com thequantuminsider.com. This breakthrough, using their second-gen H2 ion trap, is touted as “the essential precursor to scalable, industrial-scale quantum computing” and part of Quantinuum’s roadmap toward a fault-tolerant “Apollo” system by 2029 thequantuminsider.com thequantuminsider.com.

Commercial and academic players in the ion-trap arena include IonQ (a U.S. startup born out of University of Maryland/Duke research, and the first pure-play quantum company to go public), Quantinuum (Honeywell’s quantum hardware merged with Cambridge Quantum software), AQT (Alpine Quantum Technologies, an Innsbruck-based company), Oxford Ionics (UK startup using microwave-driven ion traps), and Universal Quantum (UK, developing scaled ion trap modules) biforesight.com. Traditional NIST and university groups also continue to push the science. IonQ’s CEO Peter Chapman argues that as quantum computers tackle more complex problems, trapped ions will shine. “Real-world users are starting to realise that not all technologies are the same… it’s likely we’ll see the most cutting-edge and complex problems run on trapped ion systems like our own,” Chapman said biforesight.com. Indeed, trapped ions already hold records for quantum circuit depth executed and have been used to demonstrate algorithms like quantum chemistry simulations with high accuracy on small molecules. The consensus is that in the near-to-medium term, ion-based processors – though smaller in qubit number – could achieve practical quantum advantage on certain problems thanks to their precision. The big question is whether they can scale to the thousands or millions of qubits needed for broad, fault-tolerant quantum computing. New approaches like photonic links between ion traps, microchip traps, or even replacing laser beams with on-chip electronic control (as Oxford Ionics is doing) aim to solve these scaling bottlenecks. If those succeed, the slow-and-steady trapped ion could remain a formidable competitor well into the future.

Photonic Qubits: Computing with Light Particles

Photonic quantum computing takes a radical departure from matter-based qubits: it uses particles of light (photons) as the information carriers. In these systems, quantum information can be encoded in properties of single photons – such as their polarization, phase, frequency, or in so-called “squeezed” states of light. The biggest appeal of photons is that, unlike electrons or atoms, photons do not easily interact with their environment. Light doesn’t “feel” heat or stray electric/magnetic fields the way matter qubits do biforesight.com. As a result, photonic qubits can retain coherence for long distances and times; a photon will stay in a superposition as it zips through fiber or optical circuits until it is absorbed or measured. In practical terms, this means photonic quantum operations can often be done at or near room temperature, with minimal cooling – a stark contrast to the ultracold requirements of superconducting and even ion systems thequantuminsider.com. (The caveat: while the photons themselves don’t need refrigeration, the detectors that read out their quantum states often do require cryogenic sensors, though typically at a more manageable ~2–4 K instead of millikelvin psiquantum.com.)

Another advantage is that photonic qubits integrate naturally with today’s telecom and fiber-optic infrastructure. They can be sent over optical fibers, enabling the idea of networked quantum computing or distributed quantum networks with minimal signal conversion thequantuminsider.com. This suits tasks like quantum communication and could allow multiple smaller photonic processors to be connected into a larger machine via fiber – something that matter qubits have a much harder time doing across distance. Photons also don’t interact with each other under normal circumstances, which means a quantum state of light can be remarkably stable (no spontaneous cross-talk). But this non-interaction is a double-edged sword: it makes two-qubit logic gates between photons very challenging, because photons won’t naturally influence one another the way two trapped ions or two superconducting currents can.

To create entanglement or logic operations between photonic qubits usually requires inducing an effective interaction using additional photons, special materials, or measurement trickery. One common approach is measurement-based quantum computing, where a large entangled cluster of photons is prepared and then measuring certain photons steers the remaining ones into the desired computations. Companies like PsiQuantum are pursuing this by generating massive entangled states of photons on optical chips and performing fusion gates (interference and detection operations that entangle or disentangle photons) to carry out computation psiquantum.com. The upside is this can be made scalable and fault-tolerant with the right error-correcting codes – PsiQuantum, for example, designs their photonic circuits around error-correcting code states and uses multiplexing to overcome the probabilistic nature of photon sources psiquantum.com psiquantum.com. The downside is that it requires a lot of photons and devices – truly a massive engineering effort with thousands or millions of components. PsiQuantum openly acknowledges needing on the order of a million physical photonic qubits for a useful computer, which they aim to achieve by leveraging modern semiconductor fabrication (their photonic chips are made in conventional silicon foundries) biforesight.com.

Current photonic quantum devices are mostly in the proof-of-concept stage, but they have already notched some impressive milestones. In 2020 and 2021, University of Science and Technology of China researchers demonstrated boson sampling machines (Jiuzhang 1.0 and 2.0) where photonic circuits solved a specialized math problem 10^24 times faster than a classical supercomputer – an enormous quantum speedup, though in a non-programmable task spectrum.ieee.org. In 2022, Canadian startup Xanadu unveiled Borealis, a photonic quantum processor that achieved quantum computational advantage on a programmable task. Borealis performed a complex sampling computation in only 36 microseconds, whereas the world’s most powerful supercomputer would take an estimated 9,000 years to do the same spectrum.ieee.org spectrum.ieee.org. Unlike earlier photonic experiments, Borealis is programmable and even accessible to the public via the cloud spectrum.ieee.org spectrum.ieee.org. It uses squeezed-state photonic qubits (quantum states of many photons) and a series of time-multiplexed interferometers and detectors to entangle up to 216 modes of light spectrum.ieee.org spectrum.ieee.org. This was the first time a startup’s photonic quantum computer exceeded classical capabilities, and Xanadu has made it available for researchers to explore further spectrum.ieee.org. As Xanadu’s lead scientist noted, the “future research is squarely focused on achieving error correction and ultimately fault tolerance”, incorporating the lessons from Borealis into next-generation architectures spectrum.ieee.org.
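For a sense of scale, the Borealis figures quoted above (36 microseconds versus an estimated 9,000 years) can be turned into a single ratio. This is plain unit conversion on the numbers reported here; the helper name is hypothetical, and the classical estimate itself is taken as given.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600  # Julian year


def speedup(classical_years: float, quantum_seconds: float) -> float:
    """Ratio of the estimated classical runtime to the quantum runtime."""
    return classical_years * SECONDS_PER_YEAR / quantum_seconds


ratio = speedup(classical_years=9_000, quantum_seconds=36e-6)

if __name__ == "__main__":
    print(f"estimated speedup: about {ratio:.1e}x")
```

The ratio comes out at roughly 8 × 10^15, and it applies only to this specific sampling task, not to general-purpose computation.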

Yet photonics faces some significant hurdles on the road to general-purpose quantum computing. The foremost challenge is implementing high-fidelity, efficient two-qubit gates (entangling operations) between arbitrary photonic qubits. In linear optical quantum computing (using beam splitters and phase shifters), two photons do not deterministically entangle – at best, an entangling operation might succeed with 50% probability, and failed attempts are lost photons that need to be discarded or heralded by detectors. This probabilistic gating means you need a lot of extra photons and complex switching or multiplexing to perform a computation, and losses in optical components quickly erode fidelity thequantuminsider.com thequantuminsider.com. Even with fancy sources and detectors, scaling to many gate operations without losing most of your photons is difficult. Error correction is particularly challenging for photonic qubits because the primary error is outright loss of the qubit (a photon lost in transit), which requires special loss-tolerant codes and redundancy to overcome thequantuminsider.com. A 2023 study by PsiQuantum scientists, for instance, outlined how to build a photonic architecture that tolerates photon loss, but it still requires significant overhead in device count thequantuminsider.com. In short, the resource requirements for photonic quantum computing are high – millions of components – but the team at PsiQuantum often points out that this is similar to how classical computing needed large transistor counts, and they argue it’s solvable with modern engineering. “We could use existing semiconductor manufacturing processes to make the chips that manipulate the photons… without a profound reinvention of the semiconductor industry,” explains Pete Shadbolt, co-founder of PsiQuantum biforesight.com. He emphasizes that photons’ immunity to heat and ability to leverage telecom tech give photonics an intrinsic scaling edge once the initial hurdles are overcome biforesight.com biforesight.com.
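The overhead created by probabilistic gates can be sketched with basic probability. The snippet below assumes each entangling attempt succeeds independently with probability 0.5 and that multiplexing simply means running several attempts in parallel and keeping one success. It ignores photon loss and switching losses, so real overheads would be higher. All function names are hypothetical.

```python
import math


def all_succeed(p: float, n_gates: int) -> float:
    """Chance that n_gates probabilistic gates all succeed with no retries."""
    return p ** n_gates


def at_least_one(p: float, attempts: int) -> float:
    """Chance that at least one of several parallel attempts succeeds."""
    return 1.0 - (1.0 - p) ** attempts


def attempts_for(p: float, target: float) -> int:
    """Smallest number of parallel attempts with success probability >= target."""
    return math.ceil(math.log(1.0 - target) / math.log(1.0 - p))


if __name__ == "__main__":
    p = 0.5
    print(f"10 gates in a row, no retries: {all_succeed(p, 10):.4%}")
    n = attempts_for(p, 0.999)
    print(f"attempts per gate for 99.9% success: {n} ({at_least_one(p, n):.4%})")
```

Without multiplexing, a chain of ten such gates succeeds less than 0.1% of the time. Reaching 99.9% per gate takes about ten parallel attempts in this idealized model, which hints at why component counts climb into the millions.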

The leading players in photonic quantum computing include PsiQuantum (US/UK, building a silicon-photonic million-qubit machine in partnership with GlobalFoundries), Xanadu (Canada, developer of Borealis and specialized photonic chips for both discrete and continuous-variable quantum computing), ORCA Computing (UK, focusing on quantum memory and small-scale photonic processors that interface with standard fiber networks), and QuiX Quantum (Netherlands, building photonic quantum chips and simulators) biforesight.com. Notably, big tech has begun investing here as well – in 2024, Microsoft invested in a photonics startup (Optiq/Photonic Inc.) working on light-based qubits for networking biforesight.com biforesight.com. PsiQuantum, led by professors from Stanford and Bristol, has raised enormous funding (over $700 million) to pursue its vision of a fault-tolerant photonic quantum computer in the latter half of this decade thequantuminsider.com. They plan to break ground in 2025 on quantum computing centers in the US and UK to host their first utility-scale, million-qubit photonic systems psiquantum.com. In January 2025, PsiQuantum announced a new photonic quantum chip architecture called “Omega” – a manufacturable chipset intended for their modular quantum computer design, signaling that hardware is coming together hpcwire.com. All told, photonic quantum tech is a few steps behind in the race – no photonic system has as many qubits or as general capabilities as the leading superconducting/ion systems yet. But the field is moving fast. As Hermann Hauser, a veteran tech investor, observed, “although the superconducting qubits and trapped ions have the lead right now, I believe that photonic… technology might well be [one of] the long-term winners.” biforesight.com The rationale is that once photonic machines achieve a certain scale, their ability to network easily and operate at higher temperatures could make them more practical to deploy broadly.

The Quantum Race: Who Leads Now and Who Wins Later?

All three platforms – superconducting, trapped ion, and photonic – are pushing toward the same goal: a large-scale, fault-tolerant quantum computer that can solve problems intractable for classical machines. In the near term (2020s), it appears superconducting and trapped-ion systems are leading the pack. They have the most qubits online and have demonstrated the most sophisticated quantum operations so far biforesight.com. Superconducting qubit devices by IBM and Google have achieved high circuit volumes and “quantum supremacy” experiments, and they’re rapidly scaling qubit counts into the hundreds. Trapped-ion machines by IonQ and Quantinuum boast the highest fidelities and have executed the first small error-corrected algorithms, albeit on tens of qubits. “I think that you currently have superconducting qubits and trapped ions out in front,” Hermann Hauser said in 2024 when asked which technology will triumph biforesight.com. Most experts agree no single approach will deliver a knockout blow in the next few years; rather, we are likely to see different quantum modalities excel at different tasks. “It will not be a zero-sum game in the near future,” explains Justin Ging, CPO at Atom Computing. “There is currently a race for quantum computers to be able to perform computations that provide economic value… However, until universal fault-tolerant quantum computing resources are widely available, different modalities can provide particular advantages for specific applications.” biforesight.com In other words, superconducting qubits might execute certain algorithms fastest, ions might solve others with more accuracy, and photonic systems might find niche use in networking or specialized computations – all before the era of full fault tolerance arrives.

Looking further ahead, opinions diverge on which technology (if any) will dominate the long term. Superconducting qubits have a head start and benefit from decades of microelectronics know-how, but skeptics point to the cooling complexity and short coherence as potential roadblocks at million-qubit scale. Trapped ions offer superb quality per qubit, yet might face limits in speed and practicality if we try to scale to thousands of ions in a single machine. Photonic qubits are the wild card – behind now, but potentially easier to manufacture en masse and link together. Hermann Hauser and others suspect that photonic (and also silicon spin qubit) systems “might well be the long-term winners – or maybe a combination of the two.” biforesight.com The long game could even be hybrid: for example, using photonics to interconnect many cryogenic superconducting modules, or using ions for memory and photons for communication. It’s also possible that new contenders like neutral atoms or topological qubits steal the show if they mature (neutral atom arrays, for instance, combine some advantages of ions and photonics, such as long coherence and easier scaling, and are making rapid progress in qubit count biforesight.com biforesight.com).

As of 2024–2025, we are in a stage some call the “quantum utility” or “early advantage” era – quantum computers are just on the cusp of doing useful things for industry, and everyone is racing to hit that milestone first biforesight.com. Notably, in January 2025 NVIDIA’s CEO Jensen Huang opined that a “very useful quantum computer” might still be 20 years away, casting doubt on short-term hype biforesight.com. His remarks prompted a reality check (and a stock dip for quantum startups), but many in the field protested that they underestimated ongoing progress biforesight.com. Indeed, nearly every major player has a roadmap aiming for meaningful quantum advantage or fault-tolerance much sooner: Google targets an error-corrected quantum computer by 2029 thequantuminsider.com, IBM is aiming for substantial quantum speedups by 2026 and beyond with its 1000+ qubit systems and improved error mitigation, IonQ expects to demonstrate broad quantum advantage by 2025 thequantuminsider.com, and Quantinuum is eyeing 2027–2030 for its fully fault-tolerant device thequantuminsider.com thequantuminsider.com. With billions of dollars in investment pouring in and rapid advances coming yearly, it’s plausible that within this decade we’ll see quantum computers (of one technology or another) solve valuable problems in chemistry, optimization, or machine learning that classical computers cannot.

So which platform will win? At this point, betting on a single winner is risky – each approach has strong momentum and unique advantages. Superconducting quantum computers are likely to lead in the near-term commercial deployments due to their head start and support from computing giants. They might be the first to integrate into datacenters for certain cloud quantum services. Trapped-ion systems, with their accuracy, could lead in achieving precisely solved small-scale problems (e.g. in quantum chemistry or materials science) and in pioneering error-corrected logical qubits. They may dominate certain niches where quality trumps quantity of qubits. Photonic quantum computers might come from behind to overtake others in the long run, especially if and when fault-tolerant scaling is paramount – their ability to leverage optical communications and semiconductor fab scalability could allow a leap to extremely large, networked quantum processors biforesight.com. In fact, PsiQuantum’s CEO Jeremy O’Brien often emphasizes looking at the endgame: “Once we have a billion-qubit quantum computer, no one will care whether it was photons or ions under the hood – only what it can do for us.” biforesight.com The race right now is exciting precisely because we don’t yet know which path (or paths) will get us there first. What’s clear is that quantum computing is no longer science fiction – multiple prototypes are improving every year – and the competition between superconducting, ion, and photonic qubits is driving the field toward the first useful quantum machines, possibly within this decade. In the end, the winner might not be one technology beating all others, but all of us winning a new era of computing power.

Sources: Quantum hardware platform comparisons and expert commentary from Foresight (Bristol Univ.) biforesight.com biforesight.com biforesight.com and The Quantum Insider thequantuminsider.com thequantuminsider.com; industry roadmaps and milestones from IBM, Google, IonQ, Quantinuum, etc. biforesight.com thequantuminsider.com thequantuminsider.com; and news reports on recent breakthroughs in superconducting biforesight.com, trapped-ion thequantuminsider.com, and photonic quantum computing spectrum.ieee.org spectrum.ieee.org.