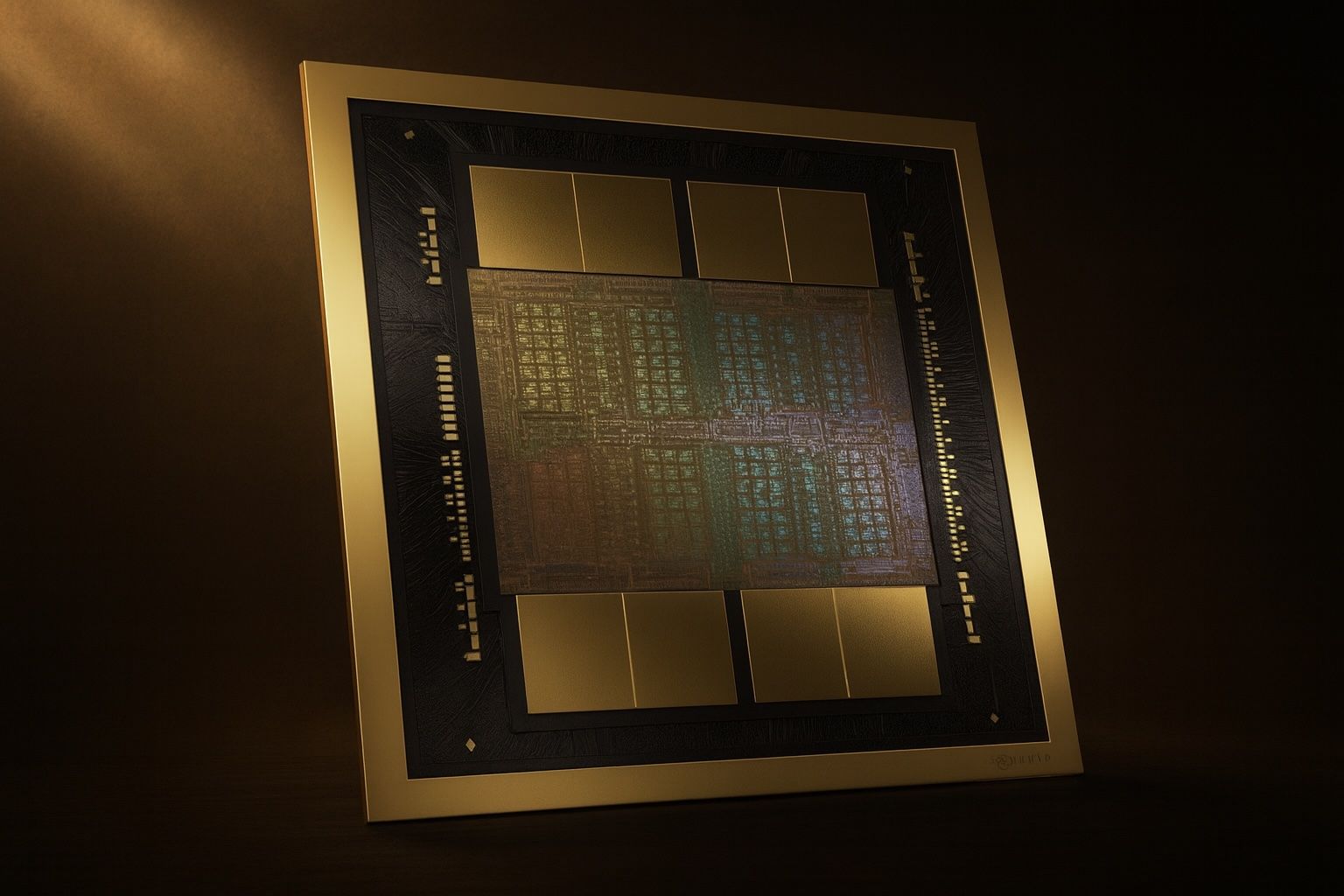

Nvidia Stock (NVDA) Today, December 3, 2025: AI Chip Giant Balances OpenAI Deal Uncertainty, Google TPU Threat and $2 Billion Synopsys Bet

Nvidia Corporation (NASDAQ: NVDA) remains the central character in the AI boom — and on December 3, 2025, its stock is trading around the $181 level after a volatile few weeks. The company sits near a $4.4 trillion market capitalization,