- NVIDIA’s Blackwell B200 features 180 GB of HBM3e memory per GPU with up to 8 TB/s bandwidth, 18 PFLOPS FP4 tensor throughput, 9 PFLOPS FP8, and 4.5 PFLOPS FP16, plus a second-generation Transformer Engine.

- NVIDIA claims DGX B200 delivers about 3× the training performance and 15× the inference performance of DGX H100 in end-to-end workflows.

- Google’s TPU v6e, codenamed Trillium, delivers 918 TFLOPS BF16 per chip, 1.836 PFLOPS INT8, 32 GB of HBM per chip, and 1.6 TB/s bandwidth per chip, with a 256-chip pod delivering about 234.9 PFLOPS BF16.

- AMD Instinct MI350X/MI355X offer 288 GB of HBM3e, up to 8 TB/s bandwidth, and FP4/FP6 support, with the MI355X drawing up to 1.4 kW and capable of about 10 PFLOPS FP4/FP6 per card or 20 PFLOPS with sparsity.

- AMD asserts the MI350 series delivers up to 4× higher AI compute and up to 35× higher AI inference than MI300, with early tests suggesting MI350X can outperform NVIDIA on some workloads.

- Memory leadership varies: MI355X ships with 288 GB, NVIDIA B200 with 180 GB, and TPU v6e offers 32 GB per chip (256 GB per host and 8 TB per TPU pod).

- Software ecosystems span NVIDIA’s CUDA/TensorRT/Transformer Engine with MIG, AMD ROCm 6+ and HIP, and Google Cloud’s TensorFlow/JAX with XLA for TPUs.

- On availability and pricing: DGX B200 entered production in 2024, with per-GPU list prices around $30–40k and a full DGX B200 around $515k; MI350X ships in 2025 at roughly $25k per GPU; and TPU v6e has been available on Google Cloud since late 2024, with no publicly listed pricing.

- TPU v6e pods scale to 256 chips per pod in a 2D torus with four ICI links per chip (3,200 Gbps per chip), while NVIDIA’s GPUs scale via NVLink Switch networks and DGX configurations.

- Looking ahead, NVIDIA plans a post-Blackwell generation for 2026–2027 with potential AI-specialized variants, AMD is targeting MI400/MI450X around 2026–2027 with larger memory and possible FPGA integration, and Google may advance toward TPU v7 and continued cloud-scale efficiency.

The race for AI accelerator supremacy has reached a fever pitch in 2024–2025. NVIDIA, AMD, and Google have each unveiled cutting-edge hardware targeting the exploding demands of generative AI, large language models (LLMs), and high-performance computing (HPC). NVIDIA’s new Blackwell B200 GPU, AMD’s Instinct MI350 series, and Google’s TPU v6e “Trillium” are all touted as game-changing chips, each pushing the envelope in architecture, performance, and efficiency. This report provides an in-depth comparison of these three accelerators – covering their architectures, raw performance (FLOPS, memory bandwidth, etc.), power efficiency, software ecosystems, real-world benchmarks, deployment scenarios, and more. We also highlight expert commentary and recent developments, and peek at the next-generation roadmaps each company is pursuing.

In this showdown of AI titans, NVIDIA’s Blackwell GPU promises 3× training and 15× inference performance gains over its predecessor nvidia.com, AMD’s MI350 (CDNA4 architecture) boasts up to 4× higher AI compute and 35× faster AI inference than its prior generation tomshardware.com smbom.com, and Google’s TPU v6e delivers a 4.7× jump in peak compute along with doubled memory and interconnect bandwidth over its last-gen TPUs cloud.google.com cloud.google.com. Below, we dissect each solution and compare how they stack up in the competitive landscape of AI acceleration.

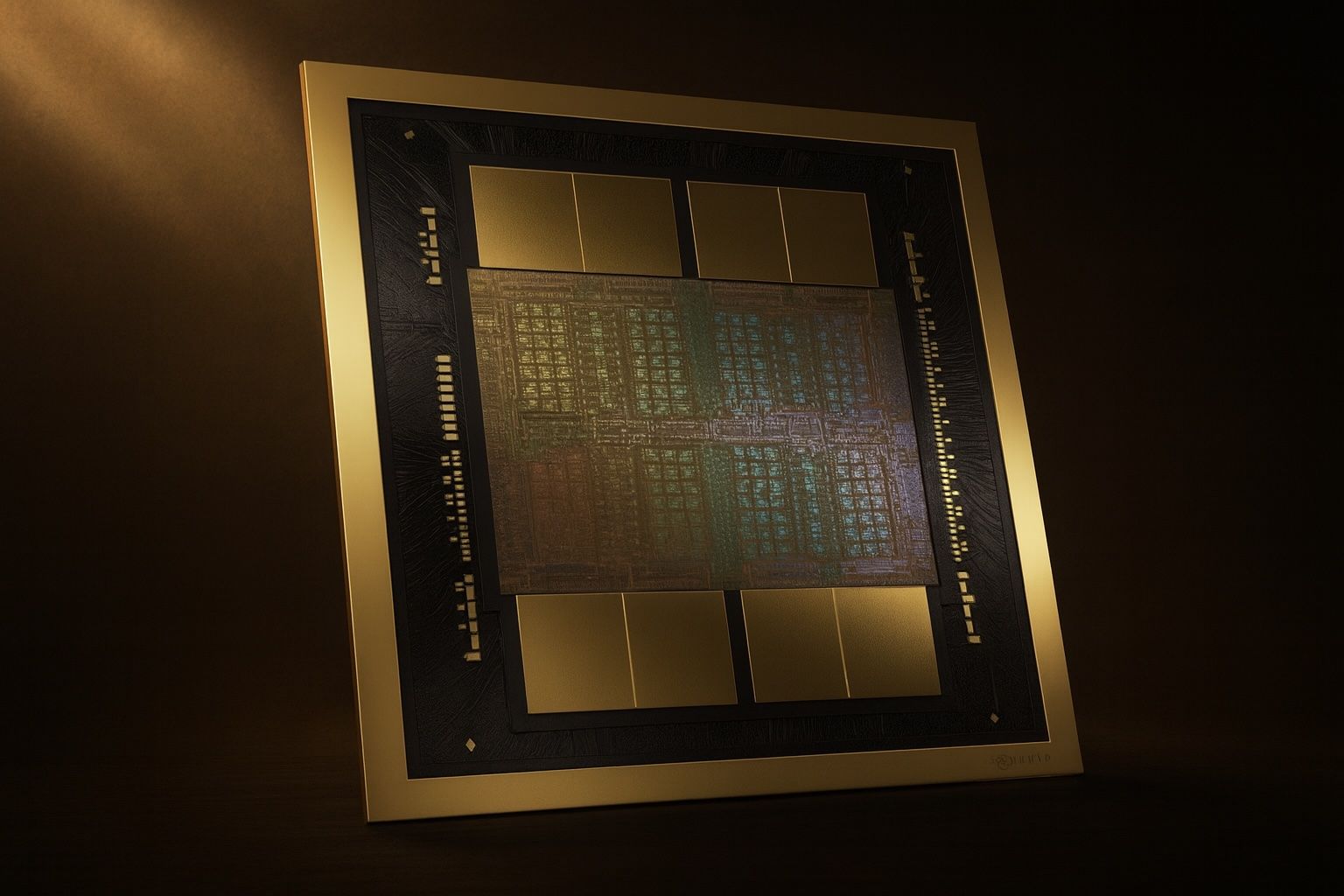

NVIDIA Blackwell B200 – Architecture and Performance

Architectural Innovations: NVIDIA’s Blackwell B200 is the flagship of NVIDIA’s latest GPU architecture, succeeding the Hopper H100. Blackwell introduces a multi-chip module (chiplet) design, significantly higher memory capacity, new low-precision math formats, and faster interconnects. A single B200 GPU comes with 180 GB of HBM3e memory (versus 80 GB on the H100), delivering up to 8 TB/s memory bandwidth exxactcorp.com exxactcorp.com. This capacity enables training larger models or using bigger batch sizes, a key advantage that contributed to ~57% faster model training in some vision workloads versus H100 GPUs lightly.ai lightly.ai. Blackwell also doubles down on tensor core throughput for AI math: it supports new FP4 and FP6 precisions (on top of FP8/FP16/TF32), using a second-generation Transformer Engine to dynamically exploit lower precision for speedups developer.nvidia.com developer.nvidia.com. By sacrificing some FP64 throughput (critical for traditional HPC) in favor of massively boosting tensor core performance at lower precisions, the B200 achieves over 2× higher TF32/FP16/INT8 throughput than Hopper exxactcorp.com exxactcorp.com. In fact, the B200 can perform 18 PFLOPS of FP4 tensor operations (peak) – a new frontier enabled by its FP4 cores exxactcorp.com. The chip is fabricated on a custom TSMC 4NP process and packs 208 billion transistors across its two dies, well above H100’s 80 billion. Cooling and power delivery are major considerations – data center B200 cards come in SXM form factor modules and are estimated to draw on the order of 1.5 kW each under load (an estimate derived from an 8× B200 DGX system consuming ~14.3 kW, a figure that also covers CPUs, networking, and cooling) nvidia.com.

Performance and Benchmarks: In terms of raw performance, the Blackwell B200 is a monster. It delivers roughly 4.5 PFLOPS of FP16/BF16 and 9 PFLOPS of FP8 tensor compute, more than double what the previous-gen offered exxactcorp.com. For inference, its INT8 throughput is about 9 PetaOps (vs ~4 PetaOps on H100) exxactcorp.com, and the new FP4 capability doubles throughput again for certain Transformer-based models developer.nvidia.com. Real-world benchmark results are backing up these specs. In the latest MLPerf tests, systems with Blackwell GPUs shattered records. An eight-GPU DGX B200 server achieved 3.1× higher throughput on a Llama-2 70B chatbot inference benchmark compared to an eight-GPU H200 (Hopper-refresh) system developer.nvidia.com. In a massive Llama 3.1 (405B parameter model) inference scenario, NVIDIA’s Grace+Blackwell GB200 NVL72 rack-scale system (combining 72 Blackwell GPUs with 36 Grace CPUs in one NVLink domain) delivered up to 3.4× the per-GPU performance of a Hopper-based system developer.nvidia.com developer.nvidia.com. At the system level, that GB200 configuration achieved an astounding 30× overall throughput increase on the 405B model test, thanks to more GPUs and the NVLink Switch network connecting them developer.nvidia.com. Even on smaller-scale tests, early access users report solid gains: Lightly.ai benchmarked B200 vs. H100 on real workloads and found the B200 about 57% faster in GPU-heavy training tasks (e.g. YOLOv8 computer vision training) and ~10% faster in mid-sized LLM inference tasks lightly.ai lightly.ai. Notably, Blackwell’s large memory (180 GB) lets it run larger batch sizes to improve training throughput, and because the hardware is new, inference software is still being optimized – the ~10% LLM speedup is expected to widen as CUDA libraries mature lightly.ai. 
NVIDIA itself claims DGX B200 delivers 3× the training performance and 15× the inference performance of DGX H100 systems in end-to-end workflows nvidia.com, highlighting Blackwell’s especial strength in inference (likely due to FP4 acceleration and other optimizations for transformer models). For HPC-style double-precision (FP64) performance, Blackwell holds steady around 30–40 TFLOPS FP64 (similar to H100) exxactcorp.com on vector operations, though FP64 tensor core throughput is reduced relative to Hopper exxactcorp.com – indicating NVIDIA’s strategic emphasis on AI workloads over classical HPC in this generation.
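
One way to read the peak figures above is to relate them to memory bandwidth. The sketch below is a simple roofline-style calculation, not something NVIDIA publishes in this form: it assumes the quoted peaks (4.5/9/18 PFLOPS at FP16/FP8/FP4) and the 8 TB/s HBM figure, and the function names are hypothetical. It estimates how many floating-point operations a kernel must perform per byte moved before the tensor cores, rather than memory, become the limit.

```python
# Peak tensor throughput quoted in the text for one B200 (FLOP/s).
PEAK_FLOPS = {"FP16": 4.5e15, "FP8": 9e15, "FP4": 18e15}
HBM_BANDWIDTH = 8e12  # bytes/s, the quoted 8 TB/s


def ridge_point(peak_flops: float, bandwidth: float) -> float:
    """Arithmetic intensity (FLOP per byte) at which compute becomes the limit."""
    return peak_flops / bandwidth


def attainable_flops(intensity: float, peak_flops: float, bandwidth: float) -> float:
    """Roofline bound: the lower of the compute roof and the memory roof."""
    return min(peak_flops, intensity * bandwidth)


for precision, peak in PEAK_FLOPS.items():
    ridge = ridge_point(peak, HBM_BANDWIDTH)
    print(f"{precision}: compute-bound above ~{ridge:.0f} FLOP/byte")
```

Under these assumptions, each halving of precision doubles the intensity a kernel needs to stay compute-bound, which is one reason low-batch inference often sees far less than the peak-to-peak ratio between generations.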

Power Efficiency: Despite its higher raw performance, the Blackwell B200 aims to be much more energy-efficient for AI tasks. NVIDIA cites a 15× improvement in inference performance per system (DGX B200 vs DGX H100) at a broadly comparable power envelope nvidia.com. Architectural efficiencies (like optimized sparsity support and the Transformer Engine) contribute to far more performance per watt on AI workloads. Blackwell GPUs also leverage 5th-generation NVLink interconnects (1.8 TB/s of total bidirectional bandwidth per GPU, i.e. 900 GB/s in each direction) exxactcorp.com, reducing data-movement bottlenecks and CPU overhead. This, combined with better power management, yields sizable energy savings for equivalent tasks. For example, one analysis showed Blackwell delivering a 25× improvement in energy efficiency in certain AI inference tasks versus its predecessor adrianco.medium.com. That said, absolute power draw is high; the B200’s appetite (an estimated ~1.4–1.5 kW per GPU in heavy workloads) requires advanced cooling. NVIDIA offers air-cooled and liquid-cooled variants for different deployment needs. The performance-per-TCO angle is crucial – even if each B200 GPU is expensive and power-hungry, the throughput it provides may lower the total number of servers needed. NVIDIA emphasizes that packing more performance into each rack (via powerful GPUs like B200) ultimately lowers cost and power per unit of work, especially for large-scale deployments tomshardware.com tomshardware.com. This aligns with what enterprise users seek: more AI compute density to maximize data center efficiency.

Software and Ecosystem: NVIDIA’s greatest advantage remains its software stack. The Blackwell B200 is fully supported by the CUDA and NVIDIA AI software ecosystem from day one. Developers can leverage NVIDIA’s optimized libraries (cuDNN, TensorRT, CUDA-X APIs, etc.) and frameworks like TensorRT-LLM for accelerated LLM inference developer.nvidia.com. In fact, Blackwell’s FP4 capability is exposed through TensorRT and new quantization tools, allowing models to run at FP4 precision with minimal accuracy loss – a key factor in those huge inference gains developer.nvidia.com. Multi-GPU training at scale is enabled by the NVLink Switch fabric (NVSwitch) which connects all GPUs in a server or even across servers (in the GB200 pod) as one high-speed cluster. Features like Multi-Instance GPU (MIG) are supported as well, letting a B200 be partitioned into up to 7 isolated GPU instances (each with ~23 GB) for serving multiple jobs exxactcorp.com. From a deployment standpoint, Blackwell GPUs are available in NVIDIA’s own DGX B200 systems and HGX boards for OEM server builders. Major cloud providers are also rolling out Blackwell-based instances – NVIDIA noted broad availability from leading cloud vendors in 2024 developer.nvidia.com. This means enterprises can access B200 performance either on-premises or in the cloud with relative ease. NVIDIA’s CEO Jensen Huang often describes their platform approach as providing “the full-stack, from silicon to software” to accelerate AI. Blackwell is a prime example – it’s not just a chip, but part of a unified AI platform with software and services (like NVIDIA AI Enterprise, NeMo frameworks for LLMs, and DGX Cloud integrations) nvidia.com nvidia.com. This robust ecosystem gives NVIDIA a strong competitive positioning, as developers can readily transfer models and skills from previous GPUs (like H100) to the new Blackwell with minimal friction.
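
To make the FP4 idea concrete, here is a minimal sketch of block-scaled 4-bit float quantization in plain Python. It is not TensorRT's or the Transformer Engine's implementation; it only assumes the common E2M1 layout (1 sign, 2 exponent, 1 mantissa bit), whose representable magnitudes are 0, 0.5, 1, 1.5, 2, 3, 4, and 6, and a single scale factor per block of weights. All function names are hypothetical.

```python
# Magnitudes representable by a 4-bit E2M1 float (assumed format for this sketch).
FP4_MAGNITUDES = (0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0)


def quantize_block_fp4(values):
    """Return (scale, codes): codes lie on the signed FP4 grid, scale maps them back."""
    max_abs = max((abs(v) for v in values), default=0.0)
    # Map the largest magnitude in the block onto the largest FP4 value.
    scale = max_abs / FP4_MAGNITUDES[-1] if max_abs > 0 else 1.0
    codes = []
    for v in values:
        target = abs(v) / scale
        nearest = min(FP4_MAGNITUDES, key=lambda m: abs(m - target))
        codes.append(nearest if v >= 0 else -nearest)
    return scale, codes


def dequantize_block(scale, codes):
    """Reconstruct approximate values from the block scale and FP4 codes."""
    return [scale * c for c in codes]


weights = [0.12, -0.03, 0.48, -0.6, 0.22, 0.0, -0.31, 0.07]
scale, codes = quantize_block_fp4(weights)
restored = dequantize_block(scale, codes)
max_err = max(abs(w - r) for w, r in zip(weights, restored))
print(f"scale={scale:.3f} codes={codes} max_abs_error={max_err:.3f}")
```

The grid is coarse near the top of the range (nothing between 4 and 6), so the per-block scale does most of the work in preserving accuracy; production quantizers typically add calibration and finer-grained scaling on top of this basic scheme.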

AMD Instinct MI350 (CDNA 4) – Architecture and Performance

Architectural Innovations: AMD’s Instinct MI350 series represents AMD’s answer to Blackwell – a family of data center GPUs built on the new CDNA 4 architecture and advanced chiplet/3D stacking technology. Unveiled by CEO Dr. Lisa Su at Computex 2024, the MI350 chips are manufactured on TSMC’s 3nm-class process (N3P) and pack an estimated ~185 billion transistors in a multi-die design smbom.com. Like its predecessor (MI300 series), the MI350 uses 3D integration to combine compute dies and stacked HBM memory. The flagship MI355X and its air-cooled sibling MI350X come with a whopping 288 GB of HBM3e memory on-board, with total bandwidth up to 8 TB/s tomshardware.com tomshardware.com. This is 1.6× the memory capacity of NVIDIA’s B200 (180 GB), a fact AMD highlights – the MI355X carries 60% more HBM capacity than NVIDIA’s B200/GB200 GPUs (288 GB vs ~180 GB) tomshardware.com. Compared with the MI300 series, the memory subsystem is not only larger but faster, thanks to HBM3e. The MI350 series also introduces support for new 4-bit and 6-bit floating-point formats (FP4 and FP6) in hardware, similar to NVIDIA’s approach, to accelerate AI inference. AMD’s engineers doubled down on matrix math capabilities: the CDNA 4 architecture includes Matrix Cores optimized for lower precision. Notably, AMD opted to reduce FP64 throughput on MI350 relative to the MI300X, reallocating silicon to AI-focused units – FP64 matrix performance is roughly halved, though FP64 vector ops dropped only ~4% gen-on-gen tomshardware.com tomshardware.com. This indicates a strategic pivot toward AI workloads, echoing NVIDIA’s focus. The MI350X GPU die is purely compute (unlike MI300A which had CPU+GPU), as AMD has dropped the APU approach this generation to focus on GPU-only designs for maximum scalability tomshardware.com. 
Each MI350X package is OAM form factor and designed for dense data center integration, with an option for extreme liquid cooling on the high-end MI355X (which has a Total Board Power up to 1,400 W for peak performance) tomshardware.com tomshardware.com. The air-cooled MI350X runs at a lower power envelope (~1,000 W). Both variants share the same core design and are pin-compatible with existing MI300-series “Universal Base Board” systems, easing upgrades.

Performance and Benchmarks: AMD claims the Instinct MI350 series will be a generational leap, especially for AI inference. According to AMD’s figures, the MI350 series delivers 4× higher AI compute performance and up to 35× higher AI inference throughput compared to the prior MI300 (CDNA 3) generation tomshardware.com tomshardware.com. In AMD’s internal benchmarks, a single MI350X is said to outperform NVIDIA’s current flagship in key metrics. For example, at AMD’s “Advancing AI 2025” event, the company asserted that MI350-class GPUs achieve up to 1.3× the inference performance of NVIDIA’s offerings in like-for-like tests, and even lead by ~13% in select training workloads tomshardware.com tomshardware.com. Some specific comparisons have emerged via industry reports: an HSBC analysis cited by the media noted that MI355X outpaces NVIDIA’s B200 in certain large-model inference tasks – approximately 20% faster on a DeepSeek R1 FP4 workload, and 30% faster on a Llama 3.1-405B LLM test (matching the performance of NVIDIA’s massive GB200 system) smbom.com smbom.com. These are bold claims that suggest AMD is competitive at the bleeding edge of LLM inference. Raw specs from Tom’s Hardware help explain this: a single MI355X can reach 10 PFLOPS of matrix compute in FP4/FP6 (or 20 PFLOPS with sparsity enabled), while the MI350X tops out around ~5 PFLOPS FP16 and ~10 PFLOPS FP8 tomshardware.com tomshardware.com. Comparing against NVIDIA, AMD states that MI355X offers roughly 2× the peak FP64/FP32 throughput of Blackwell (since NVIDIA dialed those down) tomshardware.com. At lower precision like FP16/FP8/FP4, AMD’s per-GPU throughput is similar to Blackwell’s – the MI350X appears to “match or slightly exceed the Nvidia comparables” in most cases tomshardware.com tomshardware.com. One standout is FP6 format: AMD’s architecture can run FP6 at the same rate as FP4, which they tout as a differentiator for certain models tomshardware.com. 
In terms of memory-bound workloads, MI355X’s huge HBM3e pool may shine: AMD says its 288 GB accelerator can generate 40% more LLM tokens per dollar than NVIDIA’s B200, partly due to handling larger context windows or batch sizes without overflow smbom.com smbom.com. We will need to see independent MLPerf results for full confirmation – at the time of writing, MI350 hardware is just ramping up – but early signs indicate AMD is very much in the race for AI training and inference at scale. Notably, AMD’s Instinct line already anchors HPC systems such as the El Capitan exascale supercomputer (built on the previous-generation MI300A), so MI350’s FP64 and mixed-precision performance will also be scrutinized in scientific applications. (AMD has traditionally led in FP64 GPU performance; e.g., the prior MI250X offered ~47.9 TFLOPS FP64, far above NVIDIA A100’s 9.7 TFLOPS, and MI300 series continued that trend). With MI350, AMD is balancing that HPC strength with massive AI performance gains.

Power Efficiency: Thanks to the advanced 3nm process and architectural improvements, the MI350 series is expected to be extremely energy-efficient for AI throughput – albeit at high absolute power levels. AMD quotes a 35× inference performance improvement vs. last-gen, which comes partly from using INT4/FP4 data formats that simply do more ops per joule amd.com amd.com. The MI350X delivers far more performance per card than its predecessors, which should translate to better rack-level efficiency. However, the flagship MI355X’s 1.4 kW power draw is nearly double that of the MI300X (750 W) tomshardware.com. AMD’s argument is that this higher power envelope pays for itself in density: one MI355X yields so much performance that fewer GPUs are needed overall, ultimately improving performance per TCO (total cost of ownership) at the data center level tomshardware.com tomshardware.com. In other words, you might deploy one rack of MI355X nodes instead of two racks of a less powerful accelerator, saving on cooling, networking, and facility costs for the same work done. AMD also touts the efficiency gains of its CDNA4 architecture: moving to 3nm, using advanced packaging, and the introduction of new instructions for AI have boosted perf/W significantly. For instance, MI350 adopts newer low-precision formats (FP4/FP6) that slash memory bandwidth and storage needs for a given model, directly cutting down power per inference. Cooling solutions (liquid for MI355X) ensure the GPUs can sustain high clocks without throttling. All this suggests that AMD designed MI350 not just to compete on raw speed, but on throughput per dollar and per watt. Executive commentary reinforces this: “We are relentless in our pace of innovation, providing the leadership capabilities and performance the AI industry expects, to drive the next evolution of data center AI training and inference,” said Brad McCredie, AMD’s VP of Accelerated Compute, emphasizing the focus on value and performance together.

Software Ecosystem and Use Cases: Historically, software has been AMD’s uphill battle in the AI space, but the company has made significant strides with its ROCm open software stack. By the time MI350 launches broadly (expected in 2025), AMD’s ROCm 6+ platform and libraries will be quite mature, with support for major frameworks (PyTorch, TensorFlow, JAX) and model libraries. In fact, AMD has partnered with companies like Hugging Face to proactively test hundreds of thousands of popular AI models on MI300-series hardware, ensuring they run out-of-the-box on AMD GPUs amd.com amd.com. That kind of software enablement is crucial for customer confidence. AMD also integrated support for popular transformer optimizations – e.g., software for exploiting FP8/FP4, and tools to ease porting CUDA code to HIP (AMD’s parallel programming API). While NVIDIA still leads in software polish, AMD is positioning MI350 as a viable alternative for cloud providers and large enterprises that want to diversify their AI hardware. Notably, Microsoft Azure, Meta (Facebook), and major OEMs (Dell, HPE, Lenovo) have already been working with MI300X accelerators tomshardware.com tomshardware.com, and they are likely candidates to adopt MI350 in 2025+. For instance, Azure is offering AI instances with MI300A/X for OpenAI services, and Meta has validated MI300 for internal workloads tomshardware.com. That momentum is expected to carry into the CDNA4 generation. AMD is also exploring partitioning and multi-GPU scaling akin to NVIDIA’s NVSwitch – the MI350 cards use the same UBB form factor which supports AMD’s Infinity Fabric links between GPUs. Although details are sparse, leaks suggest AMD may allow large configurations (e.g., Instinct MI450X with IF128 fabric connecting many GPUs in 2026) tomshardware.com tomshardware.com. For now, MI350X can be used in 2P or 4P GPU configurations with high-speed links. 
The primary use cases for MI350 will span both AI and HPC: training giant LLMs, deploying inference for models like ChatGPT or image generators, and accelerating scientific simulations (climate modeling, physics, etc.) that increasingly mix AI with traditional compute. With its beefy FP64 and huge memory, MI350 can handle HPC simulations or large graph analytics that need high precision and memory, while also crushing through neural network training in the same system. This flexibility, if delivered, could make AMD’s solution attractive to national labs and cloud giants alike. Pricing-wise, AMD has been aggressive: reports indicate AMD initially priced MI350X around $15k but, seeing high demand, raised it to ~$25k – still a bit lower than NVIDIA’s equivalent which is in the $30–40k range smbom.com smbom.com. Analysts view this as a sign that AMD feels confident in MI350’s value relative to NVIDIA’s Blackwell. As one tech outlet noted, “AMD believes the MI350’s performance can rival Nvidia’s Blackwell B200, and despite the price hike, the MI350 remains more affordable than Nvidia’s offering.” smbom.com
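
The pricing argument reduces to simple arithmetic, sketched below. The prices are the reported figures quoted above; the relative-throughput parameter is a hypothetical input (1.0 means the two GPUs do the same work), and the calculation deliberately ignores power, hosting, networking, and software costs, so it is a purchase-price view only.

```python
def perf_per_dollar_ratio(rel_throughput: float, price_a: float, price_b: float) -> float:
    """How much more work per purchase dollar accelerator A delivers than B.

    rel_throughput is A's throughput divided by B's (1.0 means parity).
    """
    return rel_throughput * price_b / price_a


MI350X_PRICE = 25_000               # reported price per GPU
B200_PRICE_RANGE = (30_000, 40_000)  # reported price range per GPU

for b200_price in B200_PRICE_RANGE:
    ratio = perf_per_dollar_ratio(1.0, MI350X_PRICE, b200_price)
    print(f"B200 at ${b200_price:,}: MI350X gives {ratio:.2f}x work per dollar at parity")
```

At throughput parity, the reported prices alone give a 1.2× to 1.6× purchase-price advantage, which brackets the 40% tokens-per-dollar figure AMD cites; whether that survives once power and software effort are counted is a separate question.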

Google TPU v6e “Trillium” – Architecture and Performance

Architecture Overview: Google’s TPU v6e, codenamed Trillium, is the sixth generation of Google’s proprietary Tensor Processing Unit – an AI accelerator ASIC designed specifically for neural network training and inference. Announced at Google I/O 2024, Trillium TPUs are built to maximize performance per dollar on Google’s targeted workloads (large transformers, recommendation models, etc.) in Google Cloud. Architecturally, a TPU v6e chip is quite different from a GPU: it contains one giant TensorCore that houses two matrix multiply units (MXUs) along with vector and scalar units cloud.google.com cloud.google.com. These MXUs implement massive systolic arrays for matrix math, tailored to BF16 (bfloat16) and INT8 computations (TPUs historically do not natively support FP32/FP64 arithmetic to save silicon). With Trillium, Google significantly scaled up the core: v6e’s MXUs are larger and run at higher clock speeds than in previous TPUs cloud.google.com. The result is a 4.7× increase in peak compute per chip compared to the last-gen Cloud TPU v5e cloud.google.com cloud.google.com. Concretely, a single TPU v6e chip delivers 918 TFLOPS of BF16 peak (up from ~197 TF on v5e) and about 1.836 POPS (PetaOPS) of INT8 throughput cloud.google.com cloud.google.com. Each chip is equipped with 32 GB of High Bandwidth Memory (HBM2e/HBM3) – double the 16 GB on v5e cloud.google.com cloud.google.com. Memory bandwidth per chip also doubled to 1.6 TB/s cloud.google.com cloud.google.com. While 32 GB per chip may sound small next to GPU memory, TPUs are meant to be used in swarms, and Google doubled the Inter-Chip Interconnect (ICI) bandwidth as well (from 1,600 Gbps to 3,200 Gbps per chip) cloud.google.com cloud.google.com. Each v6e chip has 4 ICI links forming a 2D torus topology with up to 256 chips in a TPU pod cloud.google.com cloud.google.com. In essence, Google’s design philosophy is to use many moderately sized chips working in concert, rather than one enormous die. 
A standard Cloud TPU v6e pod consists of 256 chips (spread across 32 host machines with 8 chips each), all tightly interconnected for collective training on very large models cloud.google.com cloud.google.com. Google also introduced the 3rd-gen SparseCore in Trillium – a specialized co-processor on each chip dedicated to accelerating large embedding and recommendation workloads (by handling sparse data lookups more efficiently) cloud.google.com cloud.google.com. This addresses use cases like ranking, embeddings for search/ads, etc., which are vital to Google’s business. Notably, TPUs traditionally operate on lower numeric precision; v6e supports bfloat16 and INT8, and there are hints it may support experimental 8-bit or 4-bit float formats, though Google hasn’t officially detailed that nextplatform.com nextplatform.com. (The Next Platform speculated that FP8/FP4 might be added to Trillium to keep up with industry trends nextplatform.com.) The process node and power for TPU v6e have not been publicly stated, but given the efficiency gains, it likely uses a 5nm or 4nm process. Each chip might consume on the order of ~200–250 W (prior TPU v4 chips were ~175 W), but exact figures are undisclosed. Google emphasized 67% better energy efficiency on v6e versus v5e cloud.google.com cloud.google.com, indicating far more performance per watt.

Performance and Scalability: A single TPU v6e chip delivers roughly 0.918 PFLOPS (918 TF) of BF16 and can be clustered massively, so performance is usually discussed at pod-scale. A full pod (256 chips) has a theoretical peak of ~234.9 PFLOPS BF16 aggregate and about 470 POPS (roughly 0.47 exaOP/s) of INT8 cloud.google.com cloud.google.com. In practice, Google has demonstrated impressive real-world performance with Trillium. Internal Google models such as Gemini 1.5 (next-gen foundation model) and Imagen 3 (image generator) were trained on TPU v6 hardware cloud.google.com. Google states that these TPUs enable training “the next wave of foundation models faster and serving them at reduced latency and cost” cloud.google.com cloud.google.com. For instance, in one public example, Google noted that switching from TPU v5e to v6e yielded 4.7× speed-up in a transformer-based workload at the chip level cloud.google.com. Another key metric is performance per TCO – Google lists v5e as 0.65× and v6e as 1.0 (baseline) in performance/TCO, implying v6e delivers ~54% better throughput per dollar than v5e in their cloud deployments cloud.google.com cloud.google.com. The doubling of memory and communication bandwidth means v6e can handle much larger models efficiently. With 32 GB per chip (and 8 chips per host), each TPU host has 256 GB HBM total, and an entire 256-chip pod has 8 TB of HBM spread across the mesh – effectively enabling training of models with tens to hundreds of billions of parameters in data-parallel or model-parallel regimes. In terms of benchmarks, Google publishes fewer MLPerf results for TPUs than NVIDIA does for its GPUs, so comparisons are partly anecdotal. TPU v4 had been roughly comparable to NVIDIA A100 in some tasks (at least when scaled to pods), and TPU v5e was a cost-optimized version of TPU v5. 
The v6e “Trillium” appears to close much of the gap with NVIDIA’s Hopper/Blackwell in raw compute: e.g., 918 TF BF16 is in the ballpark of an NVIDIA H100’s ~1,000 TF FP16, although a single Blackwell B200 at ~4,500 TFLOPS FP16 is much higher. However, Google can deploy 256 of these chips (or even larger multi-pod clusters with optical interconnects cloud.google.com), achieving performance that rivals supercomputers. Google also reports that TPU v6e is 67% more energy-efficient than v5e for the same work cloud.google.com, a crucial stat for reducing cloud costs. In real terms, this means tasks like serving a large language model can be done at much lower power consumption, which translates to savings for Google and its Cloud TPU customers. Trillium’s third-gen SparseCore boosts throughput on recommendation systems significantly – a domain where GPUs often struggle due to memory-bound sparse operations. By offloading embedding lookups to SparseCore, Google claims big gains for things like ad ranking models cloud.google.com cloud.google.com. Overall, TPU v6e’s performance is laser-focused on transformer models, CNNs, and embeddings. It likely does not perform FP32/FP64, so it wouldn’t be used for general HPC tasks, but for AI training at scale, it’s among the best – Google called it “our most performant and most energy-efficient TPU to date.”
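
The host-level and pod-level figures follow from multiplying the per-chip numbers, as the short sketch below shows. It uses only the per-chip values quoted in the text (918 TFLOPS BF16, 1.836 POPS INT8, 32 GB HBM) and assumes perfectly linear scaling, which real jobs will not achieve; the class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ChipSpec:
    """Per-chip peak figures as quoted in the text."""
    bf16_tflops: float
    int8_tops: float
    hbm_gb: int


TPU_V6E = ChipSpec(bf16_tflops=918.0, int8_tops=1836.0, hbm_gb=32)


def aggregate_peaks(chip: ChipSpec, n_chips: int) -> dict:
    """Scale per-chip peaks linearly; communication overhead is ignored."""
    return {
        "bf16_pflops": chip.bf16_tflops * n_chips / 1000,
        "int8_pops": chip.int8_tops * n_chips / 1000,
        "hbm_gb": chip.hbm_gb * n_chips,
    }


for label, n_chips in (("host (8 chips)", 8), ("pod (256 chips)", 256)):
    print(label, aggregate_peaks(TPU_V6E, n_chips))
```

The pod-level BF16 product comes out at about 235 PFLOPS, in line with the ~234.9 PFLOPS Google quotes (the small difference is presumably rounding in the per-chip figure), and the HBM total is 8,192 GB, i.e. 8 TB.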

Efficiency and Deployment: Google’s strategy with TPUs is to maximize throughput-per-dollar in the cloud. The Trillium TPU exemplifies this by offering a better price/performance ratio than previous TPUs and, Google implies, a competitive alternative to renting NVIDIA GPUs. One analysis noted that Google might price TPU v6e instances a bit over 2× the cost of v5e, but since they’re 4–5× faster, the net cost per unit of work still improves by >2× nextplatform.com. Indeed, Google markets v6e as the “highest value product” for a broad range of AI tasks (transformers, text-to-image, CNNs for vision) in Cloud TPU offerings cloud.google.com cloud.google.com. TPU v6e pods can also scale beyond a single pod: Google introduced multislice technology and Titanium IPUs to connect multiple 256-chip pods over its datacenter network fabric cloud.google.com cloud.google.com. This could allow thousands of TPU v6e chips to work together in a “building-scale supercomputer,” essentially unlimited scale-out for ultra-large models – a concept Google has hinted at for future AI infrastructure cloud.google.com. In terms of availability, Cloud TPU v6e is available (as of late 2024) in Google Cloud regions through instances like ct6e-standard VM types cloud.google.com. Developers can access configurations from a single chip (v6e-1) up to a full pod (v6e-256), or even multi-pod via special arrangements cloud.google.com cloud.google.com. Google provides integrations for TensorFlow and JAX to seamlessly utilize TPUs, and PyTorch is supported via the XLA compiler (many PyTorch models can run on TPUs with minimal code changes using PyTorch XLA). Common model serving frameworks like vLLM have been demonstrated on TPU v6e as well medium.com. The TPU ecosystem is more closed than CUDA/ROCm; you cannot buy a TPU and run it on-premises (outside of rare partnerships), so the users are essentially those on Google Cloud or internal Google teams. 
However, Google’s TPUs have proven their mettle by powering nearly all of Google’s own AI breakthroughs – from Transformer research to flagship models like PaLM and Gemini – and now they are offered to external customers who need massive scale without purchasing physical GPUs. A key quote from Google’s VP/GM of Systems and AI, Amin Vahdat, encapsulated the launch: “We’re excited to announce Trillium, our sixth-generation TPU, the most performant and most energy-efficient TPU to date.” cloud.google.com This underlines Google’s intent to keep pushing the envelope on specialized AI silicon to compete with general-purpose GPU makers.
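
The price/performance reasoning in this section can be checked with two one-line formulas. The sketch below assumes the figures quoted above (a price roughly 2× that of v5e, a 4–5× speed-up, and performance/TCO indices of 0.65 and 1.0); the function names are hypothetical, and the price ratio is an analyst estimate rather than a published list price.

```python
def cost_per_work_improvement(price_ratio: float, speedup: float) -> float:
    """Factor by which cost per unit of work falls (values above 1 mean cheaper)."""
    return speedup / price_ratio


def relative_gain(old_index: float, new_index: float) -> float:
    """Fractional improvement of new_index over old_index."""
    return new_index / old_index - 1.0


for speedup in (4.0, 4.7, 5.0):
    factor = cost_per_work_improvement(price_ratio=2.0, speedup=speedup)
    print(f"{speedup}x faster at 2x the price -> {factor:.2f}x cheaper per unit of work")

print(f"perf/TCO gain, v5e -> v6e: {relative_gain(0.65, 1.0):.0%}")
```

If the price ratio ends up somewhat above 2×, as the analysis suggests, the improvement shrinks proportionally, which is why the text's ">2×" holds only toward the upper end of the 4–5× speed-up range.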

Real-World Benchmarks and Power Efficiency Comparison

Each of these accelerators excels on different metrics, so comparing them requires context. NVIDIA’s B200 and AMD’s MI350 are more directly comparable (both are general-purpose GPUs for AI/HPC), whereas Google’s TPU is a specialized cloud ASIC for AI only. Still, it’s illuminating to see how they line up in the tasks they all target – namely training and serving large AI models.

- AI Training Performance (BF16/FP16): On paper, a single NVIDIA B200 delivers about 4.5 PFLOPS FP16 exxactcorp.com, roughly on par with a single MI350X (~5 PFLOPS FP16, per the figures above) and equal to about five TPU v6e chips (each 0.918 PFLOPS BF16) cloud.google.com. In practice, multi-GPU scaling and software maturity play big roles. NVIDIA demonstrated a Blackwell GPU cluster (GB200 NVL) training a giant LLM with record-breaking speed – per GPU, ~3× faster than the previous-gen H200 developer.nvidia.com developer.nvidia.com. AMD, meanwhile, claims ~1.13× training speed vs. NVIDIA in select tests tomshardware.com, but this likely refers to scenarios where MI355X’s extra memory or FP32/FP64 might aid convergence. Google’s TPU v6e can train models like PaLM efficiently when scaled to pods, but single-chip performance is below the GPUs. For instance, an 8-GPU B200 server (DGX B200) can outrun an 8-chip TPU host by a significant margin for training, given the DGX’s 72 PFLOPS FP8 (about 36 PFLOPS at FP16, the closer like-for-like figure) vs a TPU host’s ~7.3 PFLOPS BF16 total nvidia.com cloud.google.com. However, Google can deploy 32 such hosts (256 chips) to brute-force large jobs. In summary, for raw training speed on one server, B200 likely leads, followed by MI350 (which should be in the same ballpark), with TPU v6e reaching peak efficiency only at pod scale.

- AI Inference & Low-Precision Throughput: This is where all three have made big claims. NVIDIA advertises up to 15× inference performance gain over H100 nvidia.com, thanks largely to FP4. AMD touts 35× inference gain over MI300 and says MI355X beats B200 by 20–30% in some LLM inference tasks smbom.com. Google’s TPU v6e, with INT8 and potential FP8 support, is very inference-optimized (67% more performance per watt than before, largely for serving). Notably, NVIDIA’s Blackwell has the advantage of flexibility: running any model with existing GPU frameworks. AMD’s MI350 can leverage new FP4/FP8 in PyTorch/TensorFlow as those get supported, and AMD claims a 40% cost-per-token advantage for MI355X vs. B200 in inference serving smbom.com. Google’s TPU might win on absolute latency at scale – TPUs have specialized interconnect and batching for serving many requests (Google uses them to serve Search and Bard-like services globally). If cost is considered, a TPU v6e pod likely delivers inference at a lower cost than an equivalent GPU cluster for certain workloads, which is Google’s selling point. For example, one Google Cloud blog noted 2.3× better price-performance going from GPU to TPU solutions in some cases nextplatform.com. It really depends on the workload; transformers with heavy matrix math play to NVIDIA/AMD’s strengths, while recommendation and sparsity-heavy tasks play to Google’s SparseCore strengths.

- Memory and Model Size: AMD clearly leads in raw memory per accelerator: 288 GB HBM3e on MI355X tomshardware.com. NVIDIA’s B200 has 180 GB nvidia.com. Google’s TPU v6e has 32 GB per chip, but effectively 256 GB per 8-chip host and 8 TB across a pod cloud.google.com. For single-instance jobs (one model fitting in one accelerator), AMD can handle the largest models (e.g. a model needing 250 GB weights could sit on one MI355X, whereas it would need to be sharded across two B200s or 8 TPU chips). That said, both NVIDIA and Google use model parallelism to split large models across GPUs/TPUs. NVIDIA’s NVLink and NVSwitch allow multiple B200s to act like one big memory pool (8×180 GB = 1.44 TB in a DGX, which they did utilize to run 400B+ param models in MLPerf) nvidia.com developer.nvidia.com. Google’s TPU pod similarly pools 8 TB HBM total. So for ultra-large models (hundreds of billions of parameters), all can accommodate via distributed training – but AMD might let you do somewhat large models without distribution (a simpler programming model). Also, AMD’s memory bandwidth is a match for NVIDIA’s: 8 TB/s vs 8 TB/s tomshardware.com tomshardware.com, both far above TPU’s 1.6 TB/s per chip (though TPU compensates with more chips feeding the model). For models that are memory-bandwidth bound (like vision CNNs or sparse ML), the GPUs have an edge in raw BW per device.

- Power Efficiency: All three vendors improved perf/W, but how do they compare? NVIDIA hasn’t given a simple perf/W figure, but Blackwell’s perf per watt for AI likely doubled over Hopper. AMD explicitly says 4× compute for similar power by going CDNA3 → CDNA4, plus 3D stacking gains; MI350X’s performance per watt seems very high, albeit at high absolute watts. Google’s 67% energy efficiency gain is huge – TPU v6e performs nearly 5× the work at perhaps ~3× the power of v5e, so ~1.67× net perf/W improvement. It’s tricky to rank without identical metrics, but for inference throughput per watt, Blackwell using FP4 likely takes the crown (since FP4 doubles throughput with only small energy cost). AMD’s FP4 is similar and they specifically claim MI355X 20 PFLOPS with sparsity at 1.4 kW smbom.com, which is ~14.3 TFLOPS per watt for FP4 sparse. NVIDIA’s B200 at ~1.5 kW does 18 PFLOPS FP4 (no sparsity needed) exxactcorp.com, about 12 TFLOPS/W – slightly lower if these numbers are directly compared, although the AMD figure includes sparsity and the NVIDIA figure does not. With sparsity, Blackwell would also double, so it could hit ~24 TFLOPS/W in ideal cases. In sum, all are extremely power-efficient for AI relative to the previous generation, and differences may be within 10–20%. Real-world efficiency also depends on utilization (Google’s TPUs shine at high utilization in cloud datacenters, whereas self-hosted GPUs could idle at times).
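The arithmetic behind the comparisons in this list can be reproduced in a few lines. The sketch below is only an illustration: it uses the peak figures quoted in this article (vendor claims and estimates, not measured results), and the dictionary layout and helper names are hypothetical.

```python
import math

# Peak figures as quoted in this article (vendor claims, not measurements).
# The structure and names here are illustrative assumptions.
SPECS = {
    # fp4_pflops: B200 figure is quoted without sparsity, MI355X with sparsity.
    "B200":    {"hbm_gb": 180, "fp4_pflops": 18.0, "power_kw": 1.5},
    "MI355X":  {"hbm_gb": 288, "fp4_pflops": 20.0, "power_kw": 1.4},
    "TPU v6e": {"hbm_gb": 32},
}

def devices_needed(model_gb, hbm_gb):
    """Minimum devices to hold the weights alone.

    Ignores activations, KV cache, and optimizer state, so real
    deployments will usually need more.
    """
    return math.ceil(model_gb / hbm_gb)

def tflops_per_watt(pflops, kilowatts):
    """Peak TFLOPS per watt (PFLOPS -> TFLOPS and kW -> W are both x1000)."""
    return (pflops * 1000) / (kilowatts * 1000)

# How many devices does a model with 250 GB of weights need?
for name, spec in SPECS.items():
    print(f"{name}: {devices_needed(250, spec['hbm_gb'])} device(s) for 250 GB")

# Peak FP4 throughput per watt, using the power figures assumed above.
for name in ("B200", "MI355X"):
    spec = SPECS[name]
    ratio = tflops_per_watt(spec["fp4_pflops"], spec["power_kw"])
    print(f"{name}: {ratio:.1f} peak FP4 TFLOPS/W")

# Aggregate peak throughput of an 8-accelerator host at 16-bit precision.
print(f"DGX B200 (FP16): {8 * 4.5:.1f} PFLOPS")
print(f"TPU v6e host (BF16): {8 * 0.918:.2f} PFLOPS")
```

Because every input is a peak or rumored number, the outputs are best read as rough ratios; swapping in measured throughput and wall power would give a more useful comparison.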

In real enterprise terms, many organizations will evaluate throughput per dollar. NVIDIA’s hardware tends to be premium-priced; indeed B200 GPUs are rumored at ~$30k–$40k each smbom.com. AMD undercut that with ~$25k for MI355X smbom.com, aiming to offer more memory and similar speed for less. Google charges by usage; currently Cloud TPU v6e pricing isn’t public, but previous TPUs were on the order of $2–$3 per chip-hour. One source indicates B200 cloud instances are around $4/hour per GPU, whereas older MI300X were priced much higher on some clouds (due to limited availability) getdeploying.com. Thus, cost comparisons are fluid. One clear trend is that companies are increasingly considering diversifying their AI hardware to optimize cost: using GPUs for flexibility, TPUs for certain workloads or scale, and playing AMD against NVIDIA for price leverage nextplatform.com. Google’s TPUs initially served as a way to reduce their dependence on NVIDIA and drive internal research nextplatform.com, but with v6e they’re also courting cloud customers who might otherwise rent NVIDIA GPUs. AMD’s presence gives cloud providers and hyperscalers an attractive non-NVIDIA option, potentially curbing NVIDIA’s ability to command a huge price premium. For example, AWS and Azure could deploy AMD MI350-based instances to offer competitive performance at a lower price point than NVIDIA-based ones, appealing to cost-sensitive AI startups. In the end, the “best” accelerator depends on the use case: if you need the absolute highest performance on a single training job and mature software, NVIDIA’s Blackwell is a safe bet. If you need the most memory or want to mix HPC workloads, AMD’s MI350 might be ideal. If you’re running large-scale inference or training on Google Cloud, TPU v6e could offer the fastest and cheapest path, provided your models are compatible.
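As a rough illustration of a "per dollar" comparison, the sketch below divides the quoted specs by the rumored list prices. Every input is a figure cited in this article; the helper name and the use of the midpoint of the B200 price range are assumptions made for the example, and peak specs are a poor proxy for delivered throughput.

```python
# Rumored list prices and peak specs as quoted in this article.
CARDS = {
    # name: ((low price, high price) in USD, HBM in GB, peak FP4 PFLOPS as quoted)
    "B200":   ((30_000, 40_000), 180, 18.0),
    "MI355X": ((25_000, 25_000), 288, 20.0),  # 20 PFLOPS is the with-sparsity figure
}

def per_kusd(value, price_usd):
    """Return `value` per $1,000 of list price."""
    return value / (price_usd / 1000)

for name, ((low, high), hbm_gb, fp4_pflops) in CARDS.items():
    mid = (low + high) / 2  # midpoint of the quoted range (an assumption)
    print(
        f"{name}: {per_kusd(hbm_gb, mid):.2f} GB HBM per $1k, "
        f"{per_kusd(fp4_pflops, mid):.3f} peak FP4 PFLOPS per $1k"
    )
```

On these inputs the MI355X offers roughly twice the HBM per dollar of a B200 at the midpoint price. The compute-per-dollar ratio is far more sensitive to which peak figure (dense or sparse) is plugged in.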

Software and Ecosystem Support

All three platforms come with distinct software ecosystems, which can be as important as the hardware capabilities:

- NVIDIA (CUDA + AI Enterprise): NVIDIA’s stack is the most established. Developers use CUDA and libraries like cuDNN, TensorRT, NCCL, and others that have been highly optimized over a decade. Frameworks such as PyTorch and TensorFlow have native CUDA support, meaning models run on NVIDIA GPUs with minimal fuss. With Blackwell, NVIDIA also provides updates like the TensorRT-LLM package for large language models and the Transformer Engine integration that automates mixed precision (now including FP4) developer.nvidia.com. Additionally, NVIDIA’s AI Enterprise software suite (included with DGX systems) ensures stability and support for enterprise AI workflows, and tools like NeMo Megatron simplify training huge models on DGX clusters nvidia.com. The ecosystem advantage is evident: many AI researchers and engineers “think in CUDA” and benefit from extensive community support and documentation for NVIDIA GPUs. Blackwell GPUs can largely reuse Hopper-optimized software; NVIDIA released a Blackwell-optimized CUDA toolkit concurrently with hardware availability. This backward and forward compatibility is a big plus – code that ran on V100/A100/H100 can typically be recompiled or sometimes even run as-is on B200 (just running faster). NVIDIA’s close partnerships with software vendors and cloud platforms also mean even proprietary or closed-source AI software (e.g., certain enterprise AI platforms) often default to NVIDIA support first.

- AMD (ROCm + HIP): AMD’s ROCm (Radeon Open Compute) is an open-source GPU computing stack that has matured considerably in recent years. For MI300/MI350, AMD provides ROCm 6, which supports all major AI frameworks via either native backends or HIP (Heterogeneous-compute Interface for Portability). HIP is AMD’s CUDA-like portability API – it allows many CUDA codebases to be compiled for AMD GPUs with minimal changes. AMD worked with the PyTorch team on ROCm support in PyTorch, and with the TensorFlow and JAX teams to enable those frameworks on ROCm as well amd.com. By 2024, PyTorch on ROCm could run popular transformer models on MI300X with only slight performance deltas vs. NVIDIA (assuming the model wasn’t using NVIDIA-specific ops). Moreover, AMD has been optimizing popular model kernels (attention, transformer block, convolution, etc.) for CDNA architectures. One highlight: Hugging Face’s nightly testing of 700k models on MI300X ensures that the model hub’s breadth is being validated on AMD amd.com, which gives confidence that many architectures (BERT, GPT, Stable Diffusion, etc.) will work on MI350 at launch. ROCm still has a smaller user community than CUDA, and tooling and debugging on AMD can be more challenging because the stack is newer. But AMD is addressing this by collaborating with universities, national labs, and cloud providers to build a base of expertise around Instinct GPUs. They also emphasize open standards – support for PyTorch/XLA (used also by TPUs) and OpenMP offloading for HPC code, for example. The software gap is closing; if an organization’s models rely on custom CUDA kernels, porting to AMD might require effort, but for many mainstream models it’s increasingly “swap and run”. AMD’s MI350 will also leverage MIOpen (analogous to cuDNN) for deep learning routines and AOMP compilers for HPC. One challenge is that AMD is moving quickly – MI250, MI300, and MI350 all have some differences – so software needs to keep up with new features like FP4/FP6.
But given that MI350 uses the same UBB platform and largely extends MI300, software support should be ready (the MI350 was mentioned as being used as a “proxy” in projections during MI300X performance evaluations amd.com, implying software prototypes were in testing). In summary, the AMD ecosystem is not as plug-and-play as NVIDIA’s yet, but for committed users, it’s viable and improving fast, lowering the barrier to adopting MI350 GPUs especially in controlled environments like supercomputers or managed cloud instances.

- Google TPU (TensorFlow & JAX on Cloud TPU): The TPU v6e’s ecosystem is quite different because it’s only available as a service. Users don’t manage drivers or low-level libraries; instead, they access TPUs via Google’s Cloud TPU API or through integrations in TensorFlow and JAX. Google provides the TPU compiler (XLA), which transforms computational graphs into TPU executable code. For TensorFlow, it’s almost seamless – you can distribute a model on TPUs using high-level APIs (tf.distribute.TPUStrategy). For JAX (which Google favors for research), the jax.jit compiler can target TPUs effectively, and many Google research models are written in JAX+Flax to run on TPUs. PyTorch support on TPUs historically lagged, but with TPU v5e and v6e, Google and Meta collaborated on PyTorch XLA, which allows PyTorch code to run on TPUs with minimal modifications. That is crucial, as PyTorch is the dominant framework in industry. There are some limitations (not every PyTorch operation is TPU-compatible, and performance tuning is sometimes needed), but many common models can be executed. Additionally, Google’s tooling, such as tf.data pipelines, the TPU profiler, and Cloud Monitoring dashboards, is available to help optimize input pipelines and debug performance issues on TPUs cloud.google.com. One advantage of the TPU ecosystem is that you get a managed experience – you don’t worry about software versions or driver mismatches; Google ensures the whole stack (from silicon to cooling) works out of the box. The downside is less flexibility: you can’t, for example, write custom kernel code for TPUs (outside of writing custom ops in TensorFlow’s compilable subset). If your model uses an operation that XLA doesn’t support, you have to wait for Google to add it or find a workaround. Also, certain types of models (like those heavy in dynamic control flow) don’t map well to TPU’s batch-oriented, statically compiled paradigm.
But for the bread-and-butter of deep learning – large matrix multiplications – TPUs are highly efficient. Google has also pre-built high-performance implementations for things like multi-head attention, Transformer layers, etc., within their TensorFlow and JAX libraries for TPU. They continuously incorporate their research (such as optimizations for mixture-of-experts, efficient sharding strategies, etc.) into the cloud TPU software. So, if your use case aligns with Google’s (LLMs, vision models, etc.), TPU software can be very robust. Another part of the TPU ecosystem is Google’s higher-level services: for instance, Pathways (for multi-host inference) and managed solutions like Vertex AI may leverage TPUs under the hood. This further abstracts the hardware from the end-user, making TPU’s benefits accessible without hardcore engineering effort. In essence, TPU v6e’s ecosystem is ideal for those already in the Google Cloud and TensorFlow/JAX world, but it’s less accessible to those wanting on-prem or full control (which is by design, as Google uses TPUs to draw users into its cloud platform).

Deployment Scenarios and Availability

NVIDIA, AMD, and Google each have distinct deployment models and timelines for these accelerators:

- NVIDIA Blackwell B200 Availability: NVIDIA’s Blackwell GPUs were introduced around GTC 2024, and by late 2024 they moved into full production. DGX B200 appliances (8× B200 GPUs) are now the cornerstone of NVIDIA’s AI data center offerings, with shipments to key customers and NVIDIA’s own cloud (DGX Cloud) starting around Q4 2024 developer.nvidia.com. By 2025, major cloud providers like AWS, Azure, GCP, and Oracle are expected to offer B200 instances, as indicated by NVIDIA developer.nvidia.com. Enterprises can also buy HGX B200 server boards through OEMs like Dell, HPE, Supermicro, etc. The DGX B200 (with 8 GPUs, dual Xeon CPUs, networking, 1.44 TB HBM total) is a high-end system costing on the order of $500K+ (rumored around $515k) modal.com. Per-GPU pricing is in the tens of thousands; industry chatter suggests a single B200 card lists for $30K–$40K depending on volume smbom.com. Early adopters include research labs training frontier AI models and companies building private AI clusters – essentially those who pushed Hopper H100 to the limits and need the next boost. NVIDIA is also deploying Blackwell GPUs in its superchips: the Grace-Blackwell GB200 (1 Grace CPU + 2× B200 on one board, connected by NVLink-C2C) is geared for giant models that benefit from tight CPU-GPU integration and huge memory (the Grace CPU adds 512 GB LPDDR memory). This GB200 “Ultra GPU” configuration is aimed at HPC-AI fusion workloads and is slated for 2025, with a price tag rumored around $60K–$70K per module nextplatform.com. In summary, Blackwell will be ubiquitous at the high end of AI by 2025 – any organization that can afford them and needs the best performance for training or inference will consider deploying B200s, either in on-prem clusters (for data sovereignty or consistent utilization needs) or via cloud rentals (for occasional scaling). 
NVIDIA’s extensive partner network ensures that from SuperPOD solutions down to smaller 4-GPU servers, Blackwell GPUs will be available in various form factors.

- AMD Instinct MI350 Availability: AMD has outlined that the MI350X and MI355X accelerators are expected to be available in 2025 amd.com amd.com. The first silicon was demoed in mid-2024, and by mid-2025 AMD indicated they had begun shipping production MI350X units (in limited quantities) tomshardware.com tomshardware.com. Volume ramp is anticipated through late 2025 as major deployments come online. One highly anticipated deployment is in the U.S. DOE’s El Capitan supercomputer (at Lawrence Livermore National Lab), which will use a variant of MI300 – potentially upgraded to MI350 by the time of installation. Additionally, cloud giants like Microsoft have publicly aligned with AMD: Azure announced intent to offer MI300-series VMs, and one can infer MI350 will follow if performance proves out tomshardware.com. There are also reports of Meta evaluating MI300/MI350 for their AI infrastructure, which if successful, could lead to large orders (Meta historically has used mostly NVIDIA, but cost pressures may drive them to mix in AMD). ODMs such as Lenovo and Supermicro showcased systems supporting MI300 OAM modules, so those will support MI350 as well. However, early on, availability may be limited – AMD is gaining traction but still ramping up supply chains and software support. As the press reporting of price hikes indicates, demand is strong enough that AMD felt confident to raise MI350 prices by ~66% mid-2025 smbom.com smbom.com, perhaps due to large pre-orders. We might see MI350 accelerators in cloud offerings by late 2025 or early 2026; one challenge is that hyperscalers need time to validate software and performance at scale. AMD’s annual roadmap cadence (Instinct MI400 expected in 2026) amd.com amd.com suggests MI350 will be the flagship throughout 2025. As for use cases, initial customers will likely be those who need the memory capacity (e.g. training very large models or doing big data analytics) or those seeking better pricing than NVIDIA. 
Enterprises with existing CUDA code might adopt AMD slowly, but those building new workloads (especially on PyTorch or for inference serving) could jump in if ROCm support is solid. The OEM ecosystem for AMD Instinct is also growing: HPE, Dell, and others will offer server models with MI350 for customers who request them. AMD is positioning MI350 for both AI mega-projects and mainstream cloud – expect to hear announcements of MI350 deployments in supercomputers, cloud AI platforms, and perhaps even in some OEM’s turnkey AI systems (similar to DGX, but AMD-based). Pricing-wise, as noted, AMD undercuts NVIDIA slightly: maybe ~$25k per GPU in large quantities smbom.com. If those prices hold, one might see an 8xMI355X server (with ~2.3 TB HBM total) priced somewhat below an NVIDIA DGX of similar scale – a tempting value proposition if performance meets the hype.

- Google Cloud TPU v6e Availability: TPU v6e (Trillium) was announced in May 2024 and made available shortly thereafter in Google Cloud (it was showcased at SC24 in Nov 2024 as well) servethehome.com. Google typically introduces TPU availability region by region; by the end of 2024, TPU v6e pods (256 chips) were likely accessible to select Google Cloud customers (Google often lets Google Research teams and select partners use new TPUs first, then opens them more broadly). Official documentation shows support for TPU v6e instance types from 1 chip up to 256 chips cloud.google.com, which means they’re essentially publicly usable now. Any Google Cloud user can request these via the Cloud console or API (assuming quota is approved, since these are expensive resources). Deployment scenario for TPU v6e is essentially Google Cloud’s data centers – unlike GPUs, you cannot deploy TPUs on-premise. So, the target users are those willing to run their training/inference on GCP. Notably, Google has integrated TPU v6e into its own products: e.g., Google’s internal AI research likely shifted to v6e for new model development (Gemini, etc.), and services like Google’s Bard AI or Search with AI features are presumably leveraging v6e pods to serve queries efficiently. Google also offers TPUs through its Vertex AI managed service for a higher-level interface (so a customer might not even know they’re using a TPU, just that their model trains fast on Vertex AI). As of early 2025, TPU v6e is one of the most advanced offerings on GCP’s menu, and Google will likely expand availability as demand grows. In terms of cost, Google hasn’t published v6e pricing at the time of writing; for reference, earlier TPU v4 pods cost thousands of dollars per hour. It’s expected that TPU v6e will be priced premium but still competitive against renting an equivalent number of high-end GPUs. 
Google’s cloud TPU strategy historically was to be cheaper than GPU for training (TPU v3 was quite cost-effective vs V100). If that holds, we might see v6e offered at a rate that yields ~50% better $/training-unit than an H100-based VM. One community source indicated a TPU v6e pod (256 chips) might cost on the order of $1000+/hour, which is only viable for well-funded efforts but can be more efficient than managing dozens of GPU servers for a shorter burst. Overall, TPU v6e deployment is tied to Google Cloud’s success in attracting AI workloads. Some companies (like Midjourney for image generation, or Character AI for chatbots) reportedly utilize Google TPUs on the backend – these v6e upgrades will directly benefit them. The constraint is that those solutions must be in GCP; companies heavily invested in AWS/Azure might skip TPUs for logistical reasons. But for those open to multi-cloud or already in GCP, v6e offers a powerful tool. Google will continue to use TPUs as a way to differentiate its cloud for AI – essentially saying, if you want the fastest training for the lowest cost, come to GCP and use TPUs instead of renting 3rd-party GPUs. It’s a different play from NVIDIA and AMD, which sell hardware directly or through OEMs.
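To put the rental figures in this section on a common footing, the sketch below converts the community-sourced pod estimate into a per-chip-hour rate and prices a short burst job. The $1000/hour input is the unofficial estimate quoted above, not a published price, and the function names and the 24-hour job length are hypothetical.

```python
def per_chip_hour(pod_usd_per_hour, chips):
    """Implied hourly rate for one chip when a whole pod is rented."""
    return pod_usd_per_hour / chips

def job_cost(usd_per_hour, hours):
    """Total rental cost for a job that holds the resource for `hours`."""
    return usd_per_hour * hours

# Community estimate quoted in this article: ~$1000/hour for a 256-chip v6e pod.
rate = per_chip_hour(1000, 256)
print(f"implied TPU v6e rate: ${rate:.2f} per chip-hour")

# Hypothetical 24-hour burst on the full pod at that rate.
print(f"24-hour full-pod burst: ${job_cost(1000, 24):,.0f}")
```

At that estimate the implied rate is a little under $4 per chip-hour, which is in the same range as the roughly $4/hour per-GPU B200 cloud figure cited earlier in the article, so the cost case for TPUs would rest on delivered throughput per chip rather than on the hourly rate alone.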

Next-Generation Outlook and Competitive Landscape

The current battle between NVIDIA’s Blackwell, AMD’s Instinct MI300/350 series, and Google’s TPUs is intense, but the roadmap ahead suggests it will only heat up further. Each company has plans for the next generation that will build on the strengths (and try to address weaknesses) of these current chips:

- NVIDIA’s Next Gen (Post-Blackwell): NVIDIA has historically operated on a 2-year cadence for major GPU architectures. After Blackwell (2024/2025), the next architecture – name unconfirmed, but likely another famous figure’s name – can be expected around 2026 or 2027. While details are scarce, some educated speculation can be made. First, NVIDIA will probably continue its strategy of specializing GPUs for AI. In Blackwell, they already downplayed FP64; in the future, we might see an even bigger divergence where NVIDIA offers separate product lines or modes for “AI accelerators” vs “HPC accelerators.” In fact, some reports suggest NVIDIA might split its flagship GPUs into specialized lineups for AI vs. HPC use cases tomshardware.com. This could mean one variant with maximal tensor core density and another with extra FP64/FP32 for scientific computing. There’s also the trend of GPUs integrating more with CPUs: NVIDIA’s Grace CPU (Arm-based) is part of their platform now, and the Grace+Blackwell “GB” superchips foreshadow tighter coupling of CPU memory and GPU memory. It wouldn’t be surprising if the next-gen architecture introduces something like a unified CPU/GPU package or broader coherence across CPU-GPU memory (NVLink-C2C already provides cache coherence between Grace and GPU). Another likely feature is the continued expansion of memory and interconnect – HBM4 or HBMNext memory could appear, pushing memory bandwidth toward 10+ TB/s per GPU. NVIDIA might also move to a smaller process node (perhaps TSMC 3nm or even 2nm if timeline is 2026/27), yielding more transistors to allocate. Those could be used for larger SM counts, more cache, or new functional units. One intriguing possibility is AI-specific accelerators on GPU: for instance, Blackwell added the Transformer Engine for FP8/FP4. Future GPUs might add dedicated hardware for things like recommendation models (an area AMD and Google have targeted with SparseCore and such). 
Jensen Huang has also hinted at long-term research in optical interconnects, DPUs, and integrating photonics – perhaps not in the immediate next gen, but on the horizon to keep scaling networking. In terms of positioning, NVIDIA will aim to maintain its leadership in end-to-end AI solutions. By the time AMD’s MI400 or MI500 arrives, NVIDIA will counter with its next architecture. The competition likely ensures that NVIDIA’s next-gen will be significantly more powerful (maybe another 2–3× leap in AI performance) to stay ahead. Pricing might also adjust – if AMD continues to challenge on price, NVIDIA might offer more “graceful” (no pun intended) cost options, maybe via cloud partnerships or bundling (e.g., discounts on DGX Cloud credits). One thing to watch is NVIDIA possibly venturing into more domain-specific chips: they already have GPUs and DPUs, but will they ever create a TPU-like dedicated matrix ASIC? So far they haven’t needed to, but if Google’s approach started encroaching on NVIDIA’s market, NVIDIA could respond by making a variant of its GPU that is stripped down to only tensor cores and sold as an inference accelerator. For now, though, the GPU approach offers flexibility that serves them well.

- AMD’s Next Gen (MI400 & Beyond): AMD has publicly shared a rough roadmap: after CDNA4 (MI350 series in 2025), the CDNA “Next” architecture will power the Instinct MI400 series expected in 2026 amd.com amd.com. Interestingly, some early info suggests MI400 might be a substantial leap, possibly even pulling in tech from what might have been MI500 plans nextplatform.com. An updated report claimed an MI400X GPU could be ~10× faster than MI300X and is slated for a new “Helios” rack-scale system with EPYC “Venice” CPUs tomshardware.com. This hints that AMD is working not just on the chip but on system-level innovation (similar to NVIDIA’s Grace+GPU strategy). There was also mention of an Instinct MI450X with IF128 (Infinity Fabric 128 lanes) for 2026 tomshardware.com, which suggests a design that can connect 128 GPUs in a rack seamlessly – analogous to NVIDIA’s NVLink Switch networks. For MI400, we can expect a move to an even newer process (possibly TSMC 2nm if timeline aligns, or a refined 3nm), more advanced 3D packaging (maybe even integrating HBM on top of compute dies, etc.), and likely further increases in memory capacity and bandwidth. AMD will probably incorporate whatever new memory technology is available (HBM4 or HBM3e with even higher densities). On the compute side, given AMD’s progress, MI400 might introduce support for even smaller data types (FP2? Int4 fully? who knows) and more aggressive use of sparsity and AI-focused instructions. AMD’s recent announcements about future AI products (like a mention of “Radeon AI” GPUs for the prosumer market tomshardware.com) indicate they want to cover more of the market. By MI400 or MI500, AMD may also unify some features from consumer GPUs (gaming) into the AI line (for example, leveraging RDNA graphics cores for certain mixed workloads if needed). Positioning-wise, AMD is clearly targeting leadership performance per dollar. 
If MI350 gains traction, MI400 will double down, possibly leapfrogging NVIDIA in certain metrics if they can. For example, AMD might choose to far exceed NVIDIA in memory (imagine MI400 with 512 GB HBM) or in arithmetic precision support (AMD might keep full FP64 rate, appealing to HPC centers, while NVIDIA has cut back – thus winning HPC deals). AMD also acquired Xilinx, so a wild card is how they might integrate FPGA or adaptive logic into their accelerators. One could envision future Instinct platforms that include some reconfigurable cores for specialized tasks. In short, AMD’s roadmap shows annual cadence now amd.com – they are no longer a generation or two behind NVIDIA; they are running neck-and-neck. If they execute well on MI400 in 2026 and MI500 around 2027 (as some leaks like “MI500X in 2027” suggest tomshardware.com tomshardware.com), AMD could emerge as a co-leader in AI accelerators, forcing a truly competitive market. That’s good for buyers, as it tends to drive costs down and innovation up.

- Google’s Next Gen (TPU v7 and More): Google has not officially talked about a TPU v7, but it’s almost certain they’re working on it. Historically, Google has released a new TPU generation roughly every 1–1.5 years (TPU v4 in 2020–21, v4i/v5e in 2022–23, v6 in 2024). So a TPU v7 could land in 2025 or 2026. One interesting wrinkle is that Google often ships a variant alongside the main chip: for v4 they had v4 and v4i (inference-optimized), and for v5 they had v5e (a cheaper, scale-out version). The Next Platform piece speculated that TPU v6 might also get a “v6i” inference-oriented chip later nextplatform.com. If so, Google could introduce a TPU v6i with perhaps fewer MXUs but more chips per pod, or some similar trade-off, to maximize throughput per dollar for serving. Alternatively, TPU v7 might unify both needs. Technically, we might see Google incorporate support for even lower precision; since others have FP4, Google will likely ensure TPU v7 supports FP8 and FP4 (probably either software-emulated within the bfloat16 format or via new hardware). Google could also increase memory – a long-standing limitation of TPUs has been relatively low memory per chip, but in v6 they doubled it to 32 GB. If HBM3 and 3D packaging allow, TPU v7 could go to 64 GB per chip, for example, which would help with larger models and reduce the amount of sharding needed. Another area is interconnect: Google already uses optical links for multi-pod scaling, but they might move to a 3D torus or other topologies to improve the latency of collective operations. We should also expect more integration with Google’s networking and infrastructure: the mention of “Titanium IPUs” for connecting pods cloud.google.com suggests Google might incorporate intelligent network switches or photonic interposers in future systems to scale even bigger (tens of thousands of chips). In terms of competition, Google’s TPU program is inward-focused (to support Google products) but also outward-facing to attract cloud customers.
They will continue to position TPUs as the most cost-effective way to train huge models – even if NVIDIA or AMD have faster chips, Google can argue their TPU pods, at scale, deliver results cheaper (since Google controls the whole stack and can price aggressively). One challenge for Google is the software gap for outsiders – they might need to invest more in tools that make migrating from GPU to TPU easier. If not, many will still choose GPUs despite cost, simply due to familiarity. It wouldn’t be surprising if Google eventually offers larger memory TPUs or hybrid systems (imagine a TPU with some integrated CPU or more DRAM) to make them more general-purpose. For now, though, Google will likely keep TPUs focused: pure ML number-crunchers that it deploys by the thousands in its data centers. As generative AI demand grows, Google will scale TPU deployments to meet its needs (for Search, Maps, YouTube recommendations, etc.), meaning TPU v7 and beyond will be central to Google’s own AI future. Also of note, other hyperscalers might respond by developing their own chips (Amazon has Trainium and Inferentia ASICs, though not as advanced yet; Microsoft is rumored working on an AI chip too). So Google won’t be alone – but TPU v6e and v7 set a high bar for any newcomers. In the broader landscape, if cloud customers increasingly flock to TPUs for cost reasons, that could pressure NVIDIA and AMD to adjust pricing or cloud strategies. However, many enterprises will still prefer the flexibility of GPUs unless Google TPUs become significantly easier to adopt without lock-in.

Competitive Landscape Summary: In 2025, we have a three-way competition where NVIDIA dominates market share and ecosystem, AMD is aggressively closing the gap with a compelling hardware value proposition, and Google is leveraging its vertically integrated approach to push AI capabilities at scale. NVIDIA’s Jensen Huang often notes that we’re in a “new computing era” driven by generative AI, and NVIDIA wants to be for this era what it was for the PC and gaming era – the default platform. AMD’s CEO Lisa Su, on the other hand, emphasizes choice and openness, and VP Brad McCredie describes AMD Instinct as offering “exceptional performance and value” in a market that badly needs more than one supplier tomshardware.com. Google’s leadership (like Amin Vahdat) highlights efficiency and sustainability – the fact that TPUs can do more work per watt, aligning with both cost and environmental goals cloud.google.com. Ultimately, each company is playing to its strengths: NVIDIA with a tightly coupled full-stack strategy, AMD with a cost-disruptive and memory-rich approach, and Google with a hyperscale-optimized bespoke solution. For customers and the industry, the good news is that AI hardware performance is skyrocketing while software is gradually abstracting away the differences. It’s conceivable that future frameworks will allow dynamic targeting of whatever hardware is best for a given workload (e.g., train on GPUs, infer on TPUs, etc.).

Conclusion: The NVIDIA Blackwell B200, AMD Instinct MI350, and Google TPU v6e are all marvels of engineering responding to the insatiable demand for AI compute. NVIDIA’s B200 brings blistering speed and a trusted ecosystem, AMD’s MI350 offers enormous memory and competitive performance at (potentially) lower cost, and Google’s TPU v6e delivers remarkable efficiency and scale for those in its cloud. As we move through 2025 and beyond, we’ll see these accelerators deployed in everything from supercomputers tackling scientific grand challenges to the behind-the-scenes AI powering internet services used by billions. The competition is driving innovation at a breakneck pace, with each new generation promising larger models, faster training times, and more intelligent applications. For AI practitioners, it’s an exciting time, with a richer hardware menu than ever. And for the industry, this three-way showdown will shape how AI infrastructure is built and priced in the coming years, ultimately influencing how quickly we can advance AI capabilities in a sustainable, accessible way. In the words of one analyst, “the AI compute maze” is only getting more complex and more powerful engineering.miko.ai, and the entrants we’ve discussed here are leading the charge in navigating that maze toward the next breakthroughs.

Sources:

- NVIDIA DGX B200 product page nvidia.com

- NVIDIA MLPerf Technical Blog (Blackwell performance) developer.nvidia.com

- Exxact Corp. – Blackwell vs. Hopper specs exxactcorp.com

- Tom’s Hardware – AMD MI350/MI355X coverage tomshardware.com

- AMD Press Release – Instinct roadmap (Computex 2024) amd.com

- SMBOM News – AMD MI350 price hike and performance claims smbom.com

- Google Cloud Blog – TPU v6e “Trillium” announcement cloud.google.com

- Google Cloud Docs – TPU v6e architecture & specs cloud.google.com

- ServeTheHome – TPU v6e at SC24 report servethehome.com

- NextPlatform – Analysis of TPU v6 (Trillium) nextplatform.com

- Lightly.ai Blog – B200 vs H100 real-world benchmarks lightly.ai

- Tom’s Hardware – MI350X launch (Paul Alcorn, 2025) tomshardware.com

- Tom’s Hardware – Computex 2024 (Anton Shilov) tomshardware.com

- AMD Instinct MI350X specs (Tom’s Hardware table) tomshardware.com

- Yahoo Finance / SeekingAlpha – pricing info (via HSBC report) smbom.com

- AMD VP Quote (Brad McCredie)