- 360° Space Camera Debut: Jeff Bezos shared spectacular footage from a free-flying 360-degree camera deployed during Blue Origin’s New Shepard suborbital launch on September 18, 2025 techeblog.com. The untethered camera drifted alongside the rocket, capturing a dramatic external view of the booster high above Earth.

- “Spinning Glass” Explained: Viewers were puzzled by a rotating “bubble” artifact in the video. Bezos clarified that this “bubble” was simply the seam between two 180° lenses on the panoramic camera petapixel.com. The camera’s 360° footage is stitched from dual wide-angle lenses, and when engineers stabilized the shaky video to keep the rocket centered, the lens seam showed up as a spinning glass-like distortion petapixel.com.

- Social Media Reactions: The breathtaking view from space drew both praise and critiques online. Elon Musk replied “epic view” under Bezos’s post petapixel.com, while some viewers found the spinning seam distracting: one user commented “That is really annoying to watch. Please do better” and others urged Bezos to use better stitching tech petapixel.com. The strange artifact even led some to wonder whether a lens cap or glass cover had been left spinning in front of the camera by accident.

- Tech Mystery – Recovered or Lost?: It remains unclear what became of the free-flying camera after filming. Blue Origin has not confirmed whether the device was recovered (for example, by reattaching to the capsule or descending under its own parachute) or was simply a disposable unit that fell back to Earth petapixel.com techeblog.com. Bezos’s team has not yet disclosed whether the camera will be a regular feature on future flights.

- Part of a Bigger Trend: Bezos’s space camera stunt highlights a broader push toward autonomous cameras and drones in space. NASA and other agencies have experimented with free-flying camera robots for years – from the 1997 AERCam Sprint spherical camera tested on a Space Shuttle ntrs.nasa.gov ntrs.nasa.gov to modern ISS “Astrobee” helper bots nasa.gov nasa.gov and Japan’s adorable floating camera drone Int-Ball maxongroup.com. The success of Blue Origin’s camera suggests future missions could deploy their own “camera satellites” to document launches, inspect spacecraft, and bring stunning views to the public.

Bezos Unveils a Futuristic Free-Flying Space Camera

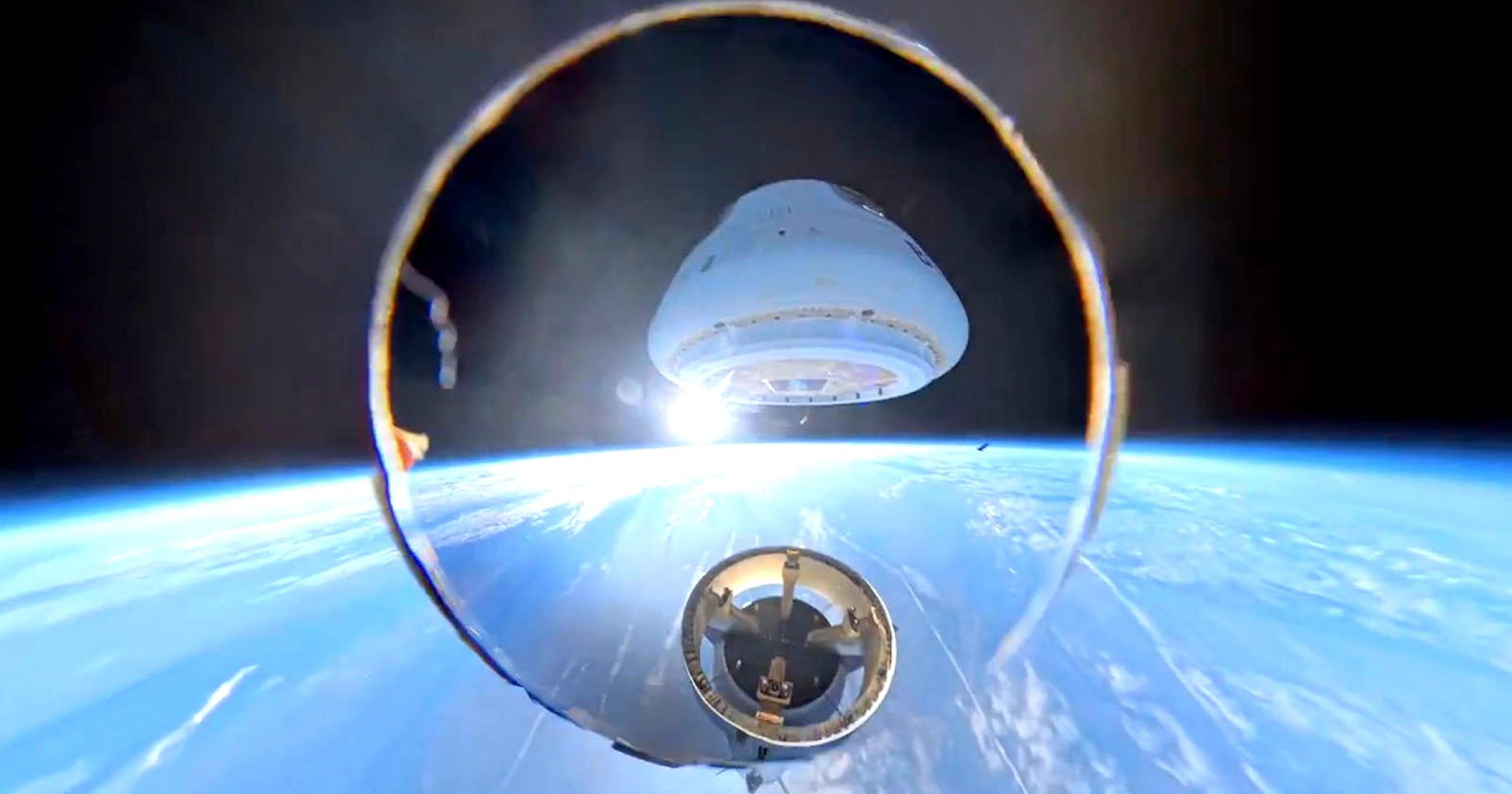

A frame from Blue Origin’s New Shepard NS-35 flight footage, captured by the free-flying 360° camera after it detached in space. The camera’s perspective shows the booster and capsule above Earth’s horizon, offering a dramatic “third-person” view of the rocket.

Jeff Bezos took to social media to reveal an “amazing” free-flying space camera that was deployed during Blue Origin’s latest suborbital flight. The camera was released on the New Shepard NS-35 mission (launched September 18, 2025 from West Texas) to independently float alongside the rocket and capsule techeblog.com. In the short clip Bezos shared, the camera’s eye provides a spectator’s view of the booster coasting in the blackness of space with Earth’s blue curve below. The Amazon founder exulted in the footage, tweeting “What a view. From our new free flying camera – deployed on yesterday’s New Shepard mission.” He then preemptively explained the strange visual artifact in the video, noting that “the ‘bubble’ is the seam between two 180 degree lenses.”

This free-flying camera represents a new twist on space launch photography. Traditionally, rocket companies mount cameras directly on the vehicle (pointing at stages or the Earth) or rely on ground and airborne tracking cameras. In this case, Blue Origin engineers released an untethered camera unit into microgravity, essentially creating a tiny free-flying cameraman that drifted along with the rocket to film it from a short distance. Bezos described it as a 360-degree camera system: two 180-degree lenses whose images are stitched together to form a complete sphere of view techeblog.com techeblog.com. By detaching from the booster, the camera could capture the entire rocket in frame, something on-board cameras cannot do. The result was a spectacular third-person perspective of the booster and capsule separating against the backdrop of Earth, a scene usually only imaginable via CGI or complex drone choreography.

The New Shepard NS-35 flight itself was an uncrewed suborbital mission carrying over 40 science payloads, ranging from student experiments (via NASA’s TechRise program) to university research projects techeblog.com. During the roughly 10-minute flight, the rocket crossed into space (the Kármán line, about 62 miles or 100 km up) and then returned safely: the booster performed a powered upright landing, and the capsule parachuted down a few minutes later techeblog.com. At some point during the coast phase, the team “slipped out this camera and let it go, no strings or rails” techeblog.com. Freed from any mount, the camera pod drifted on its own, likely tumbling as it filmed the capsule’s separation from the booster. This innovative setup gave mission controllers and the public an unprecedented view of the flight, effectively letting them watch the rocket from a companion “eye” in space.

The Mystery of the Spinning “Glass” in the Footage

While the footage was stunning, it also left viewers scratching their heads about that odd, spinning “glass” visible in front of the camera. In the video, a translucent curved line – which Jeff Bezos dubbed the “bubble” – can be seen rapidly rotating in the center of the frame. Social media buzzed with questions: What on Earth (or space) is that spinning thing? Was it a lens cover? A protective dome? A glitch in the camera?

According to Bezos, the answer is much more mundane: it’s the image seam of a 360° camera petapixel.com. The camera uses two back-to-back 180° fisheye lenses to capture the entire sphere of view. This design is common in consumer 360-degree cameras (like Insta360 or GoPro Max devices) – they record two ultrawide images and then software “stitches” them into one seamless panoramic video. However, where the two hemispherical images meet, there is inevitably a “stitch line” or seam. On a well-calibrated 360° camera, that seam might barely show as a vertical blur or distortion. But in Blue Origin’s space video, that seam manifested as an obvious spinning halo.
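The geometry of the seam can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Blue Origin’s or any camera maker’s actual stitching code: it assumes the two 180° lenses point along +x and −x in the camera body frame, and the helper names `direction_from_lonlat` and `lens_for`, plus the 2° blending band, are hypothetical. Each view direction belongs to whichever lens it faces, and the directions perpendicular to the lens axis (x = 0) form the seam.

```python
import math


def direction_from_lonlat(lon_deg, lat_deg):
    """Unit view vector for a point in an equirectangular panorama (degrees)."""
    lon, lat = math.radians(lon_deg), math.radians(lat_deg)
    return (math.cos(lat) * math.cos(lon), math.cos(lat) * math.sin(lon), math.sin(lat))


def lens_for(direction, seam_band_deg=2.0):
    """Classify a body-frame view direction as 'front', 'back', or 'seam'.

    Illustrative assumption: the two 180-degree lenses look along +x and -x,
    so the seam is the great circle where x == 0. Directions within
    seam_band_deg of that circle are labeled 'seam' (the blending zone).
    """
    x = max(-1.0, min(1.0, direction[0]))
    # Angle between the view direction and the seam plane x == 0.
    angle_from_seam = math.degrees(math.asin(x))
    if abs(angle_from_seam) < seam_band_deg:
        return "seam"
    return "front" if x > 0 else "back"
```

On the horizon, `lens_for` returns "front" at 0° and 45° longitude, "seam" at 90°, and "back" at 135° and 180°. Because x = cos(lat)·cos(lon) is zero only at lon = ±90°, the seam sits at those two longitudes at every latitude, which is why a steady dual-lens camera shows it as fixed vertical lines in the flattened panorama.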

Why was the seam so prominent and moving? The culprit is the motion of the camera and the subsequent stabilization process. The free-flyer was not stabilized in space – in fact, it was likely tumbling end-over-end once it left the rocket techeblog.com. The raw footage would have been a dizzying whirl, with the rocket and Earth whipping in and out of frame. To make the video watchable, Blue Origin’s team ran the footage through image stabilization software, effectively rotating and reframing the video so that the rocket stays centered in view petapixel.com. This clever post-processing turns a chaotic spin into a smooth tracking shot – but it has a side effect. As the software twists the video to follow the booster, the fixed stitch line in the 360° image sweeps across the frame, appearing as a spinning semi-transparent line or “bubble.” In other words, the seam that would normally be a static vertical line in a 360° video became a rotating ring because the frame itself was being rotated continuously.
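The stabilization side effect can be illustrated with a similarly hedged toy model in Python. It assumes the two lenses look along ±x in the camera body frame, that the camera spins at a constant rate about a single axis (z), and that stabilization perfectly cancels this rotation so the rocket stays at the frame center. The function names and the example spin rate are hypothetical; this is a geometric sketch, not Blue Origin’s processing pipeline. Because the seam is fixed to the camera body, its position in the stabilized frame follows the body’s rotation.

```python
import math


def rotate_z(v, theta):
    """Rotate vector v about the z-axis by theta radians (right-handed)."""
    x, y, z = v
    c, s = math.cos(theta), math.sin(theta)
    return (c * x - s * y, s * x + c * y, z)


def seam_offset_deg(view_dir, lens_axis):
    """Angular distance in degrees from a unit view direction to the seam.

    The seam is the great circle perpendicular to the lens axis, so the
    distance is asin(|view . axis|); 0 means the seam crosses that direction.
    """
    dot = sum(a * b for a, b in zip(view_dir, lens_axis))
    return math.degrees(math.asin(min(1.0, abs(dot))))


def seam_track(spin_rate_dps, duration_s, step_s, rocket_dir=(1.0, 0.0, 0.0)):
    """Hypothetical tumble: the camera spins about z at a constant rate.

    Stabilization is modeled as perfect: the output frame is the world frame,
    with rocket_dir held at the center. The seam is fixed to the camera body,
    so its position in the output frame follows the body rotation.
    Returns (time_s, seam offset from frame center in degrees) samples.
    """
    samples = []
    steps = int(round(duration_s / step_s))
    for i in range(steps + 1):
        t = i * step_s
        theta = math.radians(spin_rate_dps * t)
        # Front-lens axis (+x in the body frame) expressed in the output frame.
        axis = rotate_z((1.0, 0.0, 0.0), theta)
        samples.append((t, seam_offset_deg(rocket_dir, axis)))
    return samples
```

With a hypothetical 90°/s spin sampled every 0.5 s over 2 s, `seam_track(90.0, 2.0, 0.5)` gives offsets of about 90°, 45°, 0°, 45° and 90°: the seam sweeps through the centered rocket at t = 1 s and would do so again every half revolution. A real tumble would combine rotations about all three axes, which tilts the seam as well as sliding it, closer to the rotating ring seen in the clip.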

PetaPixel explains that on Earth you might only see a slight line of distortion at the seam. In space, with the camera rotating rapidly, stabilization made the lens seam highly visible as a spinning artifact petapixel.com. The TechEBlog analysis further elaborates: “Raw clips would be too fast to follow, with the booster blurring past in streaks. The crew then runs it through stabilization algorithms, twisting the entire frame to center the rocket. The lens seam comes into view with that motion, and becomes a spinning bubble that’s amazing as often as annoying.” techeblog.com. Essentially, the camera was doing its job (capturing everything around it), but the effort to keep the rocket in sight turned the nearly invisible stitch into a prominent, rotating “glass” in the middle of the shot.

Reactions to this quirk were mixed. Many viewers were simply thrilled by the unique viewpoint and didn’t mind the odd artifact. Others found it jarring. “That is really annoying to watch. Please do better,” one user on X (Twitter) replied bluntly petapixel.com. Another commenter even tagged 360-camera maker Insta360, joking “Please give Jeff a camera so he can stitch his panoramas correctly.” petapixel.com The implication was that a more advanced camera or better stitching software could eliminate such distractions. In online forums, some quipped that it looked like Blue Origin “left the lens cap on and it’s flapping around,” an impression reinforced by the bubble’s glassy look and constant spinning.

Despite the snark, many acknowledged that this was a minor technical wart on an otherwise groundbreaking clip. It’s a known trade-off in 360° videography: you get a fully immersive view, but you also get a seam somewhere. Future improvements (like using more lenses or real-time stitching with better blending) might reduce the visibility of the stitch line. Bezos, for his part, didn’t seem too bothered: he identified the bubble preemptively and kept the focus on the view itself (“What a view”) petapixel.com. As the technology matures, that seam, the spinning glass, may become less noticeable, but in this maiden outing it certainly got the internet talking.

Not the First Space Camera Drone: Similar Systems in Orbit

Bezos’s free-flying space camera may sound like science fiction, but it builds on a long history of space agencies experimenting with autonomous cameras and “drones” in microgravity. Over the decades, engineers have developed several gadgets and mini-satellites to float around spacecraft for photography or inspection. Here are a few notable examples that put Bezos’s space camera in context:

- NASA’s AERCam Sprint (1997): The Autonomous Extravehicular Robotic Camera nicknamed “Sprint” was a 14-inch (36 cm) spherical free-flying camera tested outside the Space Shuttle Columbia in 1997. Astronaut Steven Lindsey remote-piloted AERCam Sprint around the Shuttle, demonstrating how a small untethered probe could inspect the spacecraft’s exterior ntrs.nasa.gov. The 15 kg sphere carried two cameras (one for navigation and one for inspection) and maneuvered with tiny nitrogen gas thrusters ntrs.nasa.gov ntrs.nasa.gov. Sprint’s successful 1-hour flight proved that a free-flying “camera ball” could operate in orbit and beam back live video – a concept well ahead of its time. NASA later worked on a smaller “Mini-AERCam”, though that project was eventually shelved.

- NASA “Astrobee” Robots (2019–Present): On the International Space Station, NASA employs a trio of cube-shaped free-flying robots called Astrobees. Each Astrobee is a 12-inch cube that can navigate inside the ISS autonomously or via remote control, using electric fans to propel it in the weightless environment nasa.gov. Equipped with cameras and sensors, Astrobees can document experiments, take inventory, and even videotape astronauts at work, effectively serving as robotic camera operators and assistants nasa.gov nasa.gov. These cute flying cubes (named Honey, Queen, and Bumble) carry out routine monitoring tasks so that astronauts can focus on more complex work. Astrobee builds on earlier ISS free-flyer experiments (like MIT’s SPHERES robots) and shows how autonomous camera-bots can function as a crew’s extra eyes and hands in space.

- JAXA’s Int-Ball (2017): The Japanese space agency (JAXA) developed a charmingly futuristic camera drone known as Int-Ball (Internal Ball Camera). Debuted on the ISS’s Japanese “Kibo” module, Int-Ball is a spherical drone about 15 cm in diameter that can float autonomously or be remote-controlled from Earth maxongroup.com. It has two large “eyes” (cameras) and can record HD video and photos of crew activities. Int-Ball’s mission is to “look over the shoulder” of astronauts – it can hover at their side, filming their work without distracting them maxongroup.com. JAXA estimates astronauts spend a sizable chunk of time taking photos/video for documentation; Int-Ball could save 10% or more of crew time by taking over that job maxongroup.com. Inside its compact body, Int-Ball uses reaction wheels and small fans to maneuver, and it relays footage to ground control in real time maxongroup.com. There are even plans to upgrade Int-Ball so that future versions might operate outside the space station as free-flying external cameras maxongroup.com, which would truly be akin to having autonomous camera drones on spacewalks.

- D-Orbit’s ION Satellite Carrier (2020s): In the commercial space sector, D-Orbit (an Italian company) has developed the ION Satellite Carrier, a free-flying spacecraft designed to deploy smaller satellites and test new technologies in orbit. Essentially a self-propelled CubeSat deployer, ION is released from a rocket and then flies on its own, releasing client CubeSats into precise orbits over time space.skyrocket.de. While its primary job is delivery, ION carries sensors and sometimes cameras to monitor these deployments. One ION vehicle even served as a rendezvous target for a demonstration: in 2024, startup Starfish Space’s “Otter Pup” spacecraft successfully approached a D-Orbit ION and captured images of it from 3 km away during a close-proximity flyby satellitetoday.com satellitetoday.com. (Redwire Space provided the camera system for that mission satellitetoday.com.) The ION/Otter Pup experiment shows that autonomous small satellites can meet up and photograph each other in orbit, a capability useful for inspection and servicing in space. D-Orbit’s free-flying carrier, while not primarily a camera platform, exemplifies the new generation of versatile small spacecraft that can roam independently in orbit, much like Blue Origin’s camera pod did, albeit for a shorter suborbital jaunt.

- Camera CubeSats for Missions: A recent trend is to include dedicated tiny camera satellites on major missions to document key events. For example, when NASA launched the uncrewed Artemis I mission to the Moon in 2022, one of the secondary payloads was ArgoMoon, a 6U CubeSat built by the Italian company Argotec and coordinated by the Italian Space Agency (ASI) nasa.gov. After deployment from the Space Launch System rocket, ArgoMoon’s task was to perform autonomous visual inspections around the spent upper stage and capture historic photos of the Moon and Earth nasa.gov. ArgoMoon carried high-definition cameras and imaging software to record the rocket’s upper stage, the Earth, and the Moon, providing documentation of Artemis I’s journey nasa.gov. In essence, it acted as a tiny free-flying photographer in deep space. NASA noted that ArgoMoon is a forerunner for technologies that could inspect satellites or hardware in deep space that weren’t designed to be serviced nasa.gov. Similarly, on NASA’s DART mission (the 2022 asteroid impact test), a small Italian CubeSat called LICIACube was released to trail the main spacecraft and photograph the asteroid collision, another example of a deployable camera buddy flying alongside a mission nasa.gov phys.org. All these efforts mirror what Blue Origin did on a smaller scale: deploying an independent camera unit to capture angles and data that primary spacecraft can’t get on their own.

In light of these examples, Blue Origin’s free-flying camera is part of a broader movement towards autonomous imaging platforms in space. What makes Bezos’s demo special is that it was relatively low-cost and short-lived – essentially tossing out a camera for a few minutes of suborbital filming – and it reached a massive audience through social media. It bridges the gap between high-end NASA experiments and consumer tech, suggesting that even private space tourism flights might soon feature free-flying camera drones to film the experience. As technology miniaturizes, we can expect many more “eyes in the sky” accompanying rockets and spacecraft in the near future.

Implications for Space Exploration and Satellite Photography

The successful use of a free-flying camera on New Shepard hints at significant implications for how we explore and document space going forward. For one, it opens new possibilities in space photography and public engagement. The footage provided a visceral, almost cinematic perspective of a rocket in flight, something rarely seen outside of science fiction. This could become a staple of future launches, turning routine mission data into stunning visual experiences. Blue Origin’s test shows that instead of just relying on telemetry and onboard cams, missions can deploy a camera satellite to make everyone watching feel like they have a “front row seat” to spaceflight techeblog.com. As TechEBlog noted, such views can “turn dry telemetry into something tangible,” making spaceflight more accessible and exciting to the public techeblog.com. This has obvious outreach and educational benefits, inspiring the next generation of engineers and explorers with real footage from novel angles.

From a technical and operational standpoint, having free-flying cameras could greatly aid spacecraft operations and safety. Imagine a future spacecraft or space station that can release a small camera drone to inspect its exterior for damage, instead of sending an astronaut on a risky spacewalk. This was exactly the vision behind NASA’s AERCam Sprint and the newer inspection microsatellites. A free-flyer can get up-close views of areas that fixed cameras or human eyes can’t easily see. For example, after a launch, a disposable camera drone might check a booster for any signs of damage or anomalous behavior during ascent. During docking or landing operations, an external camera pod could observe alignment and provide an independent viewpoint to mission control.

There are also science and engineering benefits. High-quality imagery of rocket separations, plume behavior, and spacecraft deployments can provide data for engineers to refine their designs. In the New Shepard case, the camera captured the capsule separation from an external angle, data that could be useful in analyzing how the capsule and booster behave right after separation in microgravity. Extend this capability to orbital missions: a camera satellite could monitor a stage separation or a satellite deployment in real time, possibly catching anomalies (like debris or partial deployment) that onboard instruments might miss.

For human spaceflight and exploration, autonomous cameras might become part of the standard toolkit. Future lunar missions or space station crews could use free-flying camera bots to scout around a lander or habitat. NASA’s current Artemis plans include the “Gateway” station around the Moon; one could imagine small cameras periodically released to inspect Gateway’s exterior or to film arriving spacecraft as a PR bonus. Even on Mars, where the thin atmosphere makes flight extremely difficult, NASA has already flown the Ingenuity helicopter as an aerial scout for the Perseverance rover. Ingenuity’s success shows the value of a detached viewpoint; similarly, in orbit, detached camera sats can serve as eyes in places where we otherwise have blind spots.

There is also a strategic aspect: space domain awareness and satellite inspection. With the proliferation of satellites and space junk, being able to capture images of other objects in space has become important for both civil and defense purposes. Starfish Space co-founder Dr. Trevor Bennett, after their Otter Pup demo, remarked that “Space domain awareness, capturing images of other objects in space, is often a mission in and of itself.” satellitetoday.com In other words, deploying a camera to examine a satellite or orbital debris can be a valuable standalone mission. Blue Origin’s free-flyer might not have been intended for “inspection” per se, but it demonstrates the principle on a small scale. In the future, every major satellite or spacecraft might carry a few “camera bee” drones that can be sent out to take a look around, perform surveillance, or even aid in docking and rendezvous by providing additional viewpoints.

However, there are challenges and considerations. One is retrieval or disposal: what do you do with the camera after the show is over? In New Shepard’s case, it’s unclear whether the camera was designed to survive and be reused. It may simply have fallen back to the ground, or perhaps it parachuted down separately (Blue Origin hasn’t said) techeblog.com. For orbital use, a free-flying camera sat would ideally either redock with its host or deorbit itself after completing its task, to avoid adding new space debris. That adds complexity (propulsion, guidance, capture mechanisms) to what might otherwise be a simple camera. In some cases, such as one-time CubeSats on deep space missions, the imaging spacecraft is indeed consumed or abandoned after the mission (e.g., LICIACube flew past the asteroid and then continued into solar orbit after snapping DART’s impact). Future designs might include reusable camera drones that can come back for recharging, akin to Astrobee returning to a dock on the ISS.

Another consideration is stabilization and control. Blue Origin’s camera was essentially in free-fall and had no active stabilization (hence its spinning). For longer use, you’d want a camera platform that can orient itself (using, say, reaction wheels or small jets) to keep subjects in frame. This requires power and smarts onboard, raising the cost and complexity. Yet, with advances in miniaturization, even micro-satellites can have surprising autonomy now – as seen with ArgoMoon using AI-based algorithms for autonomous navigation and fault recovery in deep space nasa.gov nasa.gov. In short, the technology to make smart, self-steering camera satellites is rapidly evolving.

Reactions from Elon Musk and the Space Community

Jeff Bezos’s space camera test did not go unnoticed: it quickly lit up conversation among space enthusiasts and rival billionaires alike. On X (formerly Twitter), Elon Musk offered a brief compliment, replying “epic view” to Bezos’s video post petapixel.com. It’s not every day Musk praises something Blue Origin does, so the comment underscored that the visual was indeed striking. Space fans across social media echoed that sentiment, marveling at how the clip looked “like it’s straight out of a sci-fi movie” and how it showcased the curvature of Earth and the rocket in a single shot.

Amid the awe, there was also plenty of tongue-in-cheek critique. The spinning seam became a meme in itself, as mentioned earlier. Some joked that even in space, Windows needs a better screensaver (riffing on the circular “loading” icon similarity), or that Bezos’s camera was “buffering reality.” Others playfully suggested that next time, “maybe use a GoPro – at least those don’t spin a ghost orb in your video.” The gentle ribbing highlights that while the idea was cool, the execution left room for improvement in the eyes of a tech-savvy audience.

Professional photographers and camera geeks also weighed in, discussing the technical aspects. Many immediately recognized the artifact as a stitching issue common in 360° video. Some offered tips: use a different 360 camera model, adjust the stabilization algorithm, or even try a single-lens ultrawide camera to avoid seams. (However, a single-lens solution wouldn’t capture a full 360° sphere, so it’s a trade-off.) The fact that Bezos’s post explicitly mentioned the seam between the two 180° lenses suggests Blue Origin anticipated these questions, and by addressing it upfront, Bezos turned a potential negative (the weird bubble) into an educational moment about how their camera works.

Within the space industry, engineers noted that despite the quirk, the experiment was a win. Getting a device to free-float near a rocket and record usable footage is non-trivial. There are concerns like ensuring the camera doesn’t interfere with the rocket (imagine if it had bumped into the booster or engines; in this suborbital flight, though, separation was gentle and the camera likely drifted away safely). There’s also the challenge of getting the video back: presumably the camera was either recording onboard or transmitting video to the capsule or booster to relay down. Pulling off this stunt hints at Blue Origin’s confidence in their vehicle’s stability and their willingness to push boundaries for the sake of innovation and public outreach.

Publicly, Blue Origin has been fairly quiet beyond Bezos’s reveal. The company did not livestream the NS-35 mission (it was a closed, uncrewed research flight), so this camera footage served as the public’s main glimpse of the launch. It certainly generated more buzz than a standard press release. Space journalists hailed it as a clever way to generate excitement for an otherwise routine flight. PetaPixel, a photography news site, highlighted the clip and dissected the stitching issue, while tech outlets like TechEBlog and others picked up the story techeblog.com techeblog.com. The consensus was that this might become a new trend for rocket companies – expect more free-flying or deployable cameras on future missions to give that cinematic flair.

The Future of Space-Based Imaging: New Frontiers and Innovations

Bezos’s free-flying camera is one piece of a larger puzzle in next-gen space imaging. As we look ahead, we can foresee a convergence of trends that will make space photography and video more immersive, autonomous, and ubiquitous.

One trend is the push for higher fidelity and immersive media from space. Companies and organizations are already experimenting with virtual reality (VR) and 360° video in orbit – for instance, astronauts on the ISS have filmed in 360° for VR documentaries. A free-flying 360 camera fits right into that narrative, potentially enabling VR experiences of riding alongside a rocket or floating outside a spacecraft. It’s not hard to imagine a future Blue Origin tourist flight where paying customers get a 360° video of their trip to space, viewable in a VR headset for a fully immersive memory. The tech demonstrated here is a stepping stone to that reality.

Another key area is on-orbit servicing and inspection, which we touched on. In the coming years, there are multiple missions planned where spacecraft will rendezvous with, repair, or refuel other satellites. Almost all of these will rely on cameras to navigate and evaluate target spacecraft. Having independent camera probes could greatly aid these missions. In fact, space agencies are actively developing such capabilities – for example, the European Space Agency’s SpEye project aims to test a two-satellite system where a small “inspector” nanosatellite autonomously flies around a “mothership” satellite to image it asi.it. This concept is strikingly similar to what Blue Origin’s cam did with the New Shepard booster, just on a more permanent and controlled scale. Demonstrations like SpEye (planned for the near future) will validate the software, multi-spectral cameras, and autonomy needed for a nanosatellite to safely maneuver around another object and photograph it from all angles.

Earth observation and science will benefit too. We’re entering an era where swarms of small satellites operate in coordination. One could imagine a swarm where one satellite is the “subject” and others are “cameramen” capturing phenomena from multiple viewpoints. For example, during a solar storm or atmospheric re-entry, having multiple free-flying cameras could yield valuable 3D data. We already deploy cameras in odd places: think of the descent cameras on Mars landers that recorded their own parachutes deploying, or CubeSats like LICIACube watching an asteroid impact. These are specialized cameras built to watch a single event, and LICIACube was a true free-flyer. Expect more “camerasats” tasked purely with observing either spacecraft or natural events (such as volcanic eruptions seen from orbit) in ways fixed satellites cannot.

The commercialization of space imagery is another factor. With companies like SpaceX, Blue Origin, and Rocket Lab competing not just to launch but to capture the public’s imagination, stunning visuals are a key differentiator. SpaceX made a splash years ago with footage of simultaneous booster landings and of Starman riding a Tesla in orbit, and more recently with live streams of Starship test flights. Blue Origin’s free-flying camera ups the ante, suggesting a future where every launch comes with Hollywood-grade footage from all angles. This could spawn new business opportunities: companies might deploy independent camera drones as a service (“we’ll film your satellite deployment for a fee”). It also raises interesting questions about intellectual property and jurisdiction. If a third-party camera sat flies around imaging someone else’s spacecraft, is that allowed? Regulations may need to catch up to these possibilities, ensuring such imaging doesn’t compromise security or safety.

Finally, there’s the inspirational aspect. We’ve all seen iconic space photos: Earthrise from Apollo, the Blue Marble, the “selfie” of the Curiosity rover on Mars, etc. Many of those required either a human photographer or a robotic arm. Free-flying cameras open up new angles for iconic shots. Imagine a photo of an astronaut on a spacewalk taken by a companion drone floating a few meters away – no tether, no fellow astronaut needed to hold a camera. Or consider a probe approaching Mars, ejecting a mini-cam that hangs back and snaps a picture of the mothership entering the Martian atmosphere. These kinds of shots would be both scientifically useful and deeply inspiring, blending engineering with artistry.

In conclusion, the free-flying space camera demonstrated by Jeff Bezos and Blue Origin is more than a gimmick; it’s a glimpse into the future of how we will observe and share our adventures beyond Earth. By combining existing consumer camera tech (360° stitching) with aerospace engineering (untethered free flight in microgravity), Blue Origin created a small marvel: a camera that briefly became an astronaut with a lens, floating alongside a rocket. Yes, the spinning “bubble” in the video reminded us that it’s an emerging technology with kinks to iron out. But those kinks are likely to be solved in time. Today a spinning seam; tomorrow, perhaps, a perfectly stable drone camera giving us live 360° feeds from orbit.

The reaction from the public and experts shows there’s an appetite for these kinds of innovations. People want to vicariously experience space, and technology is finally at a point to deliver that in rich detail. As free-flying cameras become more common, whether on suborbital hops, orbital launches, or deep space missions, we’ll gain not only prettier pictures but also valuable tools for exploration. The sky (and space) is no longer the limit for camera tech. From Blue Origin’s test to planned inspector nanosatellites like SpEye, we’re entering an era where every spacecraft might have a little photographer buddy nearby. And as Bezos’s viral video proved, the results can be truly spectacular, seams and all.

Sources: Jeff Bezos via X (petapixel.com); PetaPixel (M. Growcoot, petapixel.com); TechEBlog (techeblog.com); NASA and JAXA program reports (ntrs.nasa.gov, nasa.gov, maxongroup.com); Via Satellite (R. Jewett, satellitetoday.com); NASA Artemis I mission blog (nasa.gov).