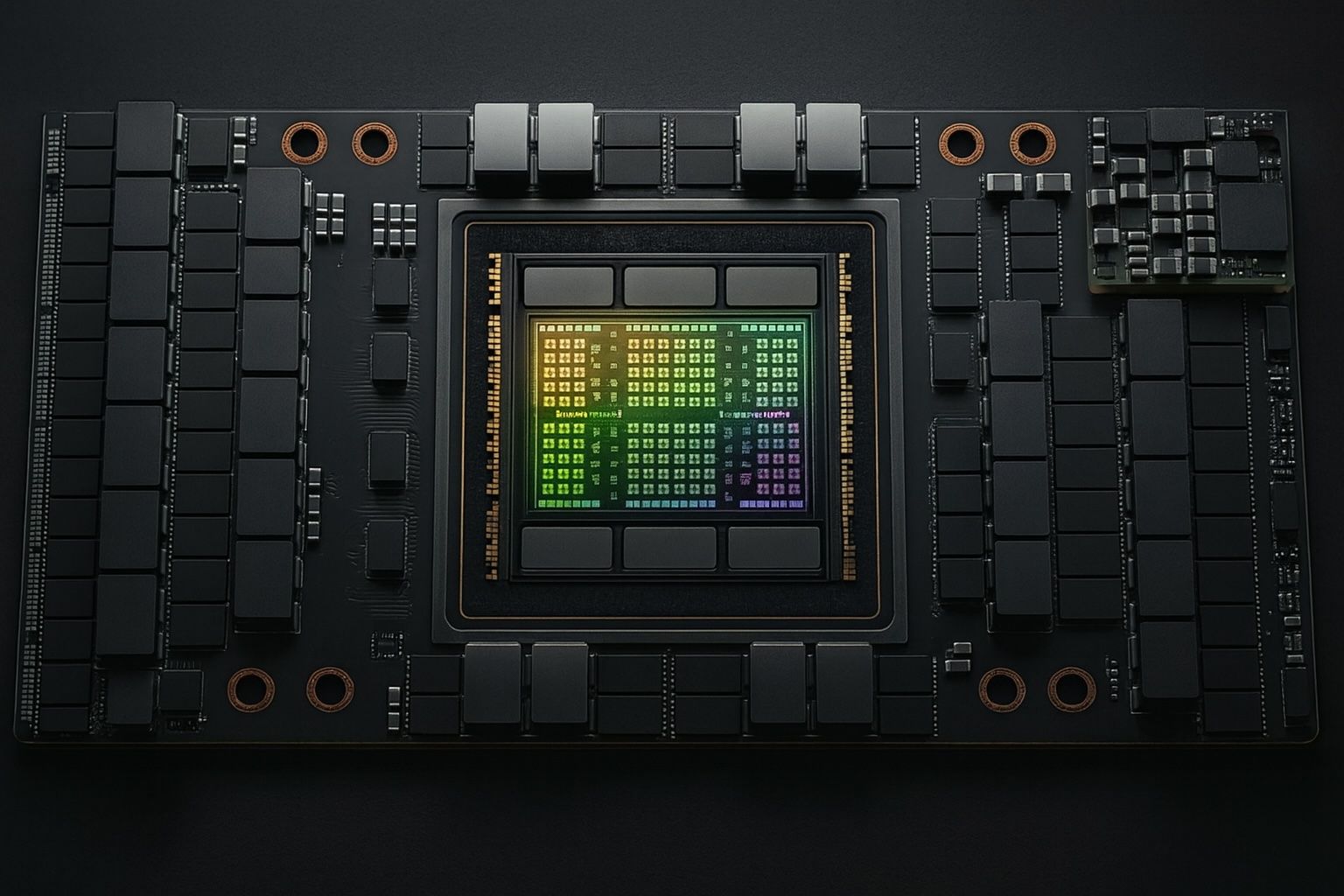

OpenAI’s Mega-Deals with Nvidia & AMD Spark a $1 Trillion AI Frenzy

OpenAI Fuels a $1 Trillion AI Hardware Boom

OpenAI, the creator of ChatGPT, has positioned itself at the center of an AI hardware buying frenzy that is reshaping the tech industry. In the past few weeks (early October 2025),