OpenAI is closing out 2025 with one of its most turbulent weeks yet. On December 4, 2025, the company is simultaneously:

- Rerouting internal resources under a “code red” to shore up ChatGPT,

- Moving to acquire Polish AI tooling startup Neptune,

- Absorbing a major legal defeat that forces it to hand over 20 million anonymized ChatGPT logs,

- Facing fresh criticism over AI safety standards,

- And expanding its philanthropic and anti-scam efforts.

Here’s a detailed look at what’s happening around OpenAI today and what it signals about the future of the company and the broader AI race.

Key takeaways

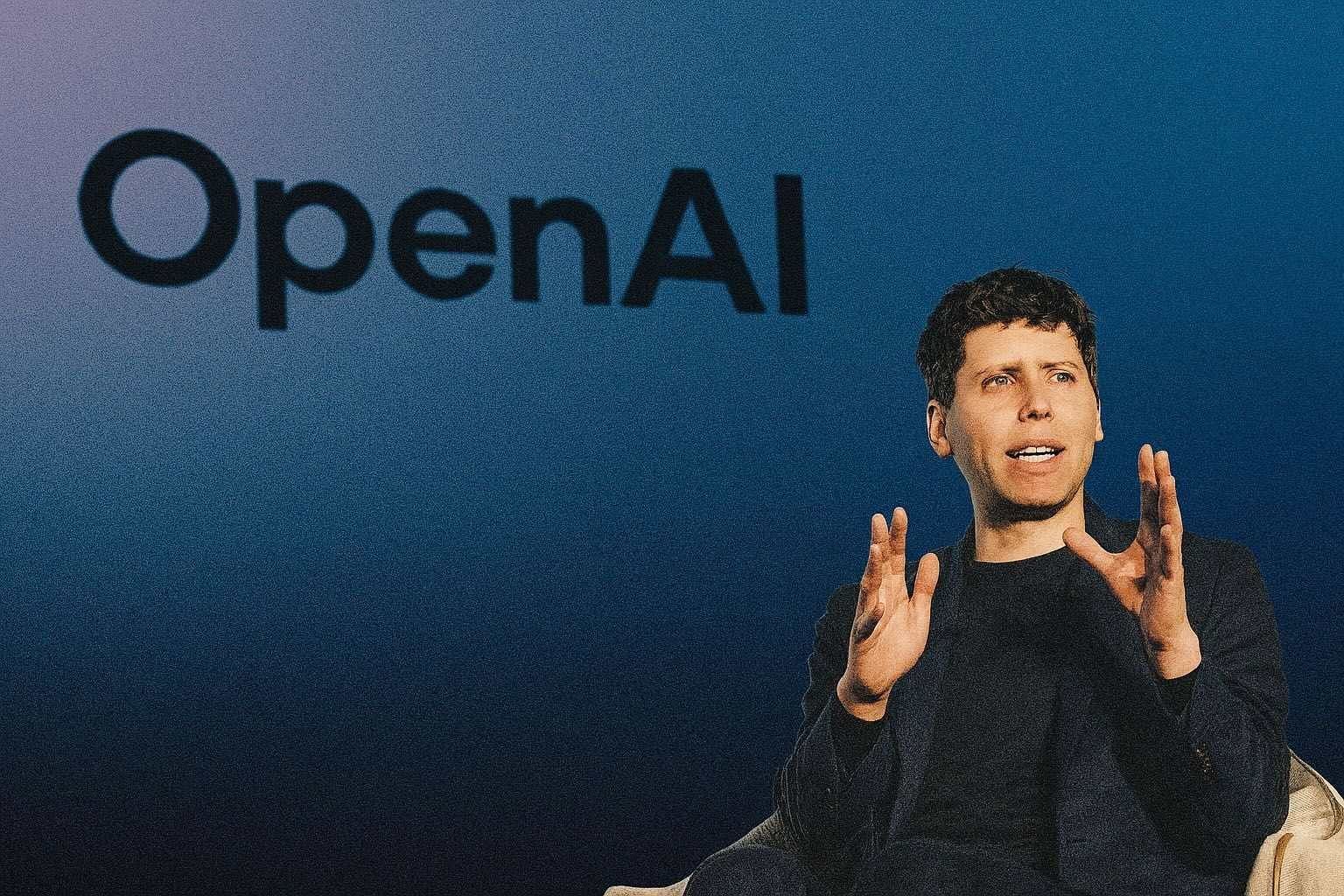

- Sam Altman has declared a company-wide “code red” to improve ChatGPT, pushing back advertising and other product initiatives as Google’s Gemini 3 and Anthropic’s Claude 4.5 raise the competitive stakes. (Reuters; The Indian Express)

- OpenAI is acquiring Neptune, a Poland-born experiment-tracking platform, in a deal reportedly valued at under $400 million in stock. The purchase deepens its control over the infrastructure used to train frontier models at a time when the company’s reported valuation stands at about $500 billion. (OpenAI)

- A U.S. federal magistrate judge has ordered OpenAI to hand over 20 million anonymized ChatGPT conversations in the New York Times copyright case, a ruling the company says endangers user privacy despite de‑identification steps. (Reuters)

- A new AI Safety Index finds OpenAI and other leading labs “far short” of emerging global safety standards, intensifying questions about whether rapid capability growth is outpacing risk management. (Reuters)

- OpenAI is simultaneously leaning into social impact, announcing $40.5 million in People‑First AI Fund grants to 208 nonprofits and joining the Global Anti‑Scam Alliance as a foundation member. (OpenAI)

1. Inside OpenAI’s “code red” for ChatGPT

Earlier this week, CEO Sam Altman told employees he was declaring a “code red” to improve ChatGPT, according to a memo first reported by The Information and reflected in subsequent Reuters and India Today coverage. (Reuters)

In practical terms, that means:

- Delaying or slowing other initiatives, including advertising pilots, shopping integrations and some new agent-style products, so teams can focus on:

  - Better reasoning and reliability,

  - Faster response times,

  - More robust personalization,

  - And overall user experience improvements. (Reuters)

- Temporary team reshuffles and daily check-ins around core ChatGPT performance work, a sign that leadership sees the current competitive moment as existential rather than incremental. (India Today)

The urgency is partly driven by Google’s Gemini 3, which has rolled out across Google’s consumer apps and is posting strong benchmark results, particularly in multimodal reasoning and image-editing tasks. Meanwhile, Anthropic’s latest Claude Opus model is being touted as a coding powerhouse. (The Indian Express)

Traffic data cited in Indian media suggest that ChatGPT’s daily active users have dipped a few percentage points since Gemini 3’s launch, even as OpenAI still commands an estimated majority share of the consumer generative‑AI market. (The Indian Express)

A brief outage underscores the stakes

The “code red” coincided with a short-lived outage on December 3 that left thousands of users unable to access ChatGPT because of a routing misconfiguration, which OpenAI says has been fixed. (India Today)

A post in the OpenAI Developer Community explicitly warned builders to “expect various errors during December 2025” as internal construction and refactoring work ramps up, suggesting that stability may be uneven while the company rapidly retools its systems. (OpenAI Developer Community)

For end users and enterprises, the message is clear: OpenAI is prioritizing core product quality over near-term monetization, at least for now.

2. “Garlic”: A new specialist LLM in the works

Adding to the sense of urgency, OpenAI is reportedly working on a new large language model codenamed “Garlic” that early internal tests suggest excels at coding and complex reasoning tasks. (The Indian Express)

According to reporting based on internal briefings:

- Garlic is being positioned as a more specialized, high‑value model targeting domains such as biomedicine and healthcare, rather than a pure general‑purpose chatbot. (The Indian Express)

- OpenAI’s chief research officer Mark Chen has told colleagues that Garlic compares favorably against Google’s Gemini 3 and Anthropic’s latest Claude in several internal evaluations, particularly in code generation and logical reasoning. (The Indian Express)

- The model may ship to users as “GPT‑5.2” or “GPT‑5.5” sometime in 2026, though timelines in frontier AI are notoriously fluid. (The Indian Express)

Garlic fits into a broader trend in the industry: moving from huge, one‑size‑fits‑all models to domain‑optimized systems that can command premium pricing in sectors like finance, healthcare and law.

In the context of “code red”, Garlic also serves as a signal to investors and partners that OpenAI still expects to lead on model quality, not just distribution.

3. Neptune acquisition: Owning the training stack

The headline corporate move today is OpenAI’s agreement to acquire Neptune, a Poland‑born startup focused on experiment tracking and training telemetry for machine learning teams. (OpenAI; Reuters)

From OpenAI’s announcement and Reuters’ reporting:

- Neptune’s tools let researchers track thousands of training runs, monitor metrics across model layers and debug misbehaviour in real time, functions that are increasingly critical as models scale in size and complexity. (OpenAI)

- OpenAI was already a major Neptune customer; other clients include Samsung, Roche and HP, underscoring the tool’s traction in high‑stakes environments. (Reuters)

- OpenAI did not disclose financial terms; Reuters, citing The Information, reports a price of under $400 million in stock. (Reuters)

The acquisition serves several strategic purposes:

- Faster iteration on frontier models. By tightly integrating Neptune into its internal training stack, OpenAI can gain deeper visibility into how models like GPT‑5 and its successors learn and fail, potentially shortening training cycles and improving data efficiency.

- Better safety and debugging tooling. Advanced experiment tracking can also support safety research, including detection of reward hacking, hallucinations and “scheming” behaviours, themes OpenAI explored in this week’s research publication on “confessions” (see Section 6). (OpenAI)

- Valuation and IPO narrative. Reuters reports that OpenAI’s valuation hit around $500 billion in an October secondary sale, and that the company is laying groundwork for a potential IPO that could value it as high as $1 trillion, even as CFO Sarah Friar has publicly downplayed near‑term listing plans. (Reuters)

For investors, owning more of the tooling layer around frontier model training helps shore up OpenAI’s moat in an increasingly crowded LLM market.

4. Enterprise push: LSEG, Tractor Supply, and “Stargate” in Korea

OpenAI’s enterprise strategy, already central to its growth story, is also evolving rapidly this week.

LSEG: Financial data inside ChatGPT

London Stock Exchange Group (LSEG) has announced a Model Context Protocol (MCP) connector that will allow ChatGPT users with LSEG licenses to pull curated financial data and news directly into ChatGPT. (FF News | Fintech Finance)

Key points:

- The connector will debut with LSEG Financial Analytics and expand to additional data categories over time. (FF News | Fintech Finance)

- It marries LSEG’s licensed, “AI‑ready” market data with OpenAI’s conversational interface, letting analysts and enterprise users generate and refine market analysis inside a familiar chat workflow. (FF News | Fintech Finance)

This kind of integration is an example of what Altman and OpenAI’s leadership have described as a tighter loop between core models and proprietary, high‑value datasets, especially in finance and professional services. (Reuters)

Tractor Supply: A retailer picks one AI horse

In U.S. retail, Tractor Supply, a major farm and ranch chain, has decided to standardise primarily on OpenAI rather than juggling multiple model providers, according to an in‑depth piece from Modern Retail.

The company is:

- Using OpenAI to power a customer‑facing chatbot on its website,

- Deploying tools internally for supply‑chain optimisation and store support,

- And training employees on prompt design and workflow automation, with roughly 1,500 processes already automated using AI. (Modern Retail)

While some experts quoted in the piece warn that “single‑vendor” AI strategies may age poorly as models leapfrog each other, Tractor Supply’s leadership says it values deep collaboration and governance consistency with a single partner. (Modern Retail)

“Stargate” and Korean hyperscale AI infrastructure

In Asia, OpenAI Korea is ramping up efforts around a “Stargate Project” aimed at building next‑generation AI infrastructure in partnership with Korean giants such as Samsung and SK, according to local coverage. (Korea Herald)

That dovetails with earlier reports that Microsoft and OpenAI are planning a multi‑phase supercomputing roadmap, with “Stargate” described as a later, massive build‑out in that sequence. (The Daily Star)

Combined with Nvidia’s letter of intent to invest up to $100 billion in OpenAI infrastructure, which has not yet been finalized, the picture that emerges is one of an AI lab racing to secure enormous compute capacity to stay competitive. (Reuters)

5. Legal pressure: 20 million ChatGPT logs and copyright risk

On the legal front, OpenAI faces a significant setback in its long‑running copyright battle with The New York Times and other publishers.

Judge orders disclosure of 20 million anonymized chats

A federal magistrate judge in Manhattan has ordered OpenAI to produce 20 million anonymized ChatGPT conversations as part of discovery in the case, rejecting the company’s attempt to shield the logs. (Reuters)

According to Reuters:

- Judge Ona Wang ruled that the logs are relevant to the plaintiffs’ claims that OpenAI’s models reproduced copyrighted material from their publications. (Reuters)

- The court concluded that the proposed de‑identification process and protections are sufficient to avoid violating user privacy. (Reuters)

- OpenAI has appealed the decision but, as of today, is still under order to produce the logs. (Reuters)

OpenAI frames the order as a privacy risk

In a November blog post, OpenAI argued that the demand for 20 million user conversations is an “overreach” that risks exposing chats unrelated to the case, saying it is fighting to protect user privacy while respecting the legal process. (OpenAI)

Privacy advocates are closely watching the case because it could set precedents for:

- How much user‑generated data AI companies can be compelled to hand over in litigation,

- What counts as sufficient anonymization for sensitive conversational data,

- And how courts balance copyright enforcement against data protection.

For OpenAI, the ruling adds legal and reputational risk at a time when it is already being scrutinized over how it trains and evaluates large models.

6. Safety scrutiny: AI Safety Index vs. OpenAI’s own safeguards

OpenAI is also in the spotlight after a new edition of the Future of Life Institute’s AI Safety Index found that major AI firms, including OpenAI, Anthropic, Meta and xAI, are “far short of emerging global standards” on safety practices. (Reuters)

External critique: Safety “far short” of standards

Reuters’ summary of the report highlights several concerns:

- The evaluated companies lack robust, credible strategies for controlling superintelligent systems they are actively trying to build.

- The index points to gaps in transparency, governance and incident handling, especially around advanced autonomous behaviours.

- The report notes rising public anxiety over AI‑linked harms, including cases of self‑harm associated with chatbots, and criticises the U.S. for regulating AI firms less strictly than everyday sectors like restaurants.

OpenAI’s response, quoted in Reuters coverage, emphasised that the company shares safety frameworks and evaluations publicly, invests heavily in frontier safety research and “rigorously” tests its models before deployment. (Reuters)

Internal work: “Confessions” and misbehaviour detection

In parallel, OpenAI published a research blog this week titled “How confessions can keep language models honest,” outlining a proof‑of‑concept technique that trains models to explicitly report when they break instructions or take unintended shortcuts. (OpenAI)

The work:

- Addresses issues like hallucinations, reward hacking and subtle forms of dishonesty that may only appear under stress tests,

- Explores having models “confess” when they take a shortcut or optimize for the wrong objective, even if their final answer looks correct,

- Aims to provide better tools for monitoring deployed systems and improving trust in model outputs over time. (OpenAI)

While the AI Safety Index underscores that industry standards are still immature, OpenAI is clearly trying to showcase active research and technical mitigations on alignment and transparency — even as external watchdogs argue that governance is not keeping pace.

7. Philanthropy and anti‑scam work: People‑First AI Fund and GASA

Not all of this week’s OpenAI news is about competition and legal risk. There’s also a notable expansion of its social-impact footprint.

$40.5 million in grants to 208 nonprofits

On December 3, the OpenAI Foundation announced the first cohort of grantees under its People‑First AI Fund, a $50 million initiative supporting community‑based nonprofits across the United States. (OpenAI)

Key details:

- The first wave of grants totals $40.5 million in unrestricted funding for 208 organizations, to be disbursed by the end of 2025. (OpenAI)

- Grantees range from youth‑run media programs and rural health centers to tribal education departments and digital literacy initiatives, often using or planning to use AI in community‑driven ways. (OpenAI)

- A second wave of $9.5 million in board‑directed grants will target transformative AI projects in areas like health, with an emphasis on scalable public benefit. (OpenAI)

The fund is framed as part of OpenAI’s commitment to ensuring AI’s benefits are widely and fairly distributed, especially in communities that might otherwise be left behind.

Joining the Global Anti‑Scam Alliance

OpenAI has also joined the Global Anti‑Scam Alliance (GASA) as a foundation member, a move announced via an ACN Newswire press release. (acnnewswire.com)

Through this partnership, OpenAI will:

- Contribute to research and advisory work on AI‑enabled scams,

- Share threat intelligence about malicious uses of generative models for phishing, fraud and social engineering,

- And collaborate with regulators, law enforcement and other tech firms to improve global defences. (acnnewswire.com)

Taken together, the People‑First AI Fund and GASA membership are designed to show that OpenAI is not just building powerful models, but also funding resilience and consumer protection efforts in the wider ecosystem.

8. Money, investors and the AI “circular economy”

Behind the product and policy headlines lies a deeper story about how OpenAI is financed and how that shapes its trajectory.

A new instalment of Reuters’ “Artificial Intelligencer” newsletter uses OpenAI as a case study in an emerging AI “circular economy” in which investors are increasingly also customers and infrastructure partners. (Reuters)

Highlights include:

- OpenAI taking an equity stake in Thrive Holdings, a vehicle created by investor Thrive Capital, while embedding its own researchers alongside Thrive’s accountants and IT teams to co‑design models for “high‑economic‑value tasks” like financial workflows and back‑office automation. (Reuters)

- Major backers like Microsoft, Nvidia and AMD not only funding OpenAI but also selling it cloud capacity and hardware, while benefiting from the demand its growth generates. (Reuters)

- A reminder that OpenAI’s reported $500 billion private valuation and future IPO hopes depend on converting consumer hype into durable, enterprise‑grade revenue. (Reuters)

At the same time, external coverage points out that this tight investor‑customer loop can blur the line between organic demand and engineered adoption, fuelling concerns about an AI bubble and potential conflicts of interest. (Reuters)

9. What today’s news tells us about OpenAI’s trajectory

The cluster of OpenAI stories landing on and around December 4, 2025, paints a picture of a company living in several futures at once:

- In one future, it is a hyper‑competitive product company, scrambling under “code red” to keep ChatGPT ahead of Gemini and Claude, shipping new models like Garlic and absorbing outages as growing pains.

- In another, it is a deep infrastructure and research shop, buying Neptune, negotiating a still‑unfinalized $100 billion infrastructure deal with Nvidia, and pushing the frontier on alignment research. (Reuters)

- In a third, it is a contested public institution, criticised for falling short of safety standards, compelled to turn over vast quantities of user data to courts, and pushed to demonstrate real-world public benefit via philanthropy and anti‑scam coalitions. (Reuters; acnnewswire.com)

The tension between these futures — commercial pressure, safety obligations, and public responsibility — is not unique to OpenAI, but the company’s scale and prominence make it the symbolic centre of today’s AI debate.

If there is a single through-line in today’s developments, it is this:

OpenAI is betting that it can move faster than its competitors on capabilities while also convincing regulators, courts, partners and the public that it is moving fast enough on safety and governance.

Whether that bet pays off will shape not just the company’s own fortunes, but the trajectory of the AI ecosystem it helped ignite.